Detect and Track Face on Android Device

This example shows how to detect and track a face captured by the camera of an Android® device using the Simulink® Support Package for Android Devices. The model tracks the face even when the person tilts their head or moves toward or away from the camera.

Introduction

Object detection and tracking are important in many computer vision applications, including activity recognition, automotive safety, and surveillance. In this example, you use a Simulink model to detect a face in a video frame, identify facial feature points, and track those points across frames. The output video frame shows the detected face inside a bounding box, with the tracked feature points marked. If the face is not visible or goes out of focus, the model tries to reacquire the face and then resumes tracking. The model detects and tracks only one face at a time.

Example Model

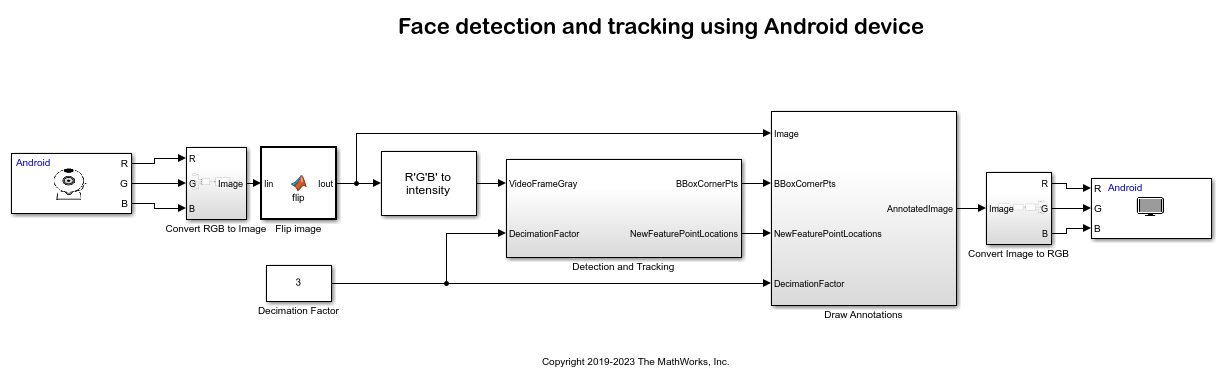

Open the androidFaceDetectionAndTracking Simulink model.

This example uses the Camera block to capture video frames from the back camera of an Android device. The Detection and Tracking subsystem takes the video frame as input and outputs a bounding box and feature points for the detected face. The Draw Annotations subsystem then draws a rectangle through the corner points of the bounding box and inserts markers for the feature points inside the box. The annotated frame is then sent to the Video Display block, which shows it on the device screen.

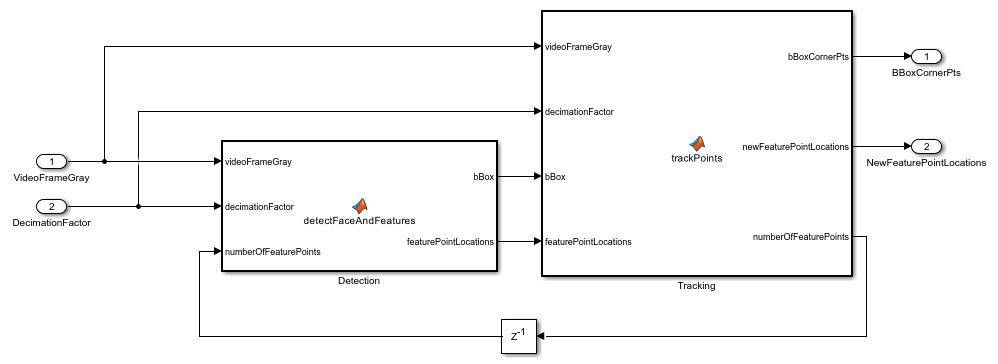

Detection and Tracking

In this example, the vision.CascadeObjectDetector (Computer Vision Toolbox) System object™ detects the location of the face in the captured video frame. The cascade object detector uses the Viola-Jones detection algorithm and a trained classification model for detection. After the face is detected, facial feature points are identified using the Good Features to Track method proposed by Shi and Tomasi.
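
The Good Features to Track criterion scores a pixel by the smaller eigenvalue of the 2×2 structure tensor, that is, the sums of squared and cross-multiplied image gradients over a small window. A large minimum eigenvalue means the intensity changes in two directions, so the point is a corner that a tracker can follow. The pure-Python sketch below illustrates only that score. It assumes a grayscale image stored as a list of rows, central-difference gradients, and an unweighted 3×3 window, and it omits the quality threshold and minimum-distance suppression of the full method. The function names are hypothetical, and this is not the toolbox implementation.

```python
import math


def min_eigen_score(img, r, c, half=1):
    """Shi-Tomasi score at pixel (r, c): the smaller eigenvalue of the
    structure tensor summed over a (2*half+1) x (2*half+1) window."""
    sxx = syy = sxy = 0.0
    for i in range(r - half, r + half + 1):
        for j in range(c - half, c + half + 1):
            # Central-difference gradients; the caller keeps the window
            # at least one pixel inside the image border.
            gx = (img[i][j + 1] - img[i][j - 1]) / 2.0
            gy = (img[i + 1][j] - img[i - 1][j]) / 2.0
            sxx += gx * gx
            syy += gy * gy
            sxy += gx * gy
    # Closed-form eigenvalues of [[sxx, sxy], [sxy, syy]]; keep the smaller one.
    mean = (sxx + syy) / 2.0
    diff = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy ** 2)
    return mean - diff


def good_features(img, max_corners, half=1):
    """Return up to max_corners (score, row, col) tuples, best first."""
    h, w = len(img), len(img[0])
    m = half + 1  # margin so gradients never index outside the image
    scores = [(min_eigen_score(img, r, c, half), r, c)
              for r in range(m, h - m) for c in range(m, w - m)]
    scores.sort(reverse=True)
    return scores[:max_corners]
```

For a 10×10 image that is 0 everywhere except a bright square starting at row 5, column 5, the highest-scoring pixel is the square's corner at (5, 5). Pixels on the straight edges of the square score 0, because their gradients point in only one direction.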

The vision.PointTracker (Computer Vision Toolbox) System object tracks the identified feature points by using the Kanade-Lucas-Tomasi (KLT) feature-tracking algorithm. For each point in the previous frame, the point tracker attempts to find the corresponding point in the current frame. The estimateGeometricTransform2D (Computer Vision Toolbox) function then estimates the translation, rotation, and scale between the old points and the new points. This transformation is applied to the bounding box around the face.
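
The transformation step can be illustrated with a least-squares fit of a similarity transform (uniform scale, rotation, and translation) to matched point pairs, followed by mapping the bounding-box corners through it. This is a minimal sketch under stated assumptions: it uses a closed-form least-squares solution with no outlier rejection, whereas estimateGeometricTransform2D uses a robust MSAC-based fit that can discard bad matches. Points are (x, y) tuples, boxes use an [x y w h] layout, and all function names are hypothetical.

```python
import math


def estimate_similarity(old_pts, new_pts):
    """Least-squares similarity transform mapping old_pts onto new_pts.
    Returns (a, b, tx, ty) with x' = a*x - b*y + tx and y' = b*x + a*y + ty,
    where a = s*cos(theta) and b = s*sin(theta)."""
    n = len(old_pts)
    if n < 2 or n != len(new_pts):
        raise ValueError("need at least two matched point pairs")
    px = sum(p[0] for p in old_pts) / n
    py = sum(p[1] for p in old_pts) / n
    qx = sum(q[0] for q in new_pts) / n
    qy = sum(q[1] for q in new_pts) / n
    num_a = num_b = den = 0.0
    for (x, y), (u, v) in zip(old_pts, new_pts):
        ux, uy = x - px, y - py  # old point relative to its centroid
        vx, vy = u - qx, v - qy  # new point relative to its centroid
        num_a += ux * vx + uy * vy
        num_b += ux * vy - uy * vx
        den += ux * ux + uy * uy
    if den == 0:
        raise ValueError("old points are all identical")
    a, b = num_a / den, num_b / den
    # Translation maps the old centroid onto the new centroid.
    tx = qx - (a * px - b * py)
    ty = qy - (b * px + a * py)
    return a, b, tx, ty


def scale_and_angle(tform):
    """Recover the scale factor and rotation angle (degrees)."""
    a, b, _, _ = tform
    return math.hypot(a, b), math.degrees(math.atan2(b, a))


def apply_similarity(tform, pts):
    a, b, tx, ty = tform
    return [(a * x - b * y + tx, b * x + a * y + ty) for x, y in pts]


def box_corners(x, y, w, h):
    """Corners of an [x y w h] box, starting at the top-left corner."""
    return [(x, y), (x + w, y), (x + w, y + h), (x, y + h)]
```

Because a similarity transform preserves angles, the four mapped corners still form a rectangle, but possibly a rotated one. That is why the box has to be carried as four corner points rather than as an axis-aligned [x y w h] rectangle once tracking starts.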

Although you could run the cascade object detector on every frame, doing so is computationally expensive. The detector can also fail to find the face in some frames, for example when the subject turns or tilts their head. This limitation stems from the trained classification model used for detection: a model trained on frontal faces does not reliably detect turned or tilted ones. In this example, you detect the face once, and the KLT algorithm then tracks it across subsequent video frames. Detection runs again only when the face is no longer visible or when the tracker cannot find enough feature points.
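
The detect-once, track-until-lost policy amounts to a small two-state loop. The sketch below assumes hypothetical detect(frame) and track(frame, points) callbacks standing in for the cascade detector and the KLT point tracker, and a hypothetical min_points threshold of 10 below which the tracker is considered lost. It records which path each frame took so the switching behavior is easy to inspect.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Point = Tuple[float, float]


def run_detect_and_track(
    frames: Iterable[object],
    detect: Callable[[object], Optional[List[Point]]],
    track: Callable[[object, List[Point]], List[Point]],
    min_points: int = 10,  # hypothetical "enough feature points" threshold
) -> List[str]:
    """Apply the detect-once / track-until-lost policy and return, per frame,
    which path was taken: "detect", "track", or "lost"."""
    points: List[Point] = []
    log: List[str] = []
    for frame in frames:
        if len(points) < min_points:
            # Detection mode: run the expensive detector only when needed.
            found = detect(frame)
            points = found if found and len(found) >= min_points else []
            log.append("detect" if points else "lost")
        else:
            # Tracking mode: cheap frame-to-frame update of existing points.
            points = track(frame, points)
            log.append("track")
    return log
```

With a detector that always returns 12 points and a tracker that loses one point per frame, the loop detects once, tracks for three frames until only 9 points remain, and then falls back to detection.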

Because dynamic memory allocation is enabled for MATLAB functions, you can use these System objects and functions inside a MATLAB Function block.

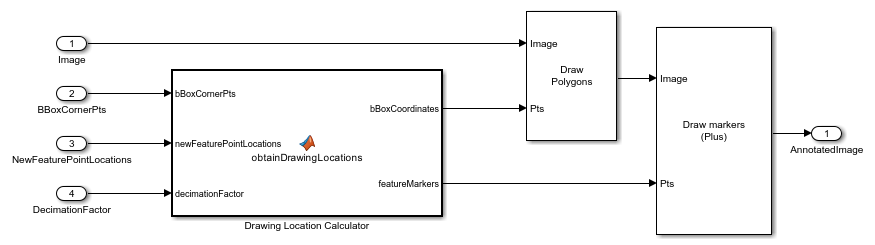

Draw Annotations

The Draw Shapes (Computer Vision Toolbox) block draws the bounding box from its corner points, and the Draw Markers (Computer Vision Toolbox) block draws the feature points.
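
The data passed to the two drawing blocks can be prepared roughly as sketched below. This assumes that the shape-drawing step accepts each polygon as one flattened row [x1 y1 x2 y2 ...] and that markers are drawn at integer pixel positions in 1-based coordinates inside a width-by-height frame. The helper names are hypothetical, and the exact input formats should be checked against the block documentation.

```python
def corners_to_polygon_row(corners):
    """Flatten [(x1, y1), ..., (x4, y4)] into [x1, y1, ..., x4, y4]."""
    return [coord for pt in corners for coord in pt]


def marker_pixels(points, width, height):
    """Round feature points to the nearest pixel and drop any that fall
    outside a width-by-height frame (1-based coordinates assumed).
    Note: Python's round() rounds exact .5 values to the nearest even integer."""
    out = []
    for x, y in points:
        px, py = round(x), round(y)
        if 1 <= px <= width and 1 <= py <= height:
            out.append((px, py))
    return out
```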

Step 1: Configure Face Detection Model

1. In the androidFaceDetectionAndTracking Simulink model, on the Modeling tab of the toolstrip, select Model Settings.

2. In the Configuration Parameters dialog box, select Hardware Implementation. Verify that the Hardware board parameter is set to Android Device.

3. From the Groups list under Target hardware resources, select Device options.

4. From the Device list, select your Android device. If your device is not listed, click Refresh.

Note: If your device is not listed even after clicking Refresh, ensure that you have enabled the USB debugging option on your device. To enable USB debugging, enter androidhwsetup in the MATLAB® Command Window and follow the onscreen instructions.

Step 2: Deploy the Face Detection Model on Android Device

1. On the Hardware tab of the Simulink model, in the Mode section, select Run on board and then click Build, Deploy & Start. This action builds, downloads, and runs the model on the Android device. The model continues to run even if the device is disconnected from the computer.

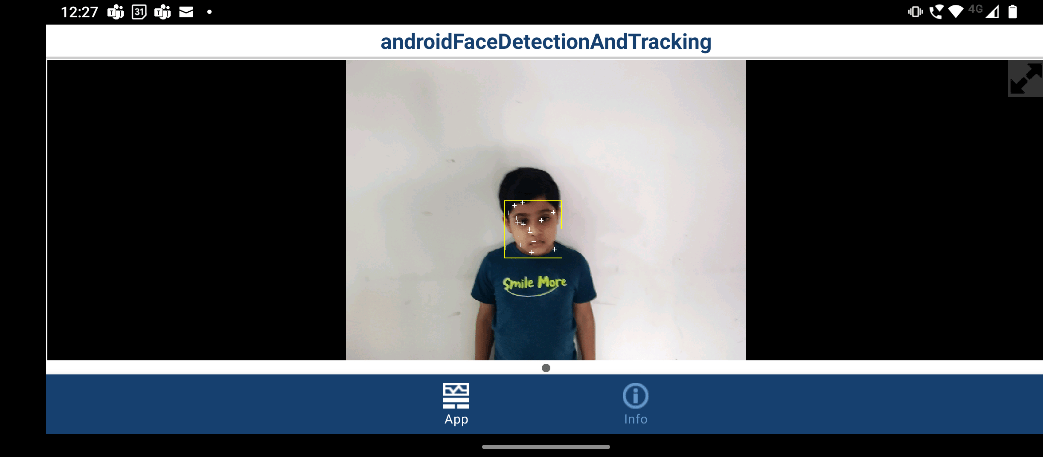

2. Point the back camera of the device at a face. The application detects the face and marks its feature points.

When the person tilts their head or moves toward or away from the camera, the application continues to track the face.


References

Viola, Paul A., and Michael J. Jones. "Rapid Object Detection using a Boosted Cascade of Simple Features." IEEE Conference on Computer Vision and Pattern Recognition, 2001.

Lucas, Bruce D., and Takeo Kanade. "An Iterative Image Registration Technique with an Application to Stereo Vision." International Joint Conference on Artificial Intelligence, 1981.

Tomasi, Carlo, and Takeo Kanade. "Detection and Tracking of Point Features." Carnegie Mellon University Technical Report CMU-CS-91-132, 1991.

Shi, Jianbo, and Carlo Tomasi. "Good Features to Track." IEEE Conference on Computer Vision and Pattern Recognition, 1994.

Kalal, Zdenek, Krystian Mikolajczyk, and Jiri Matas. "Forward-Backward Error: Automatic Detection of Tracking Failures." International Conference on Pattern Recognition, 2010.

See Also

Recognize Handwritten Digits Using MNIST Data Set on Android Device