Restricted Boltzmann Machine

Restricted Boltzmann machines (RBMs) are an early family of neural networks for unsupervised learning, popularized by Geoffrey Hinton (University of Toronto).

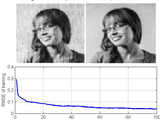

RBMs aim to find patterns in data by reconstructing the inputs using only two layers: a visible layer and a hidden layer. In the forward pass, an RBM translates the visible layer into a set of numbers that encodes the inputs. In the backward pass, it takes that set of numbers and translates it back to the visible layer to regenerate the inputs.
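The two passes described above can be sketched in a few lines. This is a minimal illustration in plain Python, not the MATLAB code of this submission. The names `forward`, `backward`, `W`, `b_visible`, and `b_hidden` are hypothetical, and the sketch assumes sigmoid units and a single weight matrix shared by both directions.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def forward(v, W, b_hidden):
    """Visible -> hidden: probability that each hidden unit is on."""
    return [sigmoid(b_hidden[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b_hidden))]


def backward(h, W, b_visible):
    """Hidden -> visible: reconstruction probabilities from the same weights."""
    return [sigmoid(b_visible[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(b_visible))]


rng = random.Random(0)
n_visible, n_hidden = 6, 3

# Small random weights and zero biases (an assumed, untrained starting point).
W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
b_visible = [0.0] * n_visible
b_hidden = [0.0] * n_hidden

v = [1, 0, 1, 1, 0, 0]                # one binary input vector
h = forward(v, W, b_hidden)           # hidden code for the input
v_recon = backward(h, W, b_visible)   # regenerated input (probabilities)

print("hidden code:   ", [round(p, 3) for p in h])
print("reconstruction:", [round(p, 3) for p in v_recon])
```

With untrained weights the reconstruction stays close to 0.5 for every unit. Training is what makes the regenerated input resemble the original.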

This submission provides a very simple implementation based on contrastive divergence training. The method is explained step by step in the comments inside the code.
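For readers who want a feel for contrastive divergence before opening the code, the following is a minimal sketch of one-step contrastive divergence (CD-1) in plain Python. It is not the submission's MATLAB implementation. The function names, the toy data, the learning rate, and the number of epochs are all assumptions chosen for illustration. It uses binary hidden states driven by the data and reconstruction probabilities for the negative phase, a common choice discussed in [1].

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def hidden_probs(v, W, c):
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(c))]


def visible_probs(h, W, b):
    return [sigmoid(b[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(b))]


def cd1_epoch(data, W, b, c, lr, rng):
    """One CD-1 pass over all samples. Updates W, b, c in place and
    returns the mean squared reconstruction error measured during the pass."""
    n_v, n_h, n = len(b), len(c), len(data)
    dW = [[0.0] * n_h for _ in range(n_v)]
    db = [0.0] * n_v
    dc = [0.0] * n_h
    err = 0.0
    for v0 in data:
        ph0 = hidden_probs(v0, W, c)                      # positive phase
        h0 = [1 if rng.random() < p else 0 for p in ph0]  # binary hidden states
        pv1 = visible_probs(h0, W, b)                     # reconstruction
        ph1 = hidden_probs(pv1, W, c)                     # negative phase
        for i in range(n_v):
            db[i] += v0[i] - pv1[i]
            for j in range(n_h):
                dW[i][j] += v0[i] * ph0[j] - pv1[i] * ph1[j]
        for j in range(n_h):
            dc[j] += ph0[j] - ph1[j]
        err += sum((x - r) ** 2 for x, r in zip(v0, pv1)) / n_v
    # Apply the update averaged over all samples.
    for i in range(n_v):
        b[i] += lr * db[i] / n
        for j in range(n_h):
            W[i][j] += lr * dW[i][j] / n
    for j in range(n_h):
        c[j] += lr * dc[j] / n
    return err / n


rng = random.Random(0)
# Toy binary data (assumed for illustration only).
data = [
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 0, 1, 0, 0, 0],
]
n_visible, n_hidden = 6, 2
W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)] for _ in range(n_visible)]
b = [0.0] * n_visible   # visible biases
c = [0.0] * n_hidden    # hidden biases

errors = [cd1_epoch(data, W, b, c, lr=0.1, rng=rng) for _ in range(200)]
print(f"first epoch error: {errors[0]:.3f}, last epoch error: {errors[-1]:.3f}")
```

The update is accumulated over every sample before the weights change, so each epoch uses the whole data set rather than a single sample. Reconstruction error is only a rough progress indicator for contrastive divergence, not the quantity being optimized.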

To learn about RBMs, you can start from this reference:

[1] G. Hinton, “A Practical Guide to Training Restricted Boltzmann Machines,” 2010.

How to use the code:

https://www.youtube.com/watch?v=uaVfyeE3Jwk&feature=youtu.be

Cite as

BERGHOUT Tarek (2026). Restricted Boltzmann Machine (https://es.mathworks.com/matlabcentral/fileexchange/71212-restricted-boltzmann-machine), MATLAB Central File Exchange.

Platform compatibility: Windows, macOS, Linux

RBM_new

RBM_new/RBM

| Version | Release notes |
|---|---|
| 3.1.0 | Description |
| 3.0.0 | Description |
| 2.0.0 | New version |
| 1.5.0 | References |
| 1.4.0 | Fixed a mistake in the previous version: each Gibbs sampling step used only one sample of the image instead of giving all the samples to the visible neurons. |
| 1.3.0 | New descriptive image |
| 1.2.0 | The previous code mistakenly trained the RBM with scalar units in the visible and hidden layers. These units are now represented as binary units during training, which gave a marked improvement in accuracy. |
| 1.0.0 | |