CIE XYZ NET

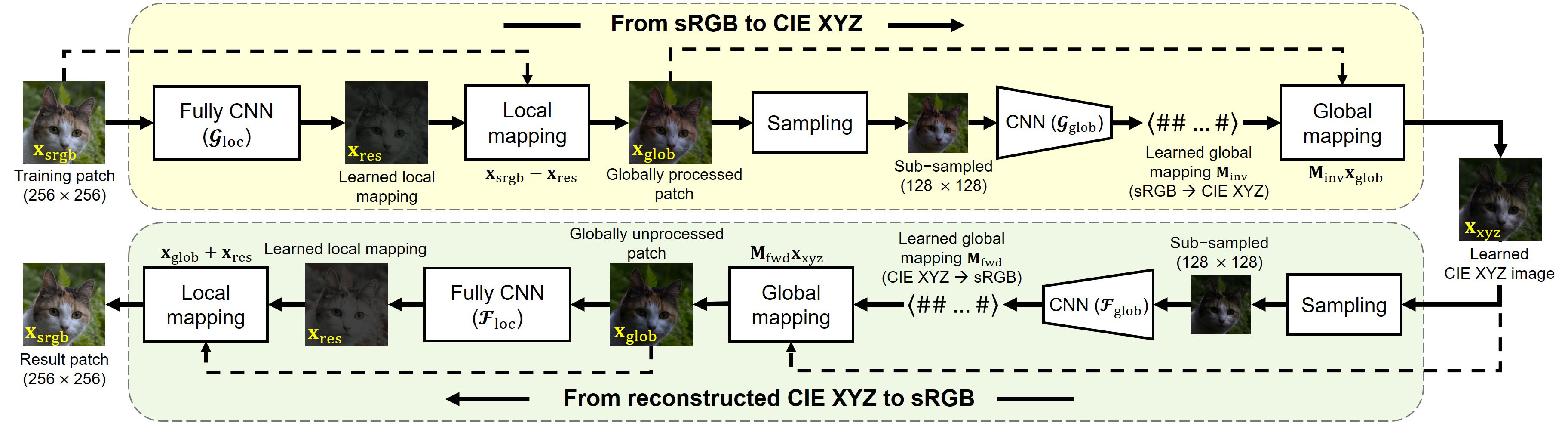

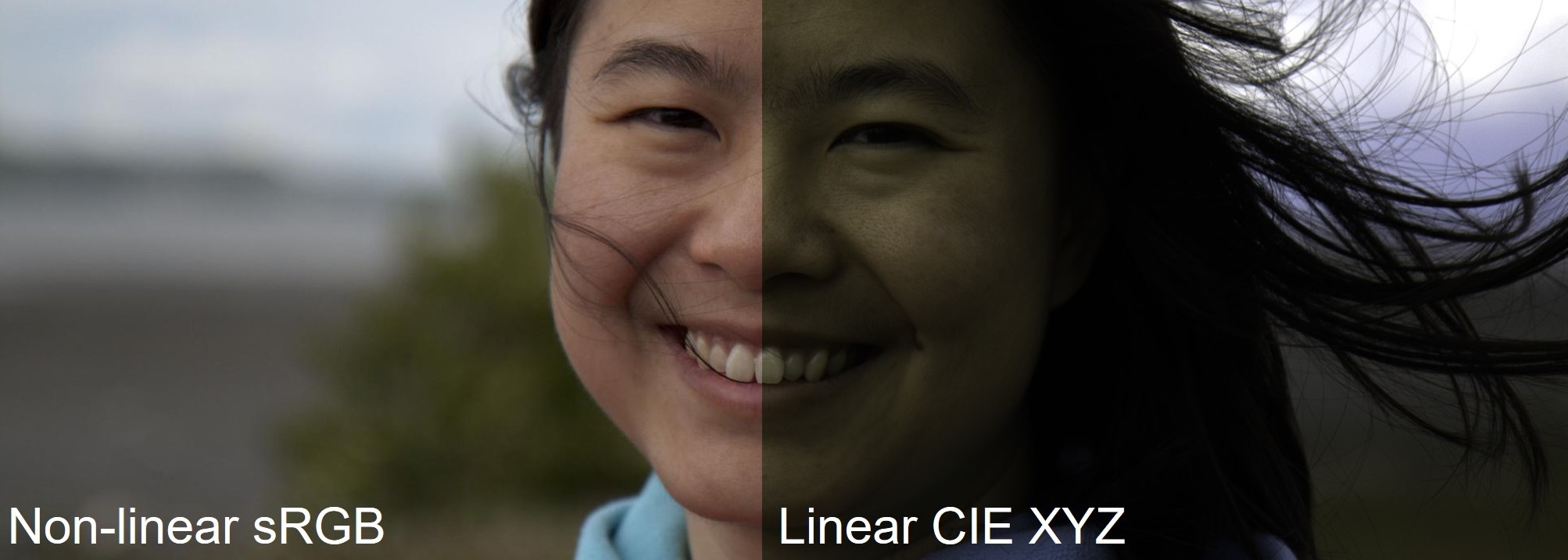

CIE XYZ Net: Unprocessing Images for Low-Level Computer Vision Tasks

Mahmoud Afifi, Abdelrahman Abdelhamed, Abdullah Abuolaim, Abhijith Punnappurath, and Michael S. Brown

York University

Reference code for the paper CIE XYZ Net: Unprocessing Images for Low-Level Computer Vision Tasks. Mahmoud Afifi, Abdelrahman Abdelhamed, Abdullah Abuolaim, Abhijith Punnappurath, and Michael S. Brown, IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2021. If you use this code or our dataset, please cite our paper:

@article{CIEXYZNet,
  title={CIE XYZ Net: Unprocessing Images for Low-Level Computer Vision Tasks},
  author={Afifi, Mahmoud and Abdelhamed, Abdelrahman and Abuolaim, Abdullah and Punnappurath, Abhijith and Brown, Michael S},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)},
  pages={},
  year={2021}
}

Code (MIT License)

PyTorch

Prerequisites

- Python 3.6

- opencv-python

- pytorch (tested with 1.5.0)

- torchvision (tested with 0.6.0)

- cudatoolkit

- tensorboard (optional)

- numpy

- future

- tqdm

- matplotlib

The code may also work with library versions other than those specified.

Get Started

Demos:

- Run `demo_single_image.py` or `demo_images.py` to convert from sRGB to XYZ and back. You can change the task to run only the inverse or only the forward network.

- Run `demo_single_image_with_operators.py` or `demo_images_with_operators.py` to apply one or more operators to the intermediate layers/images. The operator code should be located in the `pp_code` directory; replace the code in `pp_code/postprocessing.py` with your own operator code.
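As an illustration of the kind of operator that could be plugged into the intermediate XYZ stage, here is a minimal sketch of a global exposure adjustment. The function name `apply_operator`, its signature, and the assumption that the image arrives as an (H, W, 3) NumPy float array in [0, 1] are all hypothetical; check `pp_code/postprocessing.py` for the interface the demos actually expect.

```python
import numpy as np


def apply_operator(xyz: np.ndarray, gain: float = 1.5) -> np.ndarray:
    """Hypothetical exposure-style operator on a linear CIE XYZ image.

    Assumes `xyz` is a float array of shape (H, W, 3) with values in [0, 1].
    Because CIE XYZ is linear in scene light, multiplying by a constant gain
    mimics a change in exposure; the result is clipped back to [0, 1].
    """
    if xyz.ndim != 3 or xyz.shape[-1] != 3:
        raise ValueError("expected an (H, W, 3) array")
    return np.clip(xyz * gain, 0.0, 1.0)


# Usage: brighten a uniform mid-gray XYZ image.
xyz = np.full((2, 2, 3), 0.5)
brighter = apply_operator(xyz, gain=1.5)
```

Operating on the linear XYZ image rather than on the nonlinear sRGB image is what makes a simple multiplicative gain behave like a physical exposure change.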

Training Code:

Run train.py to re-train our network. You will need to adjust the training/validation directories accordingly.
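Before pointing the training script at your data, it can help to confirm that every sRGB image has a matching XYZ image. The sketch below assumes a hypothetical layout in which sRGB and XYZ images sit in separate directories and share a file stem (for example `a0001.jpg` and `a0001.png`); the helper `pair_by_stem` is illustrative and is not part of this repository.

```python
from pathlib import Path
from typing import Iterable, List, Tuple, Union

PathLike = Union[str, Path]


def pair_by_stem(srgb_files: Iterable[PathLike],
                 xyz_files: Iterable[PathLike]) -> List[Tuple[Path, Path]]:
    """Match sRGB and XYZ files by file stem (hypothetical naming scheme).

    Returns (sRGB path, XYZ path) pairs sorted by stem, and raises
    ValueError if any stem appears in only one of the two lists.
    Note: if a directory holds two files with the same stem, the last
    one seen silently wins.
    """
    srgb = {Path(f).stem: Path(f) for f in srgb_files}
    xyz = {Path(f).stem: Path(f) for f in xyz_files}
    unpaired = sorted(set(srgb) ^ set(xyz))  # stems present on one side only
    if unpaired:
        raise ValueError(f"unpaired images: {unpaired[:5]}")
    return [(srgb[s], xyz[s]) for s in sorted(srgb)]


# Usage with real directories (hypothetical paths):
# pairs = pair_by_stem(Path("train/sRGB").glob("*"), Path("train/XYZ").glob("*"))
```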

Note:

All experiments in the paper were reported using the Matlab version of CIE XYZ Net. The PyTorch code/model is provided to facilitate using our framework with PyTorch, but there is no guarantee that the PyTorch version reproduces exactly the reconstruction/rendering results reported in the paper.
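If you want to quantify how far the PyTorch outputs differ from the Matlab outputs on your own images, one option is to compute the peak signal-to-noise ratio (PSNR) between the two reconstructions. This is a minimal sketch assuming both results are already loaded as float arrays of equal shape scaled to [0, 1] (loading code omitted); it is a generic metric, not necessarily the evaluation protocol used in the paper.

```python
import numpy as np


def psnr(a: np.ndarray, b: np.ndarray, peak: float = 1.0) -> float:
    """PSNR in dB between two images of equal shape with values in [0, peak]."""
    if a.shape != b.shape:
        raise ValueError("shape mismatch")
    # Compute the mean squared error in float64 to avoid overflow with integer inputs.
    mse = np.mean((a.astype(np.float64) - b.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))
```

Higher values mean the two outputs agree more closely; identical outputs give infinity.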

Matlab

Prerequisites

- Matlab 2019b or higher

- Deep Learning Toolbox

Get Started

Run install_.m.

Demos:

- Run `demo_single_image.m` or `demo_images.m` to convert from sRGB to XYZ and back. You can change the task to run only the inverse or only the forward network.

- Run `demo_single_image_with_operators.m` or `demo_images_with_operators.m` to apply one or more operators to the intermediate layers/images. The operator code should be located in the `pp_code` directory; replace the code in `pp_code/postprocessing.m` with your own operator code.

Training Code:

Run training.m to re-train our network. You will need to adjust the training/validation directories accordingly.

sRGB2XYZ Dataset

Our sRGB2XYZ dataset contains 1,265 pairs of camera-rendered sRGB images and the corresponding scene-referred CIE XYZ images (971 training, 50 validation, and 244 testing images).
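For context, the standard analytic sRGB to XYZ conversion only inverts the sRGB encoding itself (the transfer curve and the 3×3 primaries matrix). It does not undo the camera's nonlinear photo-finishing, which is one motivation for learning the mapping from pairs such as these. Below is a minimal NumPy sketch of that analytic baseline, assuming the IEC 61966-2-1 sRGB definition with a D65 white point; it is not the CIE XYZ Net model.

```python
import numpy as np

# Linear sRGB -> CIE XYZ matrix (IEC 61966-2-1, D65 white), rows give X, Y, Z.
M_RGB2XYZ = np.array([[0.4124, 0.3576, 0.1805],
                      [0.2126, 0.7152, 0.0722],
                      [0.0193, 0.1192, 0.9505]])
M_XYZ2RGB = np.linalg.inv(M_RGB2XYZ)


def srgb_to_xyz(srgb):
    """Gamma-encoded sRGB in [0, 1], shape (..., 3) -> CIE XYZ."""
    c = np.asarray(srgb, dtype=np.float64)
    # Undo the piecewise sRGB transfer curve to get linear RGB.
    lin = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    return lin @ M_RGB2XYZ.T


def xyz_to_srgb(xyz):
    """CIE XYZ, shape (..., 3) -> gamma-encoded sRGB in [0, 1]."""
    # Clip to the sRGB gamut so the power function never sees negative values.
    lin = np.clip(np.asarray(xyz, dtype=np.float64) @ M_XYZ2RGB.T, 0.0, 1.0)
    return np.where(lin <= 0.0031308, lin * 12.92,
                    1.055 * lin ** (1 / 2.4) - 0.055)
```

Because this conversion is fixed and camera-independent, applying it to a camera-rendered sRGB image yields display-referred XYZ, not the scene-referred XYZ stored in the dataset.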

Training set (11.1 GB): Part 0 | Part 1 | Part 2 | Part 3 | Part 4 | Part 5

Validation set (570 MB): Part 0

Testing set (2.83 GB): Part 0 | Part 1

Dataset License:

As the dataset was originally rendered using raw images taken from the MIT-Adobe FiveK dataset, our sRGB2XYZ dataset follows the original license of the MIT-Adobe FiveK dataset.

Cite As

Mahmoud Afifi, Abdelrahman Abdelhamed, Abdullah Abuolaim, Abhijith Punnappurath, and Michael S. Brown. CIE XYZ Net: Unprocessing Images for Low-Level Computer Vision Tasks. arXiv preprint arXiv:2006.12709, 2020.

Platform compatibility: Windows, macOS, Linux




| Version | Published | Release notes |
|---|---|---|
| 1.0.1 | | |
| 1.0.0 | | |