Image Category Classification by Using Deep Learning

This example shows you how to create, compile, and deploy a dlhdl.Workflow object with ResNet-18 as the network object by using the Deep Learning HDL Toolbox™ Support Package for Xilinx® FPGA and SoC. Use MATLAB® to retrieve the prediction results from the target device. ResNet-18 is a pretrained convolutional neural network that has been trained on over a million images and can classify images into 1000 object categories (such as keyboard, coffee mug, pencil, and many animals). You can also use VGG-19 and DarkNet-19 as the network objects.

Prerequisites

Xilinx ZCU102 SoC Development Kit

Load the Pretrained Network

To load the pretrained Directed Acyclic Graph (DAG) network resnet18, enter:

[net,classNames] = imagePretrainedNetwork('resnet18');

To load the pretrained series network vgg19, enter:

% [net,classNames] = imagePretrainedNetwork('vgg19');

To load the pretrained series network darknet19, enter:

% [net,classNames] = imagePretrainedNetwork('darknet19');

The pretrained ResNet-18 network contains 71 layers, including the input, convolution, batch normalization, ReLU, max pooling, addition, global average pooling, fully connected, and softmax layers. To view the layers of the pretrained ResNet-18 network, enter:

analyzeNetwork(net)
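When you compile the network later in this example, the compiler reports that it fuses each batch normalization layer into the preceding convolution. The following base-MATLAB sketch illustrates why that fusion is possible; it is not the toolbox's implementation. It models a 1-by-1 convolution as the matrix product z = W*x + b and uses random placeholder values. Batch normalization computes y = gamma.*(z - mu)./sqrt(sigma2 + eps) + beta, so scaling each filter by s = gamma./sqrt(sigma2 + eps) and replacing the bias with s.*(b - mu) + beta produces the same output from a single convolution.

```matlab
% Illustrative sketch (not the toolbox implementation): fold a batch
% normalization layer into the convolution that precedes it. A 1-by-1
% convolution is modeled as W*x + b; all values are random placeholders.
rng(0);                                % reproducible placeholder values
numIn  = 8;                            % input channels
numOut = 4;                            % output channels (filters)
W = randn(numOut, numIn);              % one row of weights per filter
b = randn(numOut, 1);                  % convolution bias
x = randn(numIn, 1);                   % one pixel across input channels

% Batch normalization parameters, one value per output channel
mu      = randn(numOut, 1);            % running mean
sigma2  = rand(numOut, 1) + 0.5;       % running variance (kept positive)
gammaBN = randn(numOut, 1);            % learned scale
betaBN  = randn(numOut, 1);            % learned offset
epsBN   = 1e-5;                        % numerical-stability constant

% Reference: convolution followed by batch normalization
z    = W*x + b;
yRef = gammaBN .* (z - mu) ./ sqrt(sigma2 + epsBN) + betaBN;

% Fused: one convolution with rescaled weights and an adjusted bias
s     = gammaBN ./ sqrt(sigma2 + epsBN);   % per-filter scale factor
Wfold = s .* W;                            % scale each row of W
bFold = s .* (b - mu) + betaBN;
yFold = Wfold*x + bFold;

maxAbsDiff = max(abs(yFold - yRef));       % round-off level only
fprintf('Largest difference between fused and unfused outputs: %g\n', maxAbsDiff);
```

Because the fused layer produces the same result with one fewer operation per pixel, the processor does not need a separate batch normalization stage at inference time.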

Create Target Object

Use the dlhdl.Target class to create a target object with a custom name for your target device and an interface to connect your target device to the host computer. Interface options are JTAG and Ethernet. To use JTAG, install Xilinx Vivado® Design Suite 2022.1. To set the Xilinx Vivado toolpath, enter:

hdlsetuptoolpath('ToolName', 'Xilinx Vivado', 'ToolPath', 'C:\Xilinx\Vivado\2022.1\bin\vivado.bat');

hTarget = dlhdl.Target('Xilinx', 'Interface', 'Ethernet');

Create Workflow Object

Use the dlhdl.Workflow class to create an object. When you create the object, specify the network, the bitstream name, and the target object that you created. Specify the saved pretrained ResNet-18 neural network as the network. Make sure that the bitstream name matches the data type and the FPGA board that you are targeting. In this example, the target FPGA board is the Xilinx ZCU102 SoC board, and the zcu102_single bitstream uses the single-precision floating-point (single) data type.

hW = dlhdl.Workflow('Network', net, 'Bitstream', 'zcu102_single', 'Target', hTarget);

Compile the ResNet-18 DAG Network

To compile the ResNet-18 DAG network, run the compile method of the dlhdl.Workflow object. You can optionally specify the maximum number of input frames by using the 'InputFrameNumberLimit' argument. You can also set 'HardwareNormalization' to 'off' so that input image normalization runs in software instead of on the FPGA.
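In the compile command that follows, 'HardwareNormalization' is 'off', so the 'zscore' normalization performed by the image input layer runs in software on the host. Z-score normalization subtracts a per-channel mean from each pixel and divides by a per-channel standard deviation. The sketch below uses a random image and placeholder statistics; the real values for ResNet-18 are typically stored in the input layer's Mean and StandardDeviation properties, and the numbers here are not those values.

```matlab
% Illustrative z-score normalization of an H-by-W-by-C image.
% The per-channel statistics are placeholders, not ResNet-18's values.
img      = 255 * rand(224, 224, 3);            % stand-in for an input image
chanMean = reshape([120 115 100], 1, 1, 3);    % hypothetical per-channel mean
chanStd  = reshape([60 58 57], 1, 1, 3);       % hypothetical per-channel std

% Implicit expansion applies each channel's statistics to every pixel
imgNorm = (single(img) - chanMean) ./ chanStd;
```

Reshaping the statistics to 1-by-1-by-3 lets implicit expansion broadcast them over all 224-by-224 pixels without an explicit loop.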

dn = compile(hW, 'InputFrameNumberLimit', 15, 'HardwareNormalization', 'off')

### Compiling network for Deep Learning FPGA prototyping ...

### Targeting FPGA bitstream zcu102_single.

### An output layer called 'Output1_prob' of type 'nnet.cnn.layer.RegressionOutputLayer' has been added to the provided network. This layer performs no operation during prediction and thus does not affect the output of the network.

### Optimizing network: Fused 'nnet.cnn.layer.BatchNormalizationLayer' into 'nnet.cnn.layer.Convolution2DLayer'

### The network includes the following layers:

1 'data' Image Input 224×224×3 images with 'zscore' normalization (SW Layer)

2 'conv1' 2-D Convolution 64 7×7×3 convolutions with stride [2 2] and padding [3 3 3 3] (HW Layer)

3 'conv1_relu' ReLU ReLU (HW Layer)

4 'pool1' 2-D Max Pooling 3×3 max pooling with stride [2 2] and padding [1 1 1 1] (HW Layer)

5 'res2a_branch2a' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

6 'res2a_branch2a_relu' ReLU ReLU (HW Layer)

7 'res2a_branch2b' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

8 'res2a' Addition Element-wise addition of 2 inputs (HW Layer)

9 'res2a_relu' ReLU ReLU (HW Layer)

10 'res2b_branch2a' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

11 'res2b_branch2a_relu' ReLU ReLU (HW Layer)

12 'res2b_branch2b' 2-D Convolution 64 3×3×64 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

13 'res2b' Addition Element-wise addition of 2 inputs (HW Layer)

14 'res2b_relu' ReLU ReLU (HW Layer)

15 'res3a_branch2a' 2-D Convolution 128 3×3×64 convolutions with stride [2 2] and padding [1 1 1 1] (HW Layer)

16 'res3a_branch2a_relu' ReLU ReLU (HW Layer)

17 'res3a_branch2b' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

18 'res3a_branch1' 2-D Convolution 128 1×1×64 convolutions with stride [2 2] and padding [0 0 0 0] (HW Layer)

19 'res3a' Addition Element-wise addition of 2 inputs (HW Layer)

20 'res3a_relu' ReLU ReLU (HW Layer)

21 'res3b_branch2a' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

22 'res3b_branch2a_relu' ReLU ReLU (HW Layer)

23 'res3b_branch2b' 2-D Convolution 128 3×3×128 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

24 'res3b' Addition Element-wise addition of 2 inputs (HW Layer)

25 'res3b_relu' ReLU ReLU (HW Layer)

26 'res4a_branch2a' 2-D Convolution 256 3×3×128 convolutions with stride [2 2] and padding [1 1 1 1] (HW Layer)

27 'res4a_branch2a_relu' ReLU ReLU (HW Layer)

28 'res4a_branch2b' 2-D Convolution 256 3×3×256 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

29 'res4a_branch1' 2-D Convolution 256 1×1×128 convolutions with stride [2 2] and padding [0 0 0 0] (HW Layer)

30 'res4a' Addition Element-wise addition of 2 inputs (HW Layer)

31 'res4a_relu' ReLU ReLU (HW Layer)

32 'res4b_branch2a' 2-D Convolution 256 3×3×256 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

33 'res4b_branch2a_relu' ReLU ReLU (HW Layer)

34 'res4b_branch2b' 2-D Convolution 256 3×3×256 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

35 'res4b' Addition Element-wise addition of 2 inputs (HW Layer)

36 'res4b_relu' ReLU ReLU (HW Layer)

37 'res5a_branch2a' 2-D Convolution 512 3×3×256 convolutions with stride [2 2] and padding [1 1 1 1] (HW Layer)

38 'res5a_branch2a_relu' ReLU ReLU (HW Layer)

39 'res5a_branch2b' 2-D Convolution 512 3×3×512 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

40 'res5a_branch1' 2-D Convolution 512 1×1×256 convolutions with stride [2 2] and padding [0 0 0 0] (HW Layer)

41 'res5a' Addition Element-wise addition of 2 inputs (HW Layer)

42 'res5a_relu' ReLU ReLU (HW Layer)

43 'res5b_branch2a' 2-D Convolution 512 3×3×512 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

44 'res5b_branch2a_relu' ReLU ReLU (HW Layer)

45 'res5b_branch2b' 2-D Convolution 512 3×3×512 convolutions with stride [1 1] and padding [1 1 1 1] (HW Layer)

46 'res5b' Addition Element-wise addition of 2 inputs (HW Layer)

47 'res5b_relu' ReLU ReLU (HW Layer)

48 'pool5' 2-D Global Average Pooling 2-D global average pooling (HW Layer)

49 'fc1000' Fully Connected 1000 fully connected layer (HW Layer)

50 'prob' Softmax softmax (SW Layer)

51 'Output1_prob' Regression Output mean-squared-error (SW Layer)

### Notice: The layer 'data' with type 'nnet.cnn.layer.ImageInputLayer' is implemented in software.

### Notice: The layer 'prob' with type 'nnet.cnn.layer.SoftmaxLayer' is implemented in software.

### Notice: The layer 'Output1_prob' with type 'nnet.cnn.layer.RegressionOutputLayer' is implemented in software.

### Compiling layer group: conv1>>pool1 ...

### Compiling layer group: conv1>>pool1 ... complete.

### Compiling layer group: res2a_branch2a>>res2a_branch2b ...

### Compiling layer group: res2a_branch2a>>res2a_branch2b ... complete.

### Compiling layer group: res2b_branch2a>>res2b_branch2b ...

### Compiling layer group: res2b_branch2a>>res2b_branch2b ... complete.

### Compiling layer group: res3a_branch1 ...

### Compiling layer group: res3a_branch1 ... complete.

### Compiling layer group: res3a_branch2a>>res3a_branch2b ...

### Compiling layer group: res3a_branch2a>>res3a_branch2b ... complete.

### Compiling layer group: res3b_branch2a>>res3b_branch2b ...

### Compiling layer group: res3b_branch2a>>res3b_branch2b ... complete.

### Compiling layer group: res4a_branch1 ...

### Compiling layer group: res4a_branch1 ... complete.

### Compiling layer group: res4a_branch2a>>res4a_branch2b ...

### Compiling layer group: res4a_branch2a>>res4a_branch2b ... complete.

### Compiling layer group: res4b_branch2a>>res4b_branch2b ...

### Compiling layer group: res4b_branch2a>>res4b_branch2b ... complete.

### Compiling layer group: res5a_branch1 ...

### Compiling layer group: res5a_branch1 ... complete.

### Compiling layer group: res5a_branch2a>>res5a_branch2b ...

### Compiling layer group: res5a_branch2a>>res5a_branch2b ... complete.

### Compiling layer group: res5b_branch2a>>res5b_branch2b ...

### Compiling layer group: res5b_branch2a>>res5b_branch2b ... complete.

### Compiling layer group: pool5 ...

### Compiling layer group: pool5 ... complete.

### Compiling layer group: fc1000 ...

### Compiling layer group: fc1000 ... complete.

### Allocating external memory buffers:

| offset_name | offset_address | allocated_space |
| --- | --- | --- |
| InputDataOffset | 0x00000000 | 11.5 MB |
| OutputResultOffset | 0x00b7c000 | 60.0 kB |
| SchedulerDataOffset | 0x00b8b000 | 2.4 MB |
| SystemBufferOffset | 0x00df1000 | 6.1 MB |
| InstructionDataOffset | 0x01416000 | 2.4 MB |
| ConvWeightDataOffset | 0x0167a000 | 49.5 MB |
| FCWeightDataOffset | 0x047f2000 | 2.0 MB |
| EndOffset | 0x049e7000 | Total: 73.9 MB |

### Network compilation complete.

dn = struct with fields:

weights: [1×1 struct]

instructions: [1×1 struct]

registers: [1×1 struct]

syncInstructions: [1×1 struct]

constantData: {}

ddrInfo: [1×1 struct]

resourceTable: [6×2 table]
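The allocated_space column in the memory table follows from the offsets: each region extends from its own offset to the next one, and EndOffset marks the end of the last region. This sketch recomputes the sizes with hex2dec and diff, using only the offsets printed above. The results match the table when MB is read as 2^20 bytes and kB as 2^10 bytes.

```matlab
% Recompute the size of each external-memory region from the offsets
% printed by compile. Each region ends where the next one begins.
names = {'InputDataOffset', 'OutputResultOffset', 'SchedulerDataOffset', ...
         'SystemBufferOffset', 'InstructionDataOffset', ...
         'ConvWeightDataOffset', 'FCWeightDataOffset'};
offsets = hex2dec({'00000000'; '00b7c000'; '00b8b000'; '00df1000'; ...
                   '01416000'; '0167a000'; '047f2000'; '049e7000'});

regionBytes = diff(offsets);          % bytes per region (7-by-1)
regionMB    = regionBytes / 2^20;     % binary megabytes, as in the log
totalMB     = offsets(end) / 2^20;    % EndOffset as a total size

for k = 1:numel(names)
    fprintf('%-22s %10d bytes %8.2f MB\n', names{k}, regionBytes(k), regionMB(k));
end
fprintf('Total: %.1f MB\n', totalMB);

% Input buffer available per frame with 'InputFrameNumberLimit' set to 15
bytesPerFrame = regionBytes(1) / 15;
```

With these offsets, the input region is 12,042,240 bytes, or 802,816 bytes for each of the 15 frames allowed by the compile option.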

Program Bitstream onto FPGA and Download Network Weights

To deploy the network on the Xilinx ZCU102 hardware, run the deploy method of the dlhdl.Workflow object. This method uses the output of the compile method to program the FPGA board by using the programming file. It also downloads the network weights and biases. While it programs the FPGA device, the deploy method displays progress messages with timestamps that show how long deployment takes.

deploy(hW)

### Programming FPGA Bitstream using Ethernet...

### Attempting to connect to the hardware board at 172.21.59.90...

### Connection successful

### Programming FPGA device on Xilinx SoC hardware board at 172.21.59.90...

### Attempting to connect to the hardware board at 172.21.59.90...

### Connection successful

### Copying FPGA programming files to SD card...

### Setting FPGA bitstream and devicetree for boot...

# Copying Bitstream zcu102_single.bit to /mnt/hdlcoder_rd

# Set Bitstream to hdlcoder_rd/zcu102_single.bit

# Copying Devicetree devicetree_dlhdl.dtb to /mnt/hdlcoder_rd

# Set Devicetree to hdlcoder_rd/devicetree_dlhdl.dtb

# Set up boot for Reference Design: 'AXI-Stream DDR Memory Access : 3-AXIM'

### Programming done. The system will now reboot for persistent changes to take effect.

### Rebooting Xilinx SoC at 172.21.59.90...

### Reboot may take several seconds...

### Attempting to connect to the hardware board at 172.21.59.90...

### Connection successful

### Programming the FPGA bitstream has been completed successfully.

### Loading weights to Conv Processor.

### Conv Weights loaded. Current time is 15-Jul-2024 08:32:39

### Loading weights to FC Processor.

### 50% finished, current time is 15-Jul-2024 08:32:39.

### FC Weights loaded. Current time is 15-Jul-2024 08:32:40

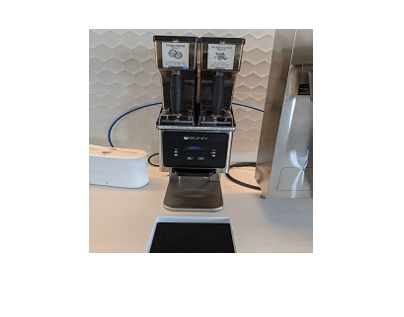

Load Image for Prediction

Load the example image.

imgFile = 'book.jpg';

inputImg = imresize(imread(imgFile), [224,224]);

imshow(inputImg)
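The compile output shows that the network expects 224-by-224-by-3 input. If you substitute your own image, imread can return a grayscale (H-by-W) array or an array with more than three channels. The following guard is a minimal sketch that you could apply before the call to imresize. Here, a synthetic grayscale array stands in for the output of imread.

```matlab
% Stand-in for imread output: a 300-by-400 grayscale image (synthetic)
img = uint8(randi([0 255], 300, 400));

% Make sure the image has exactly three channels before resizing
numChannels = size(img, 3);
if numChannels == 1
    img = repmat(img, [1 1 3]);   % replicate gray into R, G, and B
elseif numChannels > 3
    img = img(:, :, 1:3);         % keep only the first three channels
end
```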

Run Prediction for One Image

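Execute the predict method on the dlhdl.Workflow object, and then show the label in the MATLAB command window. Setting 'Profile' to 'on' also displays the profiler performance results for the deep learning processor.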

inputDLArray = dlarray(single(inputImg),'SSCB');

[prediction, speed] = predict(hW,inputDLArray,'Profile','on');

### Finished writing input activations.

### Running single input activation.

Deep Learning Processor Profiler Performance Results

| Layer | LastFrameLatency(cycles) | LastFrameLatency(seconds) | FramesNum | Total Latency (cycles) | Frames/s |
| --- | --- | --- | --- | --- | --- |
| Network | 24702729 | 0.11229 | 1 | 24705200 | 8.9 |
| conv1 | 2226736 | 0.01012 | | | |
| pool1 | 505414 | 0.00230 | | | |
| res2a_branch2a | 974493 | 0.00443 | | | |
| res2a_branch2b | 974112 | 0.00443 | | | |
| res2a | 373972 | 0.00170 | | | |
| res2b_branch2a | 974239 | 0.00443 | | | |
| res2b_branch2b | 974266 | 0.00443 | | | |
| res2b | 373862 | 0.00170 | | | |
| res3a_branch1 | 539290 | 0.00245 | | | |
| res3a_branch2a | 542091 | 0.00246 | | | |
| res3a_branch2b | 909615 | 0.00413 | | | |
| res3a | 187055 | 0.00085 | | | |
| res3b_branch2a | 909767 | 0.00414 | | | |
| res3b_branch2b | 910024 | 0.00414 | | | |
| res3b | 186925 | 0.00085 | | | |
| res4a_branch1 | 491263 | 0.00223 | | | |
| res4a_branch2a | 494696 | 0.00225 | | | |
| res4a_branch2b | 894219 | 0.00406 | | | |
| res4a | 93654 | 0.00043 | | | |
| res4b_branch2a | 894119 | 0.00406 | | | |
| res4b_branch2b | 894076 | 0.00406 | | | |
| res4b | 93554 | 0.00043 | | | |
| res5a_branch1 | 1131916 | 0.00515 | | | |
| res5a_branch2a | 1134581 | 0.00516 | | | |
| res5a_branch2b | 2211568 | 0.01005 | | | |
| res5a | 46662 | 0.00021 | | | |
| res5b_branch2a | 2211536 | 0.01005 | | | |
| res5b_branch2b | 2212127 | 0.01006 | | | |
| res5b | 46902 | 0.00021 | | | |
| pool5 | 73829 | 0.00034 | | | |
| fc1000 | 215979 | 0.00098 | | | |

* The clock frequency of the DL processor is: 220MHz
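The seconds column of the profiler results is the cycle count divided by the 220 MHz clock frequency. For this single-frame run, the Frames/s value is also consistent with the clock frequency divided by the Total Latency cycle count; that relationship is inferred from these numbers rather than taken from the profiler documentation. A sketch of the arithmetic, using values copied from the table:

```matlab
% Convert profiler cycle counts to time at the reported 220 MHz clock
clockHz = 220e6;

lastFrameCycles = 24702729;   % LastFrameLatency(cycles), Network row
totalCycles     = 24705200;   % Total Latency (cycles), Network row
numFrames       = 1;          % FramesNum

lastFrameSec = lastFrameCycles / clockHz;            % seconds for the last frame
framesPerSec = numFrames / (totalCycles / clockHz);  % throughput estimate

% The same conversion applies per layer, for example conv1
conv1Sec = 2226736 / clockHz;

fprintf('Network: %.5f s, %.1f frames/s; conv1: %.5f s\n', ...
        lastFrameSec, framesPerSec, conv1Sec);
```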

[val, idx] = max(prediction);

classNames(idx)

ans = "binder"
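The max function returns only the most likely class. To also see the runner-up classes, sort the scores in descending order and keep the first few. In this sketch, scores and labels are synthetic stand-ins for prediction and classNames. If prediction is returned as a dlarray in your release, you can first convert it to a numeric array with extractdata.

```matlab
% Sketch: report the five highest-scoring classes instead of only the top one.
% 'scores' and 'labels' are synthetic stand-ins for 'prediction' and 'classNames'.
labels = "class" + string(1:10);      % hypothetical class names
scores = [0.01 0.02 0.40 0.05 0.10 0.03 0.25 0.04 0.06 0.04];

k = 5;
[sortedScores, order] = sort(scores, 'descend');
topScores = sortedScores(1:k);
topIdx    = order(1:k);
topLabels = labels(topIdx);

for i = 1:k
    fprintf('%-10s %.3f\n', topLabels(i), topScores(i));
end
```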

Run Prediction for Multiple Images

Load multiple images and retrieve their prediction results by using the multiple frame support feature. For more information, see Multiple Frame Support.

The demoOnImage function loads multiple images and retrieves their prediction results. The annotateresults function displays each prediction result on top of its image, and the images are assembled into a 3-by-5 array.

demoOnImage;
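The demoOnImage and annotateresults helper functions are not reproduced in this section. If you write your own multiple-frame loop, you stack the images along the fourth (B) dimension of the 'SSCB' format. This sketch assumes that you want each call to stay within the 'InputFrameNumberLimit' value of 15 used at compile time, and it splits a hypothetical set of 32 random images into batches of at most 15 frames. The predict call appears only as a comment because it requires the deployed hardware.

```matlab
% Sketch: stack images along the 4th (batch) dimension, as in the
% 'SSCB' layout, and split them into chunks no larger than the
% 'InputFrameNumberLimit' value used at compile time (15 here).
frameLimit = 15;
numImages  = 32;                                      % hypothetical image count
images     = rand(224, 224, 3, numImages, 'single');  % stand-in for real images

chunkStarts = 1:frameLimit:numImages;
batches = cell(1, numel(chunkStarts));
for c = 1:numel(chunkStarts)
    idx = chunkStarts(c) : min(chunkStarts(c) + frameLimit - 1, numImages);
    batches{c} = images(:, :, :, idx);                % 224-by-224-by-3-by-N, N <= 15
    % On hardware, each chunk could then be passed to predict, for example:
    % predict(hW, dlarray(batches{c}, 'SSCB'))
end
```

With 32 images, the loop produces two full batches of 15 frames and a final batch of 2 frames.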

See Also

dlhdl.Workflow | dlhdl.Target | compile | deploy | predict | Deep Network Designer