Deep Network Quantizer

Quantize deep neural network to 8-bit scaled integer data types

Description

Use the Deep Network Quantizer app to reduce the memory requirement of a deep neural network by quantizing weights, biases, and activations of layers to 8-bit scaled integer data types. Using this app, you can:

Visualize the dynamic ranges of convolution layers in a deep neural network.

Select individual network layers to quantize.

Assess the performance of a quantized network.

Generate GPU code to deploy the quantized network using GPU Coder™.

Generate HDL code to deploy the quantized network to an FPGA using Deep Learning HDL Toolbox™.

Generate C++ code to deploy the quantized network to an ARM Cortex-A processor using MATLAB® Coder™.

Generate a simulatable quantized network that you can explore in MATLAB without generating code or deploying to hardware.
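The following sketch is a rough illustration of what a scaled 8-bit integer data type stores. It assumes power-of-two scaling, where each real value is approximated by an int8 integer multiplied by 2^exponent. The exponent of -7 is a hypothetical value chosen for illustration, not one the app selects. The code uses only base MATLAB and no toolbox API.

```matlab
% Hypothetical power-of-two scaling exponent (assumption for illustration)
exponent = -7;                          % scale factor 2^-7 = 0.0078125
x = single([0.37 -0.5 0.991 1.2]);      % single-precision values to quantize

% Quantize: divide by the scale; int8() rounds to nearest and saturates to [-128, 127]
q = int8(x / 2^exponent);

% Dequantize to see the real value that the stored integer represents
xr = single(q) * 2^exponent;
disp([x; xr])
```

The value 1.2 exceeds 127 × 2^-7 (about 0.992), so it saturates at the largest representable value. Values like this lie outside the range of the quantized data type.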

This app requires the Deep Learning Toolbox Model Compression Library. To learn about the products required to quantize a deep neural network, see Quantization Workflow Prerequisites.

Open the Deep Network Quantizer App

MATLAB command prompt: Enter deepNetworkQuantizer.

MATLAB toolstrip: On the Apps tab, under Machine Learning and Deep Learning, click the app icon.

Examples

Quantize a Neural Network for GPU Target

To explore the behavior of a neural network with quantized layers, use the Deep Network Quantizer app. This example quantizes the learnable parameters and activations of layers in the squeezenet neural network after retraining the network to classify new images.

This example uses a dlnetwork with the GPU execution environment.

Load the network to quantize into the base workspace.

load squeezedlnetmerch

net

net = 

  dlnetwork with properties:

         Layers: [67×1 nnet.cnn.layer.Layer]
    Connections: [74×2 table]
     Learnables: [52×3 table]
          State: [0×3 table]
     InputNames: {'data'}
    OutputNames: {'prob'}
    Initialized: 1

  View summary with summary.

Define calibration and validation data.

The app uses calibration data to exercise the network and collect the dynamic ranges of the weights, biases, and activations for layers of the network. For the best quantization results, the calibration data must be representative of inputs to the network.
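Range collection can be pictured as tracking a running minimum and maximum for each weight, bias, or activation as calibration mini-batches pass through the network. This minimal sketch tracks a single hypothetical activation tensor with made-up values. The app's internal bookkeeping may differ.

```matlab
% Hypothetical activations observed for one layer, one cell per mini-batch
batches = {[0.2 -1.5 3.0], [4.1 -0.3], [-2.2 0.9]};

% Running minimum and maximum across all calibration mini-batches
rangeMin = Inf;
rangeMax = -Inf;
for k = 1:numel(batches)
    rangeMin = min(rangeMin, min(batches{k}));
    rangeMax = max(rangeMax, max(batches{k}));
end

fprintf('Observed range: [%g, %g]\n', rangeMin, rangeMax);
```

If the calibration data never produces the extreme values that the network sees in deployment, the collected range is too narrow. This is why the calibration data should be representative.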

The app uses the validation data to test the network after quantization to understand the effects of the limited range and precision of the quantized learnable parameters of the layers in the network.

In this example, use the images in the MerchData data set. Split the data into calibration and validation data sets.

unzip('MerchData.zip');
imds = imageDatastore('MerchData', ...
    'IncludeSubfolders',true, ...
    'LabelSource','foldernames');
[calData, valData] = splitEachLabel(imds,0.7,'randomized');

At the MATLAB command prompt, open the app.

deepNetworkQuantizer

In the app, click New and select Quantize a network.

In the dialog, select the execution environment and the network to quantize from the base workspace. For this example, select a GPU execution environment and net - dlnetwork. For more information on selecting an execution environment, see Quantization of Deep Neural Networks.

Select the Prepare network for quantization option. Network preparation modifies your neural network to improve performance and avoid error conditions in the quantization workflow. For more information, see prepareNetwork.

Click OK. The app displays the layer graph of the selected network.

In the Calibrate section of the toolstrip, under Calibration Data, select the ImageDatastore object from the base workspace containing the calibration data, calData. Click Calibrate.

The Deep Network Quantizer uses the calibration data to exercise the network and collect range information for the weights, biases, and activations of the network layers.

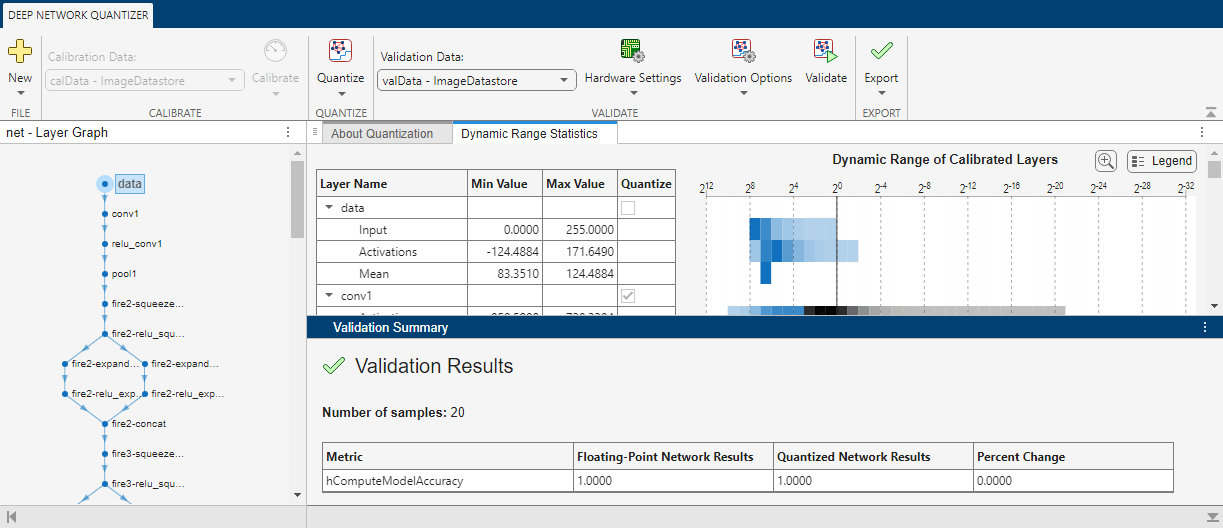

When the calibration is complete, the app displays a table containing the weights, biases, and activations for layers of the network and their minimum and maximum values during the calibration. To the right of the table, the app displays histograms of the dynamic ranges of the parameters.

In the Quantize section of the toolstrip, under Quantize, select the MinMax exponent scheme. Click Quantize.

The Deep Network Quantizer quantizes the weights, activations, and biases of layers in the network to scaled 8-bit integer data types. The app updates the histograms of the dynamic ranges of the parameters. The gray regions of the histograms indicate data that cannot be represented by the quantized data type. For more information on how to interpret these histograms, see Data Types and Scaling for Quantization of Deep Neural Networks.
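One way to picture the MinMax scheme is as follows. The scheme picks the smallest power-of-two scaling exponent for which the largest calibrated magnitude still fits in int8, so nothing overflows. Some values then become too small to represent and round to zero. This sketch assumes the exponent formula ceil(log2(maxAbs/127)), because the app's exact rule is not given on this page. The data values are hypothetical.

```matlab
% Hypothetical calibration data for one layer's activations
data = [4.1 -2.2 0.01 0.02 0.5];
maxAbs = max(abs(data));

% MinMax-style exponent: smallest exponent with 127 * 2^exponent >= maxAbs
exponent = ceil(log2(maxAbs / 127));

% Quantize with saturation, then count values lost at either end of the range
q = int8(data / 2^exponent);
numUnderflow = sum(q == 0 & data ~= 0);             % nonzero values rounded to zero
numOverflow  = sum(abs(data) > 127 * 2^exponent);   % values beyond the int8 range

fprintf('exponent = %d, underflow = %d, overflow = %d\n', ...
    exponent, numUnderflow, numOverflow);
```

In this example the two smallest values round to zero and nothing overflows. Values lost in this way correspond to what the gray histogram regions indicate.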

In the Quantize column of the table, indicate whether to quantize the learnable parameters in the layer. Layers that are not quantized remain in single precision after quantization.

In the Validate section of the toolstrip, under Validation Data, select the ImageDatastore object from the base workspace containing the validation data, valData.

Define a custom metric function, hComputeModelAccuracy. Save this custom metric function in a local file.

function accuracy = hComputeModelAccuracy(predictionScores, ~, dataStore)
% Computes model-level accuracy statistics
    classNames = categories(dataStore.Labels);
    predictedLabels = scores2label(predictionScores,classNames);
    accuracy = mean(squeeze(predictedLabels) == dataStore.Labels);
end

In the Validate section of the toolstrip, under Validation Options, enter the name of the custom metric function, hComputeModelAccuracy. Select Add to add hComputeModelAccuracy to the list of metric functions available in the app. Select hComputeModelAccuracy as the metric function to use.

The custom metric function must be on the MATLAB path. Otherwise, this step causes an error.

Click Validate. The app uses the validation data to exercise the network.

When the validation is complete, the app displays the results of the validation, including:

Metric function used for validation

Result of the metric function before and after quantization

Memory requirement of the network before and after quantization (MB)
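The following sketch gives a back-of-the-envelope estimate of how the memory requirement changes, including when some layers stay in single precision. It counts only learnable parameters, at 4 bytes per single value and 1 byte per int8 value. The layer sizes and the choice of unquantized layer are hypothetical. The figure that the app reports may also account for other data.

```matlab
% Hypothetical learnable-parameter counts for four layers
numParams = [1000 20000 50000 4000];

% Layers selected in the Quantize column (the last one stays in single precision)
isQuantized = [true true true false];

bytesSingle = 4;   % single precision: 4 bytes per value
bytesInt8   = 1;   % int8: 1 byte per value

originalBytes  = sum(numParams) * bytesSingle;
quantizedBytes = sum(numParams(isQuantized)) * bytesInt8 + ...
                 sum(numParams(~isQuantized)) * bytesSingle;

fprintf('Original: %d bytes, quantized: %d bytes (%.1fx smaller)\n', ...
    originalBytes, quantizedBytes, originalBytes / quantizedBytes);
```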

The app displays only scalar values in the validation results table. To view the validation results for a custom metric function with nonscalar output, export the dlquantizer object as described below, then validate the dlquantizer object using the validate function in the MATLAB command window.

If the performance of the quantized network is not satisfactory, you can choose not to quantize some layers by clearing them in the table. You can also explore the effects of choosing a different exponent selection scheme for quantization in the Quantize menu. To see the effects of these changes, quantize and validate the network again.

After calibrating and quantizing the network, you can choose to export the quantized network or the dlquantizer object. Select the Export button. In the drop-down list, select from the following options:

Export Quantized Network — Add the quantized network to the base workspace. This option exports a simulatable quantized network that you can explore in MATLAB without deploying to hardware.

Export Quantizer — Add the dlquantizer object to the base workspace. You can save the dlquantizer object and use it for further exploration in the Deep Network Quantizer app or at the command line, or use it to generate code for your target hardware.

Generate Code — Open the GPU Coder app and generate GPU code from the quantized neural network. Generating GPU code requires a GPU Coder™ license.

This example uses:

- Deep Learning Toolbox

- MATLAB Coder

- Embedded Coder

- Deep Learning Toolbox Model Compression Library

- MATLAB Support Package for Raspberry Pi Hardware

- MATLAB Coder Interface for Deep Learning

To explore the behavior of a neural network with quantized convolution layers, use the Deep Network Quantizer app. This example quantizes the learnable parameters and activations of layers in the squeezenet neural network after retraining the network to classify new images.

This example uses a dlnetwork with the CPU execution environment.

Load the network to quantize into the base workspace.

load squeezedlnetmerch

net

net = 

  dlnetwork with properties:

         Layers: [67×1 nnet.cnn.layer.Layer]
    Connections: [74×2 table]
     Learnables: [52×3 table]
          State: [0×3 table]
     InputNames: {'data'}
    OutputNames: {'prob'}
    Initialized: 1

  View summary with summary.

Define calibration and validation data.

The app uses calibration data to exercise the network and collect the dynamic ranges of the weights, biases, and activations for layers of the network. For the best quantization results, the calibration data must be representative of inputs to the network.

The app uses the validation data to test the network after quantization to understand the effects of the limited range and precision of the quantized learnable parameters of the layers in the network.

In this example, use the images in the MerchData data set. Split the data into calibration and validation data sets.

unzip('MerchData.zip');
imds = imageDatastore('MerchData', ...
    'IncludeSubfolders',true, ...
    'LabelSource','foldernames');
[calData, valData] = splitEachLabel(imds, 0.7, 'randomized');

At the MATLAB command prompt, open the app.

deepNetworkQuantizer

In the app, click New and select Quantize a network.

In the dialog, select the execution environment and the network to quantize from the base workspace. For this example, select a CPU execution environment and net - dlnetwork. For more information on selecting an execution environment, see Quantization of Deep Neural Networks.

Select the Prepare network for quantization option. Network preparation modifies your neural network to improve performance and avoid error conditions in the quantization workflow. For more information, see prepareNetwork.

Click OK. The app displays the layer graph of the selected network.

In the Calibrate section of the toolstrip, under Calibration Data, select the ImageDatastore object from the base workspace containing the calibration data, calData. Click Calibrate.

The Deep Network Quantizer uses the calibration data to exercise the network and collect range information for the weights, biases, and activations of the network layers.

When the calibration is complete, the app displays a table containing the weights, biases, and activations for layers of the network and their minimum and maximum values during the calibration. To the right of the table, the app displays histograms of the dynamic ranges of the parameters.

In the Quantize section of the toolstrip, under Quantize, select the MinMax exponent scheme. Click Quantize.

The Deep Network Quantizer quantizes the weights, activations, and biases of layers in the network to scaled 8-bit integer data types. The app updates the histograms of the dynamic ranges of the parameters. The gray regions of the histograms indicate data that cannot be represented by the quantized data type. For more information on how to interpret these histograms, see Data Types and Scaling for Quantization of Deep Neural Networks.

In the Quantize column of the table, indicate whether to quantize the learnable parameters in the layer. Layers that are not quantized remain in single precision after quantization.

In the Validate section of the toolstrip, under Validation Data, select the ImageDatastore object from the base workspace containing the validation data, valData.

In the Validate section of the toolstrip, under Hardware Settings, select Raspberry Pi as the Simulation Environment. The app auto-populates the Target credentials from an existing connection or from the last successful connection. You can also use this option to create a new Raspberry Pi connection.

Define a custom metric function, hComputeModelAccuracy. Save this custom metric function in a local file.

function accuracy = hComputeModelAccuracy(predictionScores, ~, dataStore)
% Computes model-level accuracy statistics
    classNames = categories(dataStore.Labels);
    predictedLabels = scores2label(predictionScores,classNames);
    accuracy = mean(squeeze(predictedLabels) == dataStore.Labels);
end

In the Validate section of the toolstrip, under Validation Options, enter the name of the custom metric function, hComputeModelAccuracy. Select Add to add hComputeModelAccuracy to the list of metric functions available in the app. Select hComputeModelAccuracy as the metric function to use.

The custom metric function must be on the MATLAB path. Otherwise, this step causes an error.

Click Validate. The app uses the validation data to exercise the network.

When the validation is complete, the app displays the results of the validation, including:

Metric function used for validation

Result of the metric function before and after quantization

Memory requirement of the network before and after quantization (MB)

If the performance of the quantized network is not satisfactory, you can explore the effects of choosing a different exponent selection scheme for quantization in the Quantize drop-down list. To see the effects of these changes, quantize and validate the network again.

After calibrating and quantizing the network, you can choose to export the quantized network or the dlquantizer object. Select the Export button. In the drop-down list, select from the following options:

Export Quantized Network — Add the quantized network to the base workspace. This option exports a simulatable quantized network that you can explore in MATLAB without deploying to hardware.

Export Quantizer — Add the dlquantizer object to the base workspace. You can save the dlquantizer object and use it for further exploration in the Deep Network Quantizer app or at the command line, or use it to generate code for your target hardware.

Generate Code — Open the MATLAB Coder app and generate C++ code from the quantized neural network. Generating C++ code requires a MATLAB Coder™ license.

Import a dlquantizer object from the base workspace into the Deep Network Quantizer app. This lets you begin quantizing a deep neural network at the command line or in the app, and resume your work later in the app.

Open the Deep Network Quantizer app.

deepNetworkQuantizer

In the app, click New and select Import dlquantizer object.

In the dialog, select a dlquantizer object to import from the base workspace. For this example, use the dlquantizer object quantizer from the above example Quantize a Neural Network for GPU Target. You can create the quantizer object by selecting Export Quantizer from the Export drop-down list after quantizing the network.

The app imports any data contained in the dlquantizer object that was collected at the command line, including the quantized network, calibration data, validation data, and calibration statistics.

The app displays a table containing the quantization data in the imported dlquantizer object, quantizer. To the right of the table, the app displays histograms of the dynamic ranges of the parameters. The gray regions of the histograms indicate data that cannot be represented by the quantized data type. For more information on how to interpret these histograms, see Quantization of Deep Neural Networks.


Parameters

When you select New > Quantize a Network, the app allows you to choose the execution environment for the quantized network. How the network is quantized depends on the choice of execution environment.

When you select the MATLAB execution environment, the app performs target-agnostic quantization of the neural network. You do not need to have the target hardware to explore the quantized network in MATLAB.

When you select Network Preparation, the app modifies your neural network to improve performance and avoid error conditions in the quantization workflow. For more information, see prepareNetwork.

Specify hardware settings based on your execution environment.

GPU Execution Environment

Select from the following simulation environments:

| Simulation Environment | Action |
|---|---|
| GPU (Simulate on host GPU) | Deploys the quantized network to the host GPU. Validates the quantized network by comparing performance to the single-precision version of the network. |
| MATLAB (Simulate in MATLAB) | Simulates the quantized network in MATLAB. Validates the quantized network by comparing performance to the single-precision version of the network. |

FPGA Execution Environment

Select from the following simulation environments:

| Simulation Environment | Action |
|---|---|
| Cosimulation (Emulate on host) | Emulates the quantized network in MATLAB. Validates the quantized network by comparing performance to the single-precision version of the network. |
| Intel Arria 10 SoC (arria10soc_int8) | Deploys the quantized network to an Intel® Arria® 10 SoC board by using the arria10soc_int8 bitstream. Validates the quantized network by comparing performance to the single-precision version of the network. |
| Xilinx ZCU102 (zcu102_int8) | Deploys the quantized network to a Xilinx® Zynq® UltraScale+™ MPSoC ZCU102 board by using the zcu102_int8 bitstream. Validates the quantized network by comparing performance to the single-precision version of the network. |
| Xilinx ZC706 (zc706_int8) | Deploys the quantized network to a Xilinx Zynq-7000 ZC706 board by using the zc706_int8 bitstream. Validates the quantized network by comparing performance to the single-precision version of the network. |

Each FPGA board selection uses a default bitstream that is specialized to meet the resource requirements of the board. To specify another bitstream to use for deployment, select Add Custom Bitstream.

When you select the Intel Arria 10 SoC, Xilinx ZCU102, or Xilinx ZC706 option, additionally select the interface to use to deploy and validate the quantized network.

| Target Option | Action |
|---|---|
| JTAG | Programs the target FPGA board selected under Simulation Environment by using a JTAG cable. For more information, see JTAG Connection (Deep Learning HDL Toolbox). |
| Ethernet | Programs the target FPGA board selected in Simulation Environment through the Ethernet interface. Specify the IP address for your target board in the IP Address field. |

CPU Execution Environment

Select from the following simulation environments:

| Simulation Environment | Action |
|---|---|
| Raspberry Pi | Deploys the quantized network to the Raspberry Pi board. Validates the quantized network by comparing performance to the single-precision version of the network. |

When you select the Raspberry Pi option, additionally specify the following details for the raspi connection.

| Target Option | Description |
|---|---|
| Hostname | Hostname of the board, specified as a string. |
| Username | Linux® username, specified as a string. |
| Password | Linux user password, specified as a string. |

Select the exponent selection scheme to use for quantization of the network:

MinMax — Evaluate the exponent based on the range information in the calibration statistics and avoid overflows.

Histogram — Distribution-based scaling which evaluates the exponent to best fit the calibration data.
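The difference between the two schemes shows up clearly with data that has a dense cluster and one outlier. The sketch below uses a simplified stand-in for distribution-based scaling. It searches a few exponents at or below the MinMax-style exponent and keeps the one with the lowest mean squared quantization error. This MSE search is an assumption for illustration, not the app's documented Histogram algorithm, and the data is hypothetical.

```matlab
% Hypothetical calibration data: a dense cluster in [-1, 1] plus one outlier
data = [linspace(-1, 1, 2001), 2.1];

% Mean squared error after quantizing with exponent e (int8 saturates)
quantErr = @(e) mean((data - double(int8(data / 2^e)) * 2^e).^2);

% MinMax-style choice: smallest exponent that avoids overflow
minMaxExponent = ceil(log2(max(abs(data)) / 127));

% Distribution-based stand-in: try finer scales, keep the lowest-error exponent
candidates = minMaxExponent-3 : minMaxExponent;
mse = arrayfun(quantErr, candidates);
[~, idx] = min(mse);
bestExponent = candidates(idx);

fprintf('MinMax exponent: %d, MSE-based exponent: %d\n', ...
    minMaxExponent, bestExponent);
```

Letting the single outlier at 2.1 saturate buys finer resolution for the 2001 clustered values. As a result, the error-minimizing exponent (-6) is one step finer than the MinMax-style exponent (-5).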

The Deep Network Quantizer app determines a metric function to use for the validation based on the type of the quantized network.

The default metric functions are supported for DAGNetwork and SeriesNetwork objects. You must define a custom metric function for a dlnetwork object. For an example of a custom metric function for a dlnetwork object, see Quantize Multiple-Input Network Using Image and Feature Data.

| Type of Network | Default Metric Function |
|---|---|
| Classification | Top-1 Accuracy — Accuracy of the network |
| Object detection | Average Precision — Average precision over all detection results |
| Regression | MSE — Mean squared error of the network |
| Semantic segmentation | WeightedIOU — Average IoU of each class, weighted by the number of pixels in that class |
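The following minimal base-MATLAB sketch shows how the Top-1 accuracy and MSE metrics in the table can be computed from network outputs. The scores and targets are hypothetical. The sketch does not reproduce the app's default metric implementations, and it omits the object detection and semantic segmentation metrics.

```matlab
% Hypothetical classification scores: one row per observation, one column per class
scores = [0.1 0.7 0.2;
          0.8 0.1 0.1;
          0.3 0.3 0.4];
trueClass = [2; 1; 2];

% Top-1 accuracy: fraction of observations whose highest score is the true class
[~, predictedClass] = max(scores, [], 2);
top1Accuracy = mean(predictedClass == trueClass);

% Mean squared error for a hypothetical regression output
yPred = [1.1 1.9 3.2];
yTrue = [1.0 2.0 3.0];
mseValue = mean((yPred - yTrue).^2);

fprintf('Top-1 accuracy: %.4f, MSE: %.4f\n', top1Accuracy, mseValue);
```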

Export Quantized Network — After calibrating the network, quantize and add the quantized network to the base workspace. This option exports a simulatable quantized network, quantizedNet, that you can explore in MATLAB without deploying to hardware. This option is equivalent to using quantize at the command line. Code generation is not supported for the exported quantized network.

Export Quantizer — Add the dlquantizer object to the base workspace. You can save the dlquantizer object and use it for further exploration in the Deep Network Quantizer app or at the command line, or use it to generate code for your target hardware.

Generate Code

| Execution Environment | Code Generation |
|---|---|
| GPU | Open the GPU Coder app and generate GPU code from the quantized and validated neural network. Generating GPU code requires a GPU Coder license. |
| CPU | Open the MATLAB Coder app and generate C++ code from the quantized and validated neural network. Generating C++ code requires a MATLAB Coder license. |

Limitations

Validation on target hardware for CPU, FPGA, and GPU execution environments is not supported in MATLAB Online™. For FPGA and GPU execution environments, the app performs validation through emulation on the MATLAB Online host. You can also perform GPU validation if GPU support has been added to your MATLAB Online Server™ cluster. For more information on GPU support for MATLAB Online, see Configure GPU Support in MATLAB Online Server (MATLAB Online Server).

Version History

Introduced in R2020a

When you use the MATLAB Execution Environment for quantization, simulation of the network is performed using fixed-point data types. This simulation requires a Fixed-Point Designer™ license for the quantization and validation steps of the deep learning quantization workflow.

You can now validate the quantized network on a Raspberry Pi® board by selecting Raspberry Pi in Hardware Settings and specifying target credentials for a raspi object in the validation step.

You can now choose whether to calibrate your network using the host GPU or host CPU. By default, the calibrate function and the Deep Network Quantizer app calibrate on the host GPU if one is available.

In previous versions, the execution environment had to be the same as the instrumentation environment used for the calibration step of quantization.

The Deep Network Quantizer app now supports calibration and validation for dlnetwork objects.

The Deep Network Quantizer app now supports the quantization and validation workflow for CPU targets.

Specify MATLAB as the Execution Environment to quantize your neural networks without generating code or committing to a specific target for code deployment. This can be useful if you:

Do not have access to your target hardware.

Want to inspect your quantized network without generating code.

Your quantized network implements int8 data instead of single data. It keeps the same layers and connections as the original network, and it has the same inference behavior as it would when running on hardware.
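The following sketch makes integer computation concrete with a single multiply-accumulate. The int8 weights and activations are multiplied as integers, summed in a wider int32 accumulator, and rescaled by the combined power-of-two exponent. The values and exponents are hypothetical. This is a conceptual sketch of integer arithmetic, not the code that the app generates or simulates.

```matlab
% Hypothetical weights and activations for a single output value
w = [0.51 -0.26 0.74];
x = [1.0 2.0 -0.5];

% Hypothetical power-of-two exponents chosen during quantization
expW = -7;   % weights scaled by 2^-7
expX = -5;   % activations scaled by 2^-5

% Store weights and activations as int8 (rounded and saturated)
qw = int8(w / 2^expW);
qx = int8(x / 2^expX);

% Multiply as integers and accumulate in int32 so products do not saturate
acc = sum(int32(qw) .* int32(qx));

% Rescale the integer accumulator back to a real value
yInt = double(acc) * 2^(expW + expX);
yRef = sum(w .* x);   % floating-point reference

fprintf('int8 result: %.6f, reference: %.6f\n', yInt, yRef);
```

The integer result (about -0.378906) differs from the reference (-0.38) only because of the rounding introduced when the weights were quantized. The activations in this example are exactly representable.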

Once you have quantized your network, you can use the quantizationDetails function to inspect your quantized network.

Additionally, you have the option to deploy the code to a GPU target.

