Assess Model Validation Findings with Large Language Models

This example shows how to customize Modelscape Review Editor signoff forms to use Large Language Models (LLMs) and other text analytics tools to assess model validation findings. Use these tools to suggest approval outcomes and formulate constructive feedback for model developers. For more general information about customizing signoff forms, see Customization of Signoff Forms in Review Editor.

This example uses the Large Language Models (LLMs) with MATLAB repository and requires an OpenAI® API key. You can also use the forms in this example with other programmatic assessments, such as Sentiment Analysis in MATLAB (Text Analytics Toolbox).

Connect Review Editor to LLM Endpoint

1. Clone the Large Language Models (LLMs) with MATLAB repository and add its contents to your MATLAB path.

2. Follow the instructions in the repository for setting up your OpenAI API key.

3. To enable the Review Editor to access your LLM endpoint, configure the network policy of the MATLAB Online Server worker. For details, see Configure Network Policy in Set Up Single MATLAB Configuration (MATLAB Online Server).

Note that you are responsible for any fees OpenAI might charge for using their APIs, and you should be familiar with the limitations and risks associated with using this technology.
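Step 2 can be sketched as follows. This sketch assumes you store the key in a .env file and load it with loadenv (MATLAB R2023a or later), which is one of the options the repository's instructions describe. The file name and the warning text are illustrative. In a Review Editor deployment, how the key reaches the server worker depends on your own server configuration.

```matlab
% Assumption: a .env file in the current folder contains the line
%   OPENAI_API_KEY=<your key>
% Keep this file out of source control and do not hard-code the key in the form class.
envFile = ".env";
if isfile(envFile)
    loadenv(envFile);   % sets the variables defined in the file as environment variables
end

% openAIChat reads the key from OPENAI_API_KEY unless you pass APIKey explicitly
keyIsSet = ~isempty(getenv("OPENAI_API_KEY"));
if ~keyIsSet
    warning("OPENAI_API_KEY is not set. Load your .env file or pass APIKey to openAIChat.");
end
```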

Design Your Form

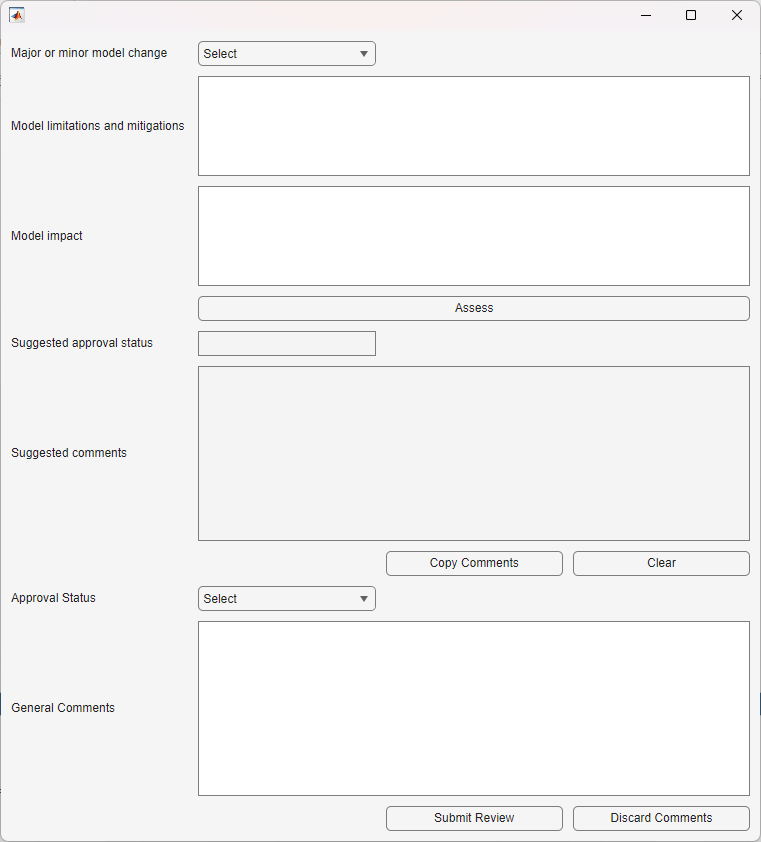

Structure your signoff form as a questionnaire. Use questions that are relevant to the type of models being validated and to the scope of the validation exercise. The form shown above contains examples of such questions in the context of derivatives pricing model validation.

Use the class template in the Appendix of this example as the starting point for building your LLM assistant.

Modify the setupFormLayout() function to add or change any of the questions or their associated input fields. For example, use drop-downs where the answer can be selected from a list of known options. For more information about customizing forms, see Customization of Signoff Forms in Review Editor.
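If you add or remove a question, the grid size, the RowHeight list, and the NumLLMInputs constant in the template must change together, because every row below the questionnaire is positioned relative to NumLLMInputs. The sketch below reproduces that row arithmetic for the layout in the template. The variable names questionHeights and fixedHeights are hypothetical and are not part of the template.

```matlab
% Hypothetical: one row height per questionnaire question sent to the LLM
questionHeights = {25, 100, 100};   % drop-down, text area, text area

% Fixed rows below the questionnaire, in the order used by setupFormLayout:
% Assess, suggested status, suggested comments, Clear/Copy Comments,
% approval status, general comments, Submit/Discard
fixedHeights = {25, 25, 175, 25, 25, 175, 25};

numLLMInputs = numel(questionHeights);
rowHeight = [questionHeights, fixedHeights];           % value for 'RowHeight'
gridSize = [numLLMInputs + numel(fixedHeights), 4];    % size for uigridlayout
```

For example, appending a fourth drop-down question (questionHeights = {25, 100, 100, 25}) gives an 11-by-4 grid; you would then also set NumLLMInputs = 4 and add a matching %s placeholder to your prompt template.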

In this design, clicking the Assess button sends the answers to the first three questions to the LLM. The LLM output appears in the non-editable Suggested approval status and Suggested comments boxes. The validator must still actively select the final approval status and fill in the General Comments box; the Copy Comments button copies the suggested comments into that box as a starting point.

Set Up Your LLM Client

Implement the setupAssessor() function in the template class to initialize your chat assistant. For example:

function assessor = setupAssessor(~)
    systemPrompt = "..."; % provide system prompt
    assessor = openAIChat(systemPrompt);
end

Use the systemPrompt input to guide the behaviour and responses of the LLM, for example by telling it to emulate a "Head of Validation for Pricing Models" in a major investment bank.
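A fuller body for setupAssessor might look like the following. The system prompt wording is hypothetical, and Temperature=0 is an assumption intended to make repeated assessments of the same answers more consistent. openAIChat also accepts other options, such as ModelName; see the repository documentation for the version you cloned.

```matlab
% Hypothetical system prompt: adapt the persona and criteria to your validation policy
systemPrompt = join([
    "You are the Head of Validation for Pricing Models at a major investment bank."
    "You review model validation findings and suggest whether a model change should be approved."
    "Be constructive and specific in your feedback to model developers."], " ");

% Requires the LLMs with MATLAB repository on the path and an OpenAI API key.
% Temperature = 0 (an assumption) reduces variation between repeated calls.
assessor = openAIChat(systemPrompt, Temperature=0);
```

In the template class, place these lines in the body of setupAssessor so that the method returns assessor.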

Design Your Prompt Templates

Write your prompt as a template into which you can insert your questionnaire answers. Ask for the answer to be returned in a specific, structured format, for example as a JSON string representing an object with specific fields. To do this, include an instruction such as the following in your prompt template:

Format the response as a JSON string with exactly three keys "Status", "Comments" and "Questions".

Include any specific criteria you might have for a model to pass, for example by assigning weights to your questionnaire answers.

Finally, include a template for your questionnaire answers in the prompt as follows:

1. **Major or minor model change** %s
2. **Model limitations and mitigations** %s
3. **Model impact** %s

Save the prompt as a text file in the same folder as your form class definition. In the onCallAssessor() function, replace promptTemplate.txt with the name of your file.
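Because onCallAssessor fills the template with sprintf, the contents of the text file act as a format specification: each %s receives one answer, in order, any literal percent sign (for example, in a weighting such as 50%) must be written as %%, and a backslash starts an escape sequence such as \n. The sketch below shows the substitution on a hypothetical template and hypothetical control values, joined into single strings in the same way the form does.

```matlab
% Hypothetical template text, as it might appear in promptTemplate.txt
template = ['Weight model impact at 50%% and model limitations at 30%%.', newline, ...
    'Format the response as a JSON string with exactly three keys ', ...
    '"Status", "Comments" and "Questions".', newline, ...
    '1. **Major or minor model change** %s', newline, ...
    '2. **Model limitations and mitigations** %s', newline, ...
    '3. **Model impact** %s'];

% Hypothetical control values: a drop-down returns a character vector,
% and a text area returns a cell array with one character vector per line
controlValues = {'Minor', {'Uses flat volatility.'; 'Mitigated by a reserve.'}, {'Low P&L impact.'}};

% Join each answer into a single string, as onCallAssessor does
questionnaireAnswers = cell(1, numel(controlValues));
for i = 1:numel(controlValues)
    questionnaireAnswers{i} = join(string(controlValues{i}));
end

% Each %s receives one answer; each %% becomes a literal percent sign
message = sprintf(template, questionnaireAnswers{:});
disp(message)
```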

Process Your Outputs

Modify the onCallAssessor() function to process your LLM outputs, if necessary. The template class in this example shows how to process a JSON string response with fields Status, Comments and Questions.
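You can try these processing steps offline on a sample response. The response text below is hypothetical. Models often wrap JSON output in a Markdown code fence labeled json, which the template strips before calling jsondecode. The try/catch fallback is an addition that the template does not include; it shows one way to keep the form usable when the model returns text that is not valid JSON.

```matlab
% Hypothetical LLM response, wrapped in a Markdown code fence
response = "```json" + newline + ...
    "{""Status"": ""Pass"", ""Comments"": ""Limitations are documented and mitigated."", " + ...
    """Questions"": [""How is the reserve sized?"", ""Who monitors the limitation?""]}" + ...
    newline + "```";

% Strip the fence, as onCallAssessor does
if startsWith(response, "```json")
    response = extractBetween(response, "```json", "```");
    response = response{1};
end

% Assumed fallback (not in the template): show the raw text if parsing fails
try
    decoded = jsondecode(char(response));
catch
    decoded = struct("Status", "UNKNOWN", "Comments", char(response), "Questions", {{}});
end

% Build the suggested status and the numbered feedback questions
suggestedPassFail = upper(decoded.Status);
comments = [decoded.Comments, newline, newline, 'Feedback questions: ', newline];
for i = 1:numel(decoded.Questions)
    comments = [comments, num2str(i), '. ', decoded.Questions{i}, newline]; %#ok<AGROW>
end
disp(comments)
```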

Create an Extension Point for Your Custom Form

To create an extension point for your form definition, follow the instructions in Customization of Signoff Forms in Review Editor. Package your extensions.json file, the custom class definition, and the associated prompt template file into a toolbox, along with a copy of the Large Language Models (LLMs) with MATLAB repository.
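One way to build the toolbox programmatically is with matlab.addons.toolbox.ToolboxOptions and matlab.addons.toolbox.packageToolbox (MATLAB R2023a or later). The folder name, identifier, toolbox name, and output file below are hypothetical. Where extensions.json must sit inside the folder is described in Customization of Signoff Forms in Review Editor.

```matlab
% Hypothetical folder containing extensions.json, the form class definition,
% promptTemplate.txt, and a copy of the LLMs with MATLAB repository
toolboxFolder = fullfile(pwd, "LLMSignoffFormToolbox");

% Hypothetical unique identifier for the toolbox
identifier = "llm-assisted-signoff-form";
opts = matlab.addons.toolbox.ToolboxOptions(toolboxFolder, identifier);
opts.ToolboxName = "LLM-Assisted Signoff Form";
opts.ToolboxVersion = "1.0.0";
opts.OutputFile = fullfile(pwd, "LLMAssistedSignoffForm.mltbx");

% Create the .mltbx file
matlab.addons.toolbox.packageToolbox(opts);
```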

Deploy this toolbox as part of your Modelscape Review Editor installation.

Appendix: Code Template for LLM-Assisted Signoff Forms

Use the template below to implement the form shown above. Implement the setupAssessor method to initialize your OpenAI chat client, and modify the onCallAssessor method, if necessary, to process the outputs of the LLM.

classdef LLMAssistedSignoffFormTemplate < modelscape.review.app.signoff.SignoffForm
    % Prototype form for using an abstract "assessor", which could be an LLM
    % or a MATLAB sentiment analyzer

    % Copyright 2025 The MathWorks, Inc.

    properties (Access = protected)
        Assessor
    end

    properties (Access = private, Constant)
        % Number of questionnaire answers to be sent to the LLM
        NumLLMInputs = 3
    end

    properties (SetAccess = protected, Hidden)
        CallAssessorButton
        ClearAssessorOutputButton
    end

    methods
        function this = LLMAssistedSignoffFormTemplate(parent)
            if nargin < 1
                parent = uifigure;
            end
            this@modelscape.review.app.signoff.SignoffForm(parent);
            this.Assessor = this.setupAssessor;
            this.setupFormLayout;
        end

        function assessor = setupAssessor(~)
            % Replace with your chat client, for example openAIChat(systemPrompt)
            assessor = [];
        end

        function setupFormLayout(this)
            this.UIGrid = uigridlayout(this.Parent, [10 4], ...
                'ColumnWidth', {'1x', '1x', '1x', '1x'}, ...
                'RowHeight', {25, 100, 100, 25, 25, 175, 25, 25, 175, 25});

            import modelscape.review.app.signoff.helpers.formatLabel;
            import modelscape.review.app.signoff.helpers.formatDropDown;
            import modelscape.review.app.signoff.helpers.formatTextArea;
            import modelscape.review.app.signoff.helpers.formatCheckBox;

            % Questionnaire (rows 1 to NumLLMInputs): answers sent to the LLM
            this.Labels{1} = formatLabel(this.UIGrid, 'Major or minor model change', ...
                1, 1);
            this.Controls{1} = formatDropDown(this.UIGrid, 1, 2, {'Select', 'Minor', 'Major'}, 'Select');
            this.Controls{1}.ValueChangedFcn = @(~,~)this.toggleCallAssessorButton(true);

            this.Labels{2} = formatLabel(this.UIGrid, 'Model limitations and mitigations', ...
                2, 1);
            this.Controls{2} = formatTextArea(this.UIGrid, 2, [2 4]);
            this.Controls{2}.ValueChangedFcn = @(~,~)this.toggleCallAssessorButton(true);

            this.Labels{3} = formatLabel(this.UIGrid, 'Model impact', ...
                3, 1);
            this.Controls{3} = formatTextArea(this.UIGrid, 3, [2 4]);
            this.Controls{3}.ValueChangedFcn = @(~,~)this.toggleCallAssessorButton(true);

            % Assess button
            rCallAssessor = this.NumLLMInputs+1;
            createCallAssessorButton(this, rCallAssessor, [2 4]);

            % Non-editable boxes for the LLM outputs
            rAutoStatus = this.NumLLMInputs+2;
            this.Labels{rAutoStatus} = formatLabel(this.UIGrid, 'Suggested approval status', rAutoStatus, 1);
            this.Controls{rAutoStatus} = formatTextArea(this.UIGrid, rAutoStatus, 2);
            this.Controls{rAutoStatus}.Editable = false;
            this.Controls{rAutoStatus}.BackgroundColor = this.UIGrid.Parent.Color;

            rAutoComments = this.NumLLMInputs+3;
            this.Labels{rAutoComments} = formatLabel(this.UIGrid, 'Suggested comments', rAutoComments, 1);
            this.Controls{rAutoComments} = formatTextArea(this.UIGrid, rAutoComments, [2 4]);
            this.Controls{rAutoComments}.Editable = false;
            this.Controls{rAutoComments}.BackgroundColor = this.UIGrid.Parent.Color;

            % Clear and Copy Comments buttons
            rAssessorButtons = this.NumLLMInputs+4;
            createClearAssessmentButton(this, rAssessorButtons, 4);
            copyButton = uibutton(this.UIGrid, ...
                'Text', 'Copy Comments', ...
                'ButtonPushedFcn', @(~,~)this.onCopyComments);
            copyButton.Layout.Row = rAssessorButtons;
            copyButton.Layout.Column = 3;

            % Final decision, made by the validator
            rApproved = this.NumLLMInputs+5;
            this.Labels{rApproved} = formatLabel(this.UIGrid, 'Approval Status', ...
                rApproved, 1);
            this.Controls{rApproved} = formatDropDown(this.UIGrid, rApproved, 2, ...
                {'Select', 'Yes', 'No'}, 'Select');

            rGeneralComments = this.NumLLMInputs+6;
            this.Labels{rGeneralComments} = formatLabel(this.UIGrid, 'General Comments', ...
                rGeneralComments, 1);
            this.Controls{rGeneralComments} = formatTextArea(this.UIGrid, rGeneralComments, [2 4]);

            % Standard Submit and Discard buttons
            rStandardButtons = this.NumLLMInputs+7;
            createSubmitReviewButton(this, rStandardButtons, 3);
            createDiscardReviewButton(this, rStandardButtons, 4);
        end
    end

    methods (Access = private)
        function createCallAssessorButton(this, row, column)
            this.CallAssessorButton = uibutton(this.UIGrid, ...
                'Text', 'Assess', ...
                'ButtonPushedFcn', @this.onCallAssessor);
            this.CallAssessorButton.Layout.Row = row;
            this.CallAssessorButton.Layout.Column = column;
        end

        function createClearAssessmentButton(this, row, column)
            this.ClearAssessorOutputButton = uibutton(this.UIGrid, ...
                'Text', 'Clear', ...
                'ButtonPushedFcn', @(~,~)this.onClickClear);
            this.ClearAssessorOutputButton.Layout.Row = row;
            this.ClearAssessorOutputButton.Layout.Column = column;
        end

        function toggleCallAssessorButton(this, newValue)
            this.CallAssessorButton.Enable = newValue;
        end

        function onCopyComments(this)
            % Copy the suggested comments into the General Comments box
            this.Controls{this.NumLLMInputs+6}.Value = this.Controls{this.NumLLMInputs+3}.Value;
        end

        function onClickClear(this)
            % Clear form-specific widgets
            this.clearAssessorOutputs;
            this.toggleCallAssessorButton(true);
        end

        function onCallAssessor(this, ~, ~)
            % Format the assessor call
            mfiledir = fileparts(mfilename('fullpath'));
            template = fileread(fullfile(mfiledir, 'promptTemplate.txt'));
            for i = this.NumLLMInputs:-1:1
                questionnaireAnswers{i} = join(string(this.Controls{i}.Value));
            end
            message = sprintf(template, questionnaireAnswers{:});

            % Call the LLM
            response = generate(this.Assessor, message);

            % Process the assessor output, stripping a Markdown json code fence if present
            if startsWith(response, "```json")
                response = extractBetween(response, "```json", "```");
                response = response{1};
            end
            responseDecoded = jsondecode(response);
            suggestedPassFail = upper(responseDecoded.Status);
            comments = [ ...
                responseDecoded.Comments, ...
                newline, ...
                newline, ...
                'Feedback questions: ', ...
                newline];
            for i = 1:numel(responseDecoded.Questions)
                comments = [comments, ...
                    num2str(i), '. ', ...
                    responseDecoded.Questions{i}, ...
                    newline]; %#ok<AGROW>
            end

            % Write LLM outputs into the correct widgets
            this.Controls{this.NumLLMInputs+2}.Value = suggestedPassFail;
            this.Controls{this.NumLLMInputs+3}.Value = comments;

            % Disable 'Assess' until an input changes, and enable 'Clear'
            this.toggleCallAssessorButton(false);
            this.ClearAssessorOutputButton.Enable = true;
        end

        function clearAssessorOutputs(this)
            % Clear the Suggested approval status and Suggested comments boxes
            this.Controls{this.NumLLMInputs+2}.Value = "";
            this.Controls{this.NumLLMInputs+3}.Value = "";
            this.ClearAssessorOutputButton.Enable = false;
        end
    end
end
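To preview the layout outside Review Editor, you can construct the class directly, because its constructor accepts an optional parent container. This sketch assumes that Modelscape and the class file are on the MATLAB path. With the template's placeholder setupAssessor, which returns [], clicking Assess raises an error until you return a chat client from that method.

```matlab
% Create a window to host the form (the figure name is arbitrary)
fig = uifigure("Name", "LLM-Assisted Signoff Form Preview");

% Build the questionnaire, assessor buttons, and standard buttons inside it
form = LLMAssistedSignoffFormTemplate(fig);
```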