Signal Regression by Sweeping Hyperparameters

This example shows how to use a preconfigured template in the Experiment Manager app to set up a signal regression experiment involving time-frequency features. The goal of the experiment is to produce a model that can denoise electrocardiogram (ECG) test signals with similar characteristics to those in a training set. The experiment uses the PhysioNet ECG-ID database, which has 310 ECG records from 90 subjects. Each record contains a raw noisy ECG signal and a manually filtered clean ground truth version [1], [2].

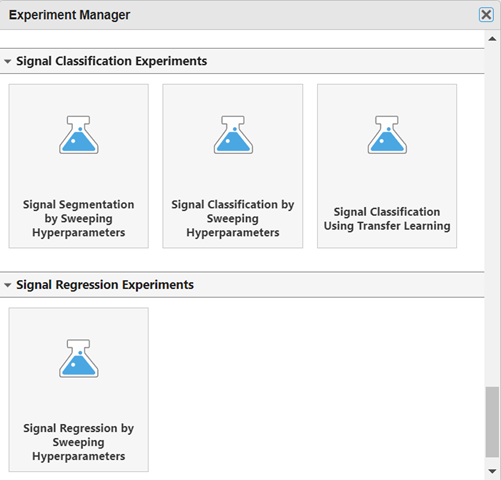

Experiment Manager (Deep Learning Toolbox) enables you to train networks with different hyperparameter combinations and compare results as you search for the specifications that best solve your problem. Signal Processing Toolbox™ provides preconfigured templates that you can use to quickly set up signal processing experiments. For more information about Experiment Manager templates, see Quickly Set Up Experiment Using Preconfigured Template (Deep Learning Toolbox).

Open Experiment

First, open the template. In the Experiment Manager toolstrip, click New and select Project. In the dialog box, click Blank Project, scroll to the Signal Regression Experiments section, click Signal Regression by Sweeping Hyperparameters, and optionally specify a name for the project folder.

Built-in training experiments consist of a description, an initialization function, a table of hyperparameters, a setup function, a collection of metric functions to evaluate the results of the experiment, and a set of supporting files.

The Description field contains a textual description of the experiment. For this example, the description is:

Signal Regression by Sweeping Hyperparameters

The Hyperparameters section specifies the strategy and hyperparameter values to use. This experiment follows the Exhaustive Sweep strategy, in which Experiment Manager trains the network using every combination of hyperparameter values specified in the hyperparameter table. In this case, the experiment has two hyperparameters:

- layerType specifies the first layer of the neural network. The options are:
  - "conv": Use a 1-D convolutional layer of the kind implemented in convolution1dLayer (Deep Learning Toolbox).
  - "modwt": Use a maximal overlap discrete wavelet transform layer of the kind implemented in modwtLayer (Wavelet Toolbox). The multiresolution analysis provided by this layer has the same resolution as the input signals, which is important in regression tasks.
  - "fullyConnected": Use a fully connected layer of the kind implemented in fullyConnectedLayer (Deep Learning Toolbox).
- initialLearnRate specifies the initial learning rate. If the learning rate is too low, then training can take a long time. If the learning rate is too high, then training might reach a suboptimal result or diverge. The options are 1e-3 and 1e-2.
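As a rough illustration of what an exhaustive sweep covers, the following sketch enumerates every combination of the two hyperparameters. It is not how Experiment Manager implements the sweep. The variable names sweep and numTrials are illustrative, and the trial order in the app might differ.

```matlab
% Hyperparameter values from the hyperparameter table.
layerType = ["conv" "modwt" "fullyConnected"];
initialLearnRate = [1e-3 1e-2];

% Build every (layerType, initialLearnRate) pair with ndgrid.
[layerIdx,rateIdx] = ndgrid(1:numel(layerType),1:numel(initialLearnRate));
sweep = table(reshape(layerType(layerIdx),[],1), ...
    reshape(initialLearnRate(rateIdx),[],1), ...
    VariableNames=["layerType" "initialLearnRate"]);

% 3 layer types x 2 learning rates = 6 trials.
numTrials = height(sweep);
disp(sweep)
```

Each row of sweep corresponds to one trial, that is, one call to the setup function with a different params structure.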

The Setup Function section specifies a function that configures the training data, network architecture, and training options for the experiment. To open the Setup Function in the MATLAB® Editor, click Edit. The input to the setup function is params, a structure with fields from the hyperparameter table. The function returns four outputs that the experiment passes to trainnet (Deep Learning Toolbox):

- dsTrain: A datastore that contains the training signals
- layers: A layer array that defines the neural network architecture
- lossFcn: A mean-squared-error loss function for regression tasks, returned as "mse"
- options: A trainingOptions object that contains training algorithm details
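To see how these outputs fit together, this sketch calls the setup function once, outside Experiment Manager, and passes its outputs to trainnet. It assumes that the setup function listed in the Setup Function section is saved on the MATLAB path as Experiment_setup.m and that the required toolboxes are installed. The hyperparameter values are just one row of the table. Running the code downloads the data set and trains for the full number of epochs, so it can take a while.

```matlab
% One combination of hyperparameter values, in the same form that
% Experiment Manager passes to the setup function.
params = struct(layerType="conv",initialLearnRate=1e-3);

% Assumption: Experiment_setup.m (listed later on this page) is on the path.
[dsTrain,layers,lossFcn,options] = Experiment_setup(params);

% Train a single network with the returned data, layers, loss, and options.
net = trainnet(dsTrain,layers,lossFcn,options);
```

Calling the setup function this way can be a convenient check that the data and network are configured as expected before you run the full sweep.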

In this example, the setup function has these sections:

Download and Load Training Data downloads the data files into the temporary directory for the system and creates a signal datastore that points to the data set.

Resize Data resizes the data to a specific input size, if required by your model or feature extraction methods.

Extract Features uses params.layerType to specify the first layer of the neural network. One option is the multiresolution analysis provided by the maximal overlap discrete wavelet transform.

Split Data splits the data into training and validation sets.

Read Data into Memory loads all your data into memory to speed up the training process, if your system has enough resources.

Define Network Architecture creates a neural network using an array of layers provided by Deep Learning Toolbox™. For more information, see Example Deep Learning Networks Architectures (Deep Learning Toolbox).

Define Training Hyperparameters calls trainingOptions (Deep Learning Toolbox) to set the hyperparameters to use when training the network.

Supporting Functions includes functionality for reading and resizing the signal data.

The Post-Training Custom Metrics section specifies optional functions that Experiment Manager evaluates each time it finishes training the network. This experiment does not include metric functions.

The Supporting Files section enables you to identify, add, or remove files required by your experiment. This experiment does not use supporting files.

Run Experiment

When you run the experiment, Experiment Manager repeatedly trains the network defined by the setup function. Each trial uses a different combination of hyperparameter values. By default, Experiment Manager runs one trial at a time. If you have Parallel Computing Toolbox™, you can run multiple trials at the same time or offload your experiment as a batch job in a cluster.

- To run one trial of the experiment at a time, on the Experiment Manager toolstrip, set Mode to Sequential and click Run.
- To run multiple trials at the same time, set Mode to Simultaneous and click Run. For more information, see Run Experiments in Parallel (Deep Learning Toolbox).
- To offload the experiment as a batch job, set Mode to Batch Sequential or Batch Simultaneous, specify your cluster and pool size, and click Run. For more information, see Offload Experiments as Batch Jobs to a Cluster (Deep Learning Toolbox).

A table of results displays the training RMSE, training loss, validation RMSE, and validation loss values for each trial.

Evaluate Results

To find the best result for your experiment, sort the table of results by validation RMSE:

Point to the Validation RMSE column.

Click the triangle ▼ icon.

Select Sort in Ascending Order.

The trial with the lowest validation RMSE appears at the top of the results table.

To record observations about the results of your experiment, add an annotation:

In the results table, right-click the Validation RMSE cell of the best trial.

Select Add Annotation.

In the Annotations pane, enter your observations in the text box.

For each experiment trial, Experiment Manager produces a training progress plot. To see the plot, select the trial and click the corresponding button in the Review Results gallery on the Experiment Manager toolstrip.

Close Experiment

In the Experiment Browser pane, right-click the experiment name and select Close Project. Experiment Manager closes the experiment and the results contained in the project.

Setup Function

Download and Load Training Data

If you intend to place the data files in a folder different from the temporary directory, replace tempdir with your folder name. To limit the run time, this experiment uses only 10 randomly chosen subjects. To use more subjects, increase this value.

function [dsTrain,layers,lossFcn,options] = Experiment_setup(params)

dataURL = "https://physionet.org/static/published-projects/ecgiddb/ecg-id-database-1.0.0.zip";
datasetFolder = fullfile(tempdir,"ecg-id-database-1.0.0");
if ~isfolder(datasetFolder)
    % Save the archive under an explicit file name, extract it, and delete it.
    zipFile = websave(fullfile(tempdir,"ecg-id-database-1.0.0.zip"),dataURL);
    unzip(zipFile,tempdir)
    delete(zipFile)
end

% Create a datastore that reads each .dat file with the helper function.
sds = signalDatastore(datasetFolder, ...
    IncludeSubfolders=true, ...
    FileExtensions=".dat", ...
    ReadFcn=@helperReadSignalData);

% Keep only the files that belong to 10 randomly chosen subjects.
rng("default")
subjectIds = unique(extract(sds.Files,"Person_"+digitsPattern));
idx = find(contains(sds.Files,subjectIds(randperm(numel(subjectIds),10))));
sdsSubset = subset(sds,idx);
numSignals = length(idx);

Resize Data

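If your model or feature extraction methods require no specific signal length, you can skip the resizing step. Because the code that follows uses sdsResize, replace this line with sdsResize = sdsSubset; instead of only commenting it out.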

sdsResize = transform(sdsSubset,@helperResizeData);

Extract Features

Use params.layerType to select the first layer of the neural network. You can choose a 1-D convolutional layer, a fully connected layer, or a maximal overlap discrete wavelet transform layer.

switch params.layerType
    case "conv"
        paramsLayer = convolution1dLayer(16,8,Padding="same");
    case "fullyConnected"
        paramsLayer = fullyConnectedLayer(8);
    case "modwt"
        paramsLayer = [
            modwtLayer(Level=8,IncludeLowpass=true, ...
                SelectedLevels=1:8,Wavelet="sym2")
            flattenLayer];
end

Split Data

The experiment uses the training set to train the model. Use 80% of the data for the training set.

The experiment uses the validation set to evaluate the performance of the trained model during hyperparameter tuning. Use the remaining 20% of the data for the validation set.

If you intend to evaluate the generalization performance of your finalized model, set aside some data for testing as well.

% Pass a test ratio of 0 explicitly so that the split is 80% training, 20% validation.
[trainIdx,validIdx] = dividerand(numSignals,0.8,0.2,0);
dsTrain = subset(sdsResize,trainIdx);
dsValid = subset(sdsResize,validIdx);
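If you also want a held-out test set, one option is to give dividerand a nonzero test ratio. This sketch uses a placeholder count of 100 signals and a 70/15/15 split. Both are assumptions chosen for illustration, not values from the experiment.

```matlab
numSignals = 100;   % placeholder; the setup function computes this from the datastore
rng("default")      % make the random split repeatable

% Randomly divide the indices into training, validation, and test sets.
[trainIdx,validIdx,testIdx] = dividerand(numSignals,0.7,0.15,0.15);

% Check that every signal lands in exactly one of the three sets.
allIdx = sort([trainIdx validIdx testIdx]);
isPartition = isequal(allIdx,1:numSignals);
```

In the setup function, you could then create a test datastore with subset(sdsResize,testIdx) and keep it out of training and hyperparameter tuning.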

Read Data into Memory

If the data does not fit into memory, set fitMemory to false.

fitMemory = true;
if fitMemory
    % Read the training pairs and wrap them in in-memory datastores.
    dataTrain = readall(dsTrain);
    XTrain = signalDatastore(dataTrain(:,1));
    TTrain = arrayDatastore(vertcat(dataTrain(:,2)),OutputType="same");
    dsTrain = combine(XTrain,TTrain);

    % Do the same for the validation pairs.
    dataValid = readall(dsValid);
    XValid = signalDatastore(dataValid(:,1));
    TValid = arrayDatastore(vertcat(dataValid(:,2)),OutputType="same");
    dsValid = combine(XValid,TValid);
end

Define Network Architecture

Create a small neural network using an array of layers. The first layer of the network, paramsLayer, is one of the hyperparameters that the experiment tests. For regression tasks, use mean-squared-error loss.

layers = [
    sequenceInputLayer(1,MinLength=1024)
    paramsLayer
    convolution1dLayer(16,40,Stride=1,Padding="same")
    eluLayer
    batchNormalizationLayer
    convolution1dLayer(16,20,Stride=1,Padding="same")
    eluLayer
    batchNormalizationLayer
    convolution1dLayer(16,20,Stride=1,Padding="same")
    eluLayer
    batchNormalizationLayer
    convolution1dLayer(16,20,Stride=1,Padding="same")
    eluLayer
    batchNormalizationLayer
    convolution1dLayer(16,40,Stride=1,Padding="same")
    eluLayer
    batchNormalizationLayer
    convolution1dLayer(16,1,Stride=1,Padding="same")
    eluLayer
    batchNormalizationLayer
    dropoutLayer(0.2)
    convolution1dLayer(16,1,Stride=1,Padding="same")
    ];

lossFcn = "mse";

Define Training Hyperparameters

- To set the batch size of the data for training, set MiniBatchSize to 50.
- To use the adaptive moment estimation (Adam) optimizer, specify the "adam" option.
- Because the training data has sequences with rows and columns corresponding to channels and time steps, respectively, specify the input data format as "CTB" (channel, time, batch).
- To specify the initial learning rate, use params.initialLearnRate.
- To use the parallel pool to read the transformed datastore, set PreprocessingEnvironment to "parallel". The code as listed uses "serial".

miniBatchSize = 50;
options = trainingOptions("adam", ...
    InputDataFormats="CTB", ...
    InitialLearnRate=params.initialLearnRate, ...
    MaxEpochs=50, ...
    LearnRateSchedule="piecewise", ...
    LearnRateDropFactor=0.9, ...
    LearnRateDropPeriod=10, ...
    MiniBatchSize=miniBatchSize, ...
    Shuffle="every-epoch", ...
    Plots="training-progress", ...
    Metrics="rmse", ...
    Verbose=false, ...
    ValidationData=dsValid, ...
    ExecutionEnvironment="auto", ...
    PreprocessingEnvironment="serial");

end
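With these options, the piecewise schedule multiplies the learning rate by 0.9 every 10 epochs. The following sketch tabulates the resulting rate per epoch for an initial value of 1e-3. The formula is a simplified reading of the schedule (one drop after each completed 10-epoch period), not code taken from the trainer, and the variable names are illustrative.

```matlab
initialLearnRate = 1e-3;   % one of the swept values
dropFactor = 0.9;          % LearnRateDropFactor
dropPeriod = 10;           % LearnRateDropPeriod
epochs = 1:50;             % MaxEpochs = 50

% Number of completed drop periods before each epoch.
numDrops = floor((epochs-1)/dropPeriod);
learnRate = initialLearnRate*dropFactor.^numDrops;

% Rate used in the last epoch: 1e-3 * 0.9^4.
finalLearnRate = learnRate(end);
```

Under this reading, the rate in the final epoch is about 66% of the initial value, so the schedule is a gentle decay, not an aggressive one.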

Supporting Functions

helperReadSignalData function. Read signal data from .dat files.

- filename is a string with the file location and name.
- dataOut is a cell array whose first cell contains noisy signals and whose second cell contains clean signals.
- infoOut is a structure array that stores the sample rate and other information that you might need.

function [dataOut,infoOut] = helperReadSignalData(filename)

% Read the two interleaved int16 channels from the .dat file.
fid = fopen(filename,"r");
data = fread(fid,[2 Inf],"int16=>single");
fclose(fid);

% Read the first two lines of the matching .hea header file.
fid = fopen(replace(filename,".dat",".hea"),"r");
header = textscan(fid,"%s%d%d%d",2,"Delimiter"," ");
fclose(fid);

% Scale both channels by the gain taken from the header.
gain = single(header{3}(2));
dataOut{1} = data(1,:)/gain;
dataOut{2} = data(2,:)/gain;
infoOut.SampleRate = header{3}(1);

end

helperResizeData function. Pad or truncate an input ECG signal into targetLength-sample segments.

- inputCell is a two-element cell array that contains an ECG signal and the ground-truth signal.
- outputCell is a two-column cell array that contains all the noisy signal segments resized to the same length and the corresponding ground-truth signal segments.

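function outputCell = helperResizeData(inputCell)

% Round the target length up to a multiple of 32 samples.
targetLength = ceil(10000/32)*32;

% Pad or truncate both signals along the time dimension.
outputCell = padsequences(inputCell,2, ...
    Length=targetLength, ...
    PaddingValue="symmetric", ...
    UniformOutput=false);

end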
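The target length evaluates to 10016 samples, the smallest multiple of 32 that is at least 10000. The sketch below applies the same padsequences call to two synthetic signal pairs, one shorter and one longer than the target, to show that both come out at the same length. The random signals, their lengths, and the resizePair handle are made up for illustration.

```matlab
targetLength = ceil(10000/32)*32;   % 313*32 = 10016

% Synthetic {noisy, clean} pairs standing in for ECG records.
shortPair = {randn(1,9000,"single"),randn(1,9000,"single")};
longPair = {randn(1,12000,"single"),randn(1,12000,"single")};

% Same call as in helperResizeData: pad or truncate along dimension 2 (time).
resizePair = @(c) padsequences(c,2, ...
    Length=targetLength, ...
    PaddingValue="symmetric", ...
    UniformOutput=false);

shortOut = resizePair(shortPair);   % padded by mirroring samples
longOut = resizePair(longPair);     % truncated

disp(size(shortOut{1}))
disp(size(longOut{1}))
```

Resizing the noisy signal and its ground truth with the same call keeps each pair aligned sample for sample.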

References

[1] Lugovaya, Tatiana. "Biometric Human Identification Based on Electrocardiogram." Master's thesis, Saint Petersburg Electrotechnical University, 2005.

[2] Goldberger, Ary L., Luis A. N. Amaral, Leon Glass, Jeffery M. Hausdorff, Plamen Ch. Ivanov, Roger G. Mark, Joseph E. Mietus, George B. Moody, Chung-Kang Peng, and H. Eugene Stanley. "PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals." Circulation. Vol. 101, No. 23, 2000, pp. e215–e220. doi: 10.1161/01.CIR.101.23.e215.

See Also

Apps

- Experiment Manager (Deep Learning Toolbox)

Objects

- modwtLayer (Wavelet Toolbox)
- signalDatastore
- signalMask

Functions

- trainnet (Deep Learning Toolbox)
- trainingOptions (Deep Learning Toolbox)