sinusoidalPositionEncodingLayer

Description

A sinusoidal position encoding layer maps position indices to vectors using sinusoidal operations. Use this layer in transformer neural networks to provide information about the position of the data in a sequence or image.

Creation

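Syntax

layer = sinusoidalPositionEncodingLayer(outputSize)

Description

layer = sinusoidalPositionEncodingLayer(outputSize) creates a sinusoidal position encoding layer and sets the OutputSize property.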

Properties

Sinusoidal Position Encoding

OutputSize

This property is read-only.

Number of channels in the layer output, specified as an even positive integer.

Data Types: single | double | int8 | int16 | int32 | int64 | uint8 | uint16 | uint32 | uint64

Positions

This property is read-only.

Positions in the input, specified as one of these values:

- "auto": For sequence or spatiotemporal input, use the temporal indices as positions, which is equivalent to using "temporal-indices". For one-dimensional image input, use the spatial indices as positions, which is equivalent to using "spatial-indices". For other input, use the input values as positions, which is equivalent to using "data-values".
- "temporal-indices": Use the temporal indices of the input as positions.
- "spatial-indices": Use the spatial indices of the input as positions.
- "data-values": Use the values in the input as positions.
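A minimal sketch of choosing a Positions value when you create the layer. It assumes that Positions, although read-only after creation, can be set with a name-value argument at construction time, in the same way that the Name argument is used in the network example; the variable names are illustrative only.

```matlab
% Sketch: create one layer per Positions option.
% Assumption: Positions can be set as a name-value argument when the
% layer is created; after creation the property is read-only.
outputSize = 64;

layerAuto     = sinusoidalPositionEncodingLayer(outputSize);
layerTemporal = sinusoidalPositionEncodingLayer(outputSize,Positions="temporal-indices");
layerSpatial  = sinusoidalPositionEncodingLayer(outputSize,Positions="spatial-indices");
layerValues   = sinusoidalPositionEncodingLayer(outputSize,Positions="data-values");

% Display the stored Positions value of each layer.
disp(layerAuto.Positions)
disp(layerTemporal.Positions)
disp(layerSpatial.Positions)
disp(layerValues.Positions)
```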

Layer

NumInputs

This property is read-only.

Number of inputs to the layer, stored as 1. This layer accepts a single input only.

Data Types: double

InputNames

This property is read-only.

Input names, stored as {'in'}. This layer accepts a single input only.

Data Types: cell

NumOutputs

This property is read-only.

Number of outputs from the layer, stored as 1. This layer has a single output only.

Data Types: double

OutputNames

This property is read-only.

Output names, stored as {'out'}. This layer has a single output only.

Data Types: cell
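As a quick check of the read-only Layer properties, this sketch creates a layer and queries them. The layer name "pos-enc" and the output size 64 are arbitrary choices for illustration; the values in the comments are the stored values described above.

```matlab
% Create a layer with an illustrative name and output size.
layer = sinusoidalPositionEncodingLayer(64,Name="pos-enc");

% Query the read-only input and output properties.
layer.NumInputs     % 1
layer.InputNames    % {'in'}
layer.NumOutputs    % 1
layer.OutputNames   % {'out'}
```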

Examples

Create a sinusoidal position encoding layer with an output size of 300.

```matlab
layer = sinusoidalPositionEncodingLayer(300)
```

```
layer = 
  SinusoidalPositionEncodingLayer with properties:

          Name: ''
    OutputSize: 300
     Positions: 'auto'

   Learnable Parameters
    No properties.

   State Parameters
    No properties.
```


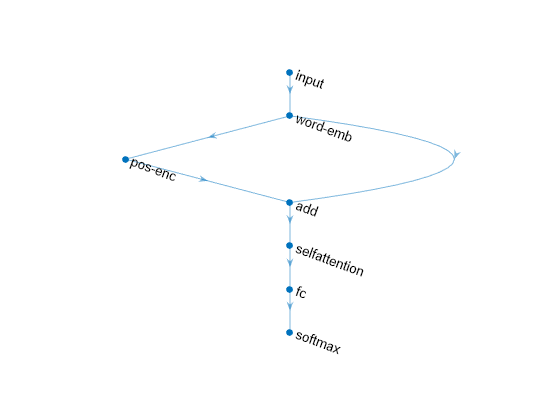

Create a neural network containing a sinusoidal position encoding layer.

```matlab
net = dlnetwork;

numChannels = 1;
embeddingOutputSize = 64;
numWords = 128;
numHeads = 4;
numKeyChannels = 4*embeddingOutputSize;

layers = [
    sequenceInputLayer(numChannels,Name="input")
    wordEmbeddingLayer(embeddingOutputSize,numWords,Name="word-emb")
    sinusoidalPositionEncodingLayer(embeddingOutputSize,Name="pos-enc")
    additionLayer(2,Name="add")
    selfAttentionLayer(numHeads,numKeyChannels,AttentionMask="causal")
    fullyConnectedLayer(numWords)
    softmaxLayer];

net = addLayers(net,layers);

% Connect the word embeddings to the second input of the addition layer
% so that the network adds the position encodings to the word embeddings.
net = connectLayers(net,"word-emb","add/in2");
```

View the neural network architecture.

```matlab
plot(net)
axis off
box off
```

Algorithms

A sinusoidal position encoding layer maps position indices to vectors using sinusoidal operations. The layer encodes position information of data for transformer neural networks.

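The output of the layer has the same number of dimensions as the input. In the output, each vector in position $p$ over the channel dimension is given by the following vector, as in [1]:

$$
\begin{bmatrix}
\sin\left(\frac{p}{\omega_1}\right) \\
\cos\left(\frac{p}{\omega_1}\right) \\
\vdots \\
\sin\left(\frac{p}{\omega_{d/2}}\right) \\
\cos\left(\frac{p}{\omega_{d/2}}\right)
\end{bmatrix}
$$

where $p$ is the position, $d$ is the encoding output size given by OutputSize, and $\omega_k$ is the wavelength given by:

$$
\omega_k = 10000^{2(k-1)/d}
$$

for $k = 1, \ldots, d/2$.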
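To make the formula concrete, this MATLAB sketch evaluates the encoding vectors directly for a few positions, without using the layer. It assumes the wavelengths omega_k = 10000^(2(k-1)/d) with sine and cosine terms interleaved along the channel dimension, following [1]. The variable names d, p, omega, and PE are hypothetical, and the sketch is not the layer's internal implementation.

```matlab
% Sketch of the sinusoidal encoding (assumed interleaved ordering, per [1]).
d = 8;                        % encoding size (OutputSize), must be even
p = 0:4;                      % positions to encode, as a row vector

k = (1:d/2)';                 % frequency index, k = 1,...,d/2
omega = 10000.^(2*(k-1)/d);   % wavelengths omega_k

% Implicit expansion gives a (d/2)-by-numel(p) matrix of p/omega_k.
angles = p ./ omega;

PE = zeros(d,numel(p));
PE(1:2:end,:) = sin(angles);  % channels 1,3,5,...: sin(p/omega_k)
PE(2:2:end,:) = cos(angles);  % channels 2,4,6,...: cos(p/omega_k)

disp(PE(:,1).')               % encoding of position p = 0
```

At p = 0, every sine term is 0 and every cosine term is 1, so the first column alternates between 0 and 1.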

When Positions is "auto", the layout of the output depends on the type of data:

- For sequence data X represented by a numChannels-by-numObservations-by-numTimeSteps array, where numChannels, numObservations, and numTimeSteps are the numbers of channels, observations, and time steps of the input, respectively, the output is an OutputSize-by-numObservations-by-numTimeSteps array Y, where each vector in Y(:,:,t) over the channel dimension is the encoding vector for position t.
- For 1-D image data X represented by a height-by-numChannels-by-numObservations array, where height, numChannels, and numObservations are the height, number of channels, and number of observations of the input images, respectively, the output is a height-by-OutputSize-by-numObservations array Y, where each vector in Y(i,:,:) over the channel dimension is the encoding vector for position i.
- For 2-D image sequence data X represented by a height-by-width-by-numChannels-by-numObservations-by-numTimeSteps array, where height and width are the height and width of the input image sequences, respectively, and numChannels, numObservations, and numTimeSteps are the numbers of channels, observations, and time steps of the input image sequences, respectively, the output is a height-by-width-by-OutputSize-by-numObservations-by-numTimeSteps array Y, where each vector in Y(:,:,:,:,t) over the channel dimension is the encoding vector for position t.
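A minimal sketch of the sequence-data case: it wraps the layer in a dlnetwork after a sequence input layer and checks the output layout. The sizes (3 channels, 2 observations, 10 time steps, OutputSize 8) are arbitrary. Because Positions is "auto", the encoding depends only on the time step, so both observations should receive the same vectors.

```matlab
% Sizes chosen for illustration only.
numChannels = 3;
outputSize = 8;
numObservations = 2;
numTimeSteps = 10;

% Minimal network: sequence input followed by the position encoding layer.
net = dlnetwork([
    sequenceInputLayer(numChannels)
    sinusoidalPositionEncodingLayer(outputSize)]);

% Random sequence data in "CBT" layout.
X = dlarray(rand(numChannels,numObservations,numTimeSteps),"CBT");
Y = predict(net,X);

size(Y)   % OutputSize-by-numObservations-by-numTimeSteps
dims(Y)

% With Positions="auto", the encoding depends only on the time step,
% so both observations should receive identical vectors.
Y1 = extractdata(Y(:,1,:));
Y2 = extractdata(Y(:,2,:));
maxDiff = max(abs(Y1(:) - Y2(:)))
```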

Layers in a layer array or layer graph pass data to subsequent layers as formatted dlarray objects. The format of a dlarray object is a string of characters in which each character describes the corresponding dimension of the data. The format consists of one or more of these characters:

- "S": Spatial
- "C": Channel
- "B": Batch
- "T": Time
- "U": Unspecified

For example, you can describe 2-D image data that is represented as a 4-D array, where the first two dimensions correspond to the spatial dimensions of the images, the third dimension corresponds to the channels of the images, and the fourth dimension corresponds to the batch dimension, as having the format "SSCB" (spatial, spatial, channel, batch).

You can interact with these dlarray objects in automatic differentiation workflows, such as those for developing a custom layer, using a functionLayer object, or using the forward and predict functions with dlnetwork objects.
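For instance, you can create formatted dlarray objects by passing a format string to dlarray, and you can query the format with dims. The array sizes in this sketch are arbitrary.

```matlab
% 2-D image data: 28-by-28 pixels, 3 channels, batch of 16 images.
X = dlarray(rand(28,28,3,16),"SSCB");
dims(X)    % returns 'SSCB'

% Sequence data: 5 channels, 4 observations, 20 time steps.
S = dlarray(rand(5,4,20),"CBT");
dims(S)    % returns 'CBT'
```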

This table shows the supported input formats of SinusoidalPositionEncodingLayer objects and the corresponding output format. If the software passes the output of the layer to a custom layer that does not inherit from the nnet.layer.Formattable class, or a FunctionLayer object with the Formattable property set to 0 (false), then the layer receives an unformatted dlarray object with dimensions ordered according to the formats in this table. The formats listed here are only a subset. The layer may support additional formats, such as formats with additional "S" (spatial) or "U" (unspecified) dimensions.

| Input Format | Positions | Output Format |
|---|---|---|
| "CB" (channel, batch) | | "CB" (channel, batch) |
| "SCB" (spatial, channel, batch) | | "SCB" (spatial, channel, batch) |
| "SSCB" (spatial, spatial, channel, batch) | "data-values" | "SSCB" (spatial, spatial, channel, batch) |
| "SSSCB" (spatial, spatial, spatial, channel, batch) | "data-values" | "SSSCB" (spatial, spatial, spatial, channel, batch) |
| "CBT" (channel, batch, time) | | "CBT" (channel, batch, time) |
| "SCBT" (spatial, channel, batch, time) | | "SCBT" (spatial, channel, batch, time) |
| "SSCBT" (spatial, spatial, channel, batch, time) | | "SSCBT" (spatial, spatial, channel, batch, time) |
| "SSSCBT" (spatial, spatial, spatial, channel, batch, time) | | "SSSCBT" (spatial, spatial, spatial, channel, batch, time) |
| "SC" (spatial, channel) | | "SC" (spatial, channel) |
| "SSC" (spatial, spatial, channel) | "data-values" | "SSC" (spatial, spatial, channel) |
| "SSSC" (spatial, spatial, spatial, channel) | "data-values" | "SSSC" (spatial, spatial, spatial, channel) |
| "SB" (spatial, batch) | | "SCB" (spatial, channel, batch) |
| "SSB" (spatial, spatial, batch) | "data-values" | "SSCB" (spatial, spatial, channel, batch) |
| "SSSB" (spatial, spatial, spatial, batch) | "data-values" | "SSSCB" (spatial, spatial, spatial, channel, batch) |
| "SS" (spatial, spatial) | "data-values" | "SSC" (spatial, spatial, channel) |
| "SSS" (spatial, spatial, spatial) | "data-values" | "SSSC" (spatial, spatial, spatial, channel) |
| "SU" (spatial, unspecified) | | "SCU" (spatial, channel, unspecified) |
| "BU" (batch, unspecified) | | "CBU" (channel, batch, unspecified) |
| "UU" (unspecified, unspecified) | | "CUU" (channel, unspecified, unspecified) |
| "UUU" (unspecified, unspecified, unspecified) | | "CUUU" (channel, unspecified, unspecified, unspecified) |
| "UUUU" (unspecified, unspecified, unspecified, unspecified) | | "CUUUU" (channel, unspecified, unspecified, unspecified, unspecified) |
| "UUUUU" (unspecified, unspecified, unspecified, unspecified, unspecified) | | "CUUUUU" (channel, unspecified, unspecified, unspecified, unspecified, unspecified) |
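As a sketch of the "CB" row of this table, the following passes feature data through a small network that contains the layer. It assumes a featureInputLayer with one channel, so that with Positions set to "auto" each input value is used as a position; the values 0 to 4 and OutputSize 8 are illustrative. At p = 0 every sine term is 0 and every cosine term is 1, so the first output column should sum to OutputSize/2 whatever the channel ordering.

```matlab
% Minimal network for "CB" data with a single channel.
outputSize = 8;
net = dlnetwork([
    featureInputLayer(1)
    sinusoidalPositionEncodingLayer(outputSize)]);

% Five observations whose values serve as positions (Positions="auto").
X = dlarray([0 1 2 3 4],"CB");
Y = predict(net,X);

size(Y)   % OutputSize-by-numObservations
dims(Y)

% At p = 0 each sine term is 0 and each cosine term is 1,
% so the first column should sum to outputSize/2.
colSum = sum(extractdata(Y(:,1)))
```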

In dlnetwork objects, SinusoidalPositionEncodingLayer objects also support these input and output format combinations.

| Input Format | Positions | Output Format |
|---|---|---|
| "CT" (channel, time) | | "CT" (channel, time) |
| "SCT" (spatial, channel, time) | | "SCT" (spatial, channel, time) |
| "SSCT" (spatial, spatial, channel, time) | | "SSCT" (spatial, spatial, channel, time) |
| "SSSCT" (spatial, spatial, spatial, channel, time) | | "SSSCT" (spatial, spatial, spatial, channel, time) |
| "BT" (batch, time) | | "CBT" (channel, batch, time) |
| "SBT" (spatial, batch, time) | | "SCBT" (spatial, channel, batch, time) |
| "SSBT" (spatial, spatial, batch, time) | | "SSCBT" (spatial, spatial, channel, batch, time) |
| "SSSBT" (spatial, spatial, spatial, batch, time) | | "SSSCBT" (spatial, spatial, spatial, channel, batch, time) |
| "ST" (spatial, time) | | "SCT" (spatial, channel, time) |
| "SST" (spatial, spatial, time) | | "SSCT" (spatial, spatial, channel, time) |
| "SSST" (spatial, spatial, spatial, time) | | "SSSCT" (spatial, spatial, spatial, channel, time) |
| "TU" (time, unspecified) | | "CTU" (channel, time, unspecified) |
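The "CT" row can be exercised without an input layer by initializing a dlnetwork from example input data with the dlnetwork(layers,X) syntax. This is a sketch that assumes that syntax accepts the encoding layer on its own together with unbatched "CT" data, as the table states; the sizes (3 channels, 10 time steps, OutputSize 8) are arbitrary.

```matlab
% Unbatched sequence: 3 channels, 10 time steps, no "B" dimension.
outputSize = 8;
X = dlarray(rand(3,10),"CT");

% Initialize a network that has no input layer by passing example input.
layer = sinusoidalPositionEncodingLayer(outputSize);
net = dlnetwork(layer,X);

Y = predict(net,X);
size(Y)   % OutputSize-by-numTimeSteps
dims(Y)
```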

References

[1] Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. "Attention is all you need." In Advances in Neural Information Processing Systems, Vol. 30. Curran Associates, Inc., 2017. https://papers.nips.cc/paper/7181-attention-is-all-you-need.

Version History

Introduced in R2023b
