Mars Sample Fetch Rover: Autonomous, Robotic Sample Fetching

Raul Arribas, Airbus Defence and Space

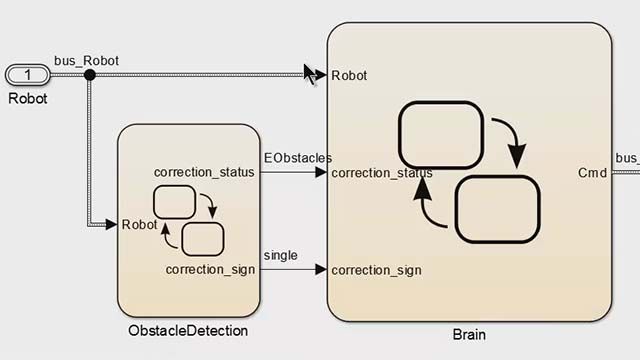

As part of the joint ESA-NASA Mars Sample Return mission, Airbus Defence and Space UK is developing the Mars Sample Fetch Rover. The rover's role is to drive autonomously and pick up samples left on the Martian surface by the Perseverance rover. This presentation focuses on the robotics at the front of the rover (the camera systems and robotic arm) that are used to identify, grasp, and stow these samples without a human in the loop. The system is designed in MATLAB® and Simulink® and then translated into C code for the flight software using automatic code generation (autocode) packages.

Published: 28 May 2022