Space Industry Safety Regulation and Software Engineering Standards

Lewis McCluskey, Southern Launch

Nathan Drummond, Southern Launch

Overview

Safety and risk management are paramount considerations for companies developing systems for launch vehicles and spacecraft, not only to ensure a safe launch and return, but also to comply with certifications in line with engineering standards such as NPR 7150.2 (NASA-Software Engineering Requirements) and ECSS-E-ST-40C (ECSS-Software Engineering).

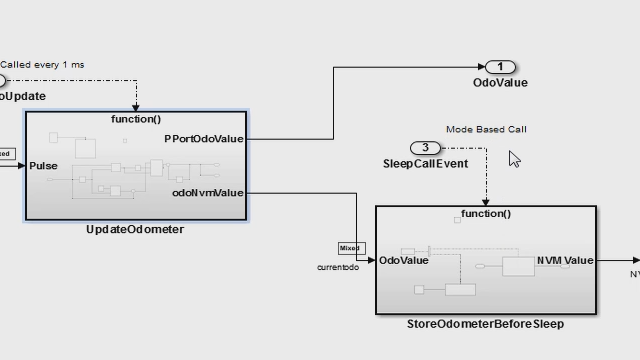

This presentation provides an overview of applying Model-Based Design to develop space software based on space software engineering standards, as well as a case study from Australian start-up Southern Launch, which has developed Rocket Flight Safety Analysis tools and Space System applications using built-in MATLAB functionality. The goal of this presentation is to provide sufficient background on the overall process, starting from requirements and following the development process all the way through verification of the object code on a target processor.

Highlights

- Learn the overall software development process in the context of space software engineering standards.

- Discover, through a case study, how Model-Based Design enables space software development from requirements to verification of the object code on a target processor.

- Hear how Southern Launch developed their Rocket Flight Safety Analysis Tool to assess danger areas, compute the expected number of casualties, and generate exclusion zones for a launch.

About the Presenters

Lewis McCluskey is a senior launch engineer at Southern Launch and has led the development of Southern Launch’s rocket range safety analysis process. Lewis works alongside some of the largest rocket manufacturers in the world to understand how their rockets could be safely launched in Australia. He has developed a strong understanding of rocket technologies, and how safe rocket launches can be carried out. Lewis studied Aerospace Engineering at the University of Adelaide, graduating in 2019.

Nathan Drummond graduated with a degree in aerospace engineering and business management in 2021 from RMIT University, Melbourne. Nathan gained practical experience in rocket design in his role as the aerostructural team lead of a university rocketry club that competed in the 2019 Australian Universities Rocket Competition (AURC). Nathan led the winning team that successfully launched a sounding rocket with a scientific payload onboard to 30,000 ft and recovered it intact. To further develop his skills, Nathan spent time abroad in Germany, where he gained experience as a thermal engineer working on the Ariane series of launch vehicles. Nathan has since joined the Southern Launch team as a Launch Engineer and has a keen interest in developing and shaping the future of Australian space launch.

Alex Shin is a Principal Application Engineer at MathWorks. He specialises in helping customers in the areas of simulation, verification, and automatic code generation, primarily for commercial projects. His recent work includes defining simulation and verification processes and implementing Model-Based Design tools in large organisations. Mr. Shin received a Bachelor’s degree in mechatronics engineering from the University of New South Wales.

Recorded: 30 Mar 2022