What's New in MATLAB for Finance Professionals

From the series: Using MATLAB in Finance 2020

MATLAB® has significant capabilities that are relevant to the financial services industry. See the tools in the MATLAB development environment that you can use to bring your ideas to reality. Topics include bringing data into MATLAB, making sense of your data, newly supported financial analysis algorithms and trading capabilities, advanced analytics, using a consolidated platform for modeling, developing code, effective collaboration techniques, and sharing your models.

Highlights:

- Creating and backtesting investment strategies in MATLAB

- Using MATLAB for interpretable machine learning

- Accessing MATLAB through your web browser

- Deploying MATLAB apps and models to systems both on-premise and in the cloud

Published: 28 Jul 2021

Hi. This is Bob Meindl from MathWorks, and I want to thank you for joining us for a session entitled What's New in MATLAB for Finance Professionals? Just a quick housekeeping item. If you have any questions, please use the Q&A window and not the chat window.

We don't look at the chat window quite as much, so please put them in the Q&A. If we don't get to your question in line during the meeting, we'll have some time at the end. If we don't get to it then, we'll make sure and get back to you. So with that, what I would like to do is turn it over to Greg McGean, the account manager for financial services. Greg? Greg, I think you might be muted.

Oh, there we go. Good afternoon, everyone. My name is Greg McGean. I'm one of the account managers for the finance industry in North America. Bob went through most of the logistics, but I just wanted to add that this presentation is about an hour and many of these topics can be expanded upon. So if there are some topics you might be interested in and maybe want to take a deeper dive, please let me know. I'd be happy to forward your information, your questions to the appropriate account manager so they can follow up. So thanks again and enjoy.

Steve, take it away.

Awesome. So thank you so much, guys. So my name is Steve Notley. And I'm an application engineer at The MathWorks, and I specialize in helping our customers in the financial services industry. And so what I do is really talk to the customers about what kinds of problems they're working on, what kind of interesting applications they have for our tools, and help them find a way that they can use a combination of our tools to really address those pressing problems in industry.

So today what we're going to be talking about is a lot of the new features in MATLAB over the last couple of releases up to the current release of R2020a, and we're going to be talking about how those new features can help address some of these pressing problems that we've seen in the finance space here. And so as Greg said, this is kind of a little bit of a sampler of a lot of different topics. So if there's anything that you're really interested in or you think is pertinent for you, definitely contact us and we're happy to set up a time to talk in more detail about any one of these topics. So definitely don't be afraid to reach out. And with that, let's get into it.

So our agenda for today is going to be some of this new functionality grouped where we think they provide value for you as you're addressing some of these pressing problems. And so today we're going to start with, how can you develop as fast as you think? And so the crux of this question really is something that motivates a lot of what we do at MathWorks and a lot of how we engineer our products, and that is the central question of how can I get my idea from just being an idea to being a prototype with as little friction as possible? We want to make sure that the time between you waking up with a brilliant idea in your head and you having a prototype that you can show to people is as short as possible.

And so the way that we are really addressing this and helping you to lower that barrier is by adding a lot of new functionality to our development environment to make sure that it's an easy, seamless experience when you're expressing all of these ideas. And so rather than stay in slides for this, I think that this is something that I'd rather show in MATLAB itself. And so you can see MATLAB here, and what I have here is our live editor. And so those of you who maybe haven't used MATLAB in a little while and are used to authoring .m files, this might look a little bit different than when you saw it last. And so our live editor is the environment chiefly used for authoring what we call live scripts, and those are these .mlx files. As you can see, I have one open here.

And so the live editor and live scripts have been around for quite a few releases now. Probably five years or so. But we've been adding a lot of functionality, really making it robust, and expanding on what's available to make this development process as seamless as possible for you. And I definitely recommend checking it out, as the amount of functionality has gotten so great in our live scripts that I really don't use regular .m files for the vast majority of my development anymore. It's just easier to work in an environment like this.

And so what our live editor really is and what live scripts really are is more of a notebook-based environment for you to express your ideas. And so I'm going to step through an example script here to show you what the capabilities are. So I'm going to run this first of all, and then I'll kind of explain the different features as they come up.

So what we have here is a script to perform kind of a basic portfolio optimization. In terms of how this looks, you can see that the script has formatted text. It has things like a table of contents, and it has sections of code that can be run independently. And you're going to see here the output for running these sections of code appears in line.

So for this first step for our portfolio optimization, we're bringing data in from an Excel sheet that we have. So we have data for prices from a bunch of different index funds from various countries. And you can see that output in line here.

But something that sets live scripts apart is that the output isn't just static in line. So I can fully interact with this, I can scroll, I can right click on this, copy the output, or convert it to data types right here in my script. So these aren't just static outputs. These are things that I can interact with in order to actually change the functionality of this script here.

And as a next step we go ahead and plot these price signals, and we can see for our plots it's the same way. This isn't static. I can go ahead and zoom in on a section of this if I wanted to. And I can see that as I do that, the live editor actually suggests the snippets of code that I need here.

And I can choose to actually update this code and push those changes right back into the code in order to have it show up this way the next time that I run this section. So I can really interact with this. I can manipulate these outputs in any way that I see fit.

So going ahead with my portfolio optimization example, I calculate my returns, I get my mean and covariance, and then I go ahead and use our portfolio framework to set up the actual optimization. And I'll talk a little bit more about the portfolio framework later on. And once I've done all that basic stuff, I can go ahead and add our group constraints.

So we're going to group our assets into three groups, and you can see here that I have these little sliders that correspond to these bounds here. And so of course I can just program these normally, but one of our newer features in our live editor is the ability to insert controls like this into your scripts. And these can be found right here at the top of the screen, where you can see there's a little section that has controls. And if I drop that down, you can see I have a whole bunch of different things that I can insert here, these little controls to make my scripts interactive. And so the rest of the script goes on to plot this efficient frontier, show my optimal portfolio weights that sit on that frontier, and then finally choose an optimal portfolio based on the maximum Sharpe ratio.
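For readers following along without the demo script, here is a minimal sketch of the same steps in code. It assumes Financial Toolbox is installed, and it uses synthetic prices in place of the Excel sheet from the demo. The group layout, the 10% and 50% bounds, and all variable names are illustrative assumptions, not the values used in the session.

```matlab
% Sketch of the portfolio workflow described above (assumes Financial Toolbox).
% Prices are synthetic; the demo read index fund prices from an Excel sheet.
rng(0);                                          % reproducible synthetic data
nDays = 500; nAssets = 6;
dailyRet = 0.001 + 0.01*randn(nDays, nAssets);   % hypothetical daily returns
prices = 100*cumprod(1 + dailyRet);              % hypothetical price series

returns = tick2ret(prices);                      % prices -> simple returns
mu = mean(returns);                              % mean return per asset
Sigma = cov(returns);                            % covariance of returns

p = Portfolio('AssetMean', mu, 'AssetCovar', Sigma);
p = setDefaultConstraints(p);                    % long-only, fully invested

% Three groups of two assets each; every group must hold between 10% and 50%.
% In the live script these bounds are what the sliders would control.
groups = [1 1 0 0 0 0; 0 0 1 1 0 0; 0 0 0 0 1 1];
lowerGroup = [0.1; 0.1; 0.1];
upperGroup = [0.5; 0.5; 0.5];
p = setGroups(p, groups, lowerGroup, upperGroup);

wFrontier = estimateFrontier(p, 10);             % 10 portfolios on the efficient frontier
wSharpe = estimateMaxSharpeRatio(p);             % weights of the maximum Sharpe ratio portfolio
plotFrontier(p, 10);                             % plot the efficient frontier
```

Changing `lowerGroup` and `upperGroup` and rerunning the last few lines is the scripted equivalent of moving the sliders.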

So where something like these live controls really come in handy, I think, is if I want to do something like pass this over to my validation team so that they can explore different inputs for my algorithm and see how this changes the output space. So I can go ahead and actually hide the code here to make this really just a fully-- more like a lightweight app out of my notebook, and I can play with these constraints and see how my efficient frontier updates, how my optimal portfolio options update, and how all of this reacts in line. And so this is a really powerful way to enable people to kind of easily explore your code as well as easily document your train of thought and the steps that you took as you're going through an analysis.

So alongside our controls, you may have noticed there is another kind of new section up here called tasks. And so live tasks are something that we've incorporated into recent versions of MATLAB. They're a newer feature for us. And the idea here is that if I click on these, you can see that I have tasks for a lot of little components that I probably do pretty often. Things like cleaning missing data or joining tables.

And the idea behind these is that we found that people were spending a lot of time doing these little things that are kind of not unique to a workflow. Things like anytime you're doing data exploration, you probably start with doing things like cleaning missing data and cleaning outlier data. And so rather than spending time rewriting that code every time or having the same 10 or 15 lines of code that you take around with you to all these different projects, we want you to basically be able to wave your hand and say, make this thing happen. It's baseline enough that we should be able to do that.

And so I'm going to open another script here that deals with load forecasting so you can see some of these at work. So I'm going to load in some data around the load that we see on the New York ISO electrical grid. The type of data that you might use for something like energy pricing or just straight load forecasting.

And so I load in this data set, and like I said before, at the beginning of these kinds of data analysis workflows I probably want to clean my data or remove my outliers. And so we can go ahead and use live tasks for that. So you can see one that I've inserted here around cleaning missing data, and all I have to do is select my input data, specify any options that I want. So here I have it to fill missing data. We can say we want to do this linear interpolation, and this will go ahead and run this value, give me a nice plot to show me exactly what it did, and return my clean data, as well.

And you can see here in this next section we also have one for outlier data that does the same thing. So I want to interpolate my outliers using linear interpolation, and we can get another nice visual of what has been corrected for us. And if I at any time want to see the code that actually underpins these, I always have the option to show controls and code as well so that I can get a sense of what does this actually do under the hood, and what goes into the visual, and things like that. So I definitely recommend checking out live tasks to kind of automate some parts of these workflows. Let me switch back to slides here.
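The code behind those two tasks is close to the following sketch, which uses the base MATLAB functions `fillmissing` and `filloutliers` with linear interpolation. The short load series is made up for illustration, and the outlier detection uses the function's default method, which may differ from the option chosen in the demo.

```matlab
% Sketch of what the Clean Missing Data and Clean Outlier Data tasks do.
% loadMW is a hypothetical load series: NaN marks missing values, 300 is a spike.
loadMW = [50 52 NaN 55 57 300 60 NaN 63 65]';

filled = fillmissing(loadMW, 'linear');      % interpolate across the NaN gaps
cleaned = filloutliers(filled, 'linear');    % detect outliers (default method) and interpolate over them

disp(table(loadMW, filled, cleaned));        % compare raw, filled, and cleaned values
```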

So in the same way that we have live tasks to automate portions of a lot of workflows, we also have these things called apps in MATLAB that have been around for quite a while that are really designed to kind of do the same thing, but for entire specific workflows. So things like machine learning or database access automated end to end. And I'm going to be showing a couple of these in demonstrations later on as part of other categories, as well.

So moving on to our data. And so data is essential to a lot of the kinds of problems that we solve today, and we always find people asking a question that looks a lot like this. How can I spend more time adding value and less time juggling all of my data?

And what do we mean by juggling data? We mean how am I going to get it into my environment to do my computation on it? How am I going to format and what data types am I going to use to express these? Things like that. We don't want that to be a part of something that you have to really spend a lot of time worrying about. We want you to spend that time adding value, doing your job rather than worrying about how you're going to handle these kind of ends of the workflow here.

And MATLAB has a lot of options for getting data into it no matter how you have it stored. So if you have data in a file, or in many files, we have an import tool that allows you to bring this in with one click essentially. It also allows you to generate code to read similar files. So you can have MATLAB automatically write kind of a reader function for files like this.

If you have data in a database, we have the ability to author SQL queries and execute them directly in line in MATLAB code. And we also have an app that allows you to interactively manipulate these tables, and it'll construct SQL queries for you automatically. And you can execute them that way, as well. And if you have NoSQL databases, if you have like a Hadoop database or another NoSQL framework, we have support for a variety of those, as well. So definitely contact us about that, too.

And finally, if you need live data, MATLAB can connect to a host of live data feeds. So like Bloomberg, FactSet, Reuters, whatever that you're using, we have support through a bunch of partner companies that allow you to get these data feeds directly into MATLAB. And so once you've gotten your data in, it's important to express it in the right way. So going over a couple of data types that are not brand new, but that have come out in the last few releases and that we definitely recommend checking out if you're not using them already.

And so one of these is tables, which is really good for mixed type or tabular format data. And it gives you a lot of flexibility when it comes to indexing into and organizing your data. Similarly in finance, obviously timestamps are very important. And our datetime data type allows you to have a lot of functionality built on top of these timestamps.

So this is a really rich and expressive data type that allows you to do a lot of nice things like arithmetic with timestamps. So an example of that would be subtracting two timestamps to figure out how much time has passed between them. They also automatically account for things like time zones and daylight saving time, and just make working with timestamps a little bit easier.
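As a small illustration of that timestamp arithmetic, the sketch below subtracts two zoned `datetime` values that straddle the 2020 US daylight saving change. The specific dates and the time zones are chosen only for the example.

```matlab
% Elapsed time between a Friday close and a Monday open in New York,
% across the daylight saving change on 8 March 2020.
t1 = datetime(2020, 3, 6, 16, 0, 0, 'TimeZone', 'America/New_York');
t2 = datetime(2020, 3, 9, 9, 30, 0, 'TimeZone', 'America/New_York');

elapsed = t2 - t1;                 % a duration; the lost hour is accounted for
hoursElapsed = hours(elapsed);     % numeric hours (one less than the wall-clock difference)

% The same instant viewed in another time zone.
t1London = t1;
t1London.TimeZone = 'Europe/London';
disp(hoursElapsed);
disp(t1London);
```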

And then kind of combining these two is timetables, and this has really been-- had a big impact when working with financial data and working on financial problems. And it kind of, like I said, it combines kind of the best of these other two. So it has all of that flexibility of tables, and it's governed by timestamp data and these datetime values that serve as kind of the primary key for the data that's in this table. And so we build a lot of powerful functionality on top of this, like the ability to aggregate and re-time timestamp data.

So if you have daily data and you want to aggregate all the values up to, say, a monthly value, that is very easy to do with timetables. It's also easy to do things like synchronize two different timetables and retime them so that they have a common sample time. Things like that. So if you aren't using these data types, I'd definitely recommend checking them out as they make life a lot easier when you're dealing with financial data.
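A minimal sketch of those two operations, using `retime` and `synchronize` on small made-up timetables. The variable names and values are assumptions for illustration only.

```matlab
% Daily prices for 1 Jan to 30 Mar 2020 (synthetic values).
dates = datetime(2020, 1, 1) + caldays(0:89)';
price = 100 + (1:90)';
daily = timetable(dates, price, 'VariableNames', {'Price'});

% Aggregate daily values up to one mean value per month.
monthly = retime(daily, 'monthly', 'mean');

% A second, weekly series (synthetic) brought onto a common daily time base,
% carrying the last known value forward.
weekDates = datetime(2020, 1, 1) + calweeks(0:12)';
rate = linspace(1.5, 1.0, 13)';
weekly = timetable(weekDates, rate, 'VariableNames', {'Rate'});
both = synchronize(daily, weekly, 'daily', 'previous');

disp(monthly);
disp(head(both));
```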

So another challenge that we see becoming increasingly common in the financial services space is dealing with big data. These days, a lot of data is often the starting point for doing types of complex analysis. And so if you have data that doesn't fit into memory, sometimes that can be a lot of work. In some languages, that means writing your own chunking algorithm, deciding how you're going to bring sections of the data into memory, deciding how you're going to change your algorithms to make it safe to operate on smaller sections at a time. And it can really transform a mundane task into something that is a little bit more complex.

In MATLAB we try to abstract that away from you and take care of this under the hood so that you don't have to worry so much about the particulars here. So in MATLAB, the change between one file and 100 files is really these three lines of code. We have data types set up such that you can really keep all of your analysis, all of the core functionality the same, and just change a little bit how you set up and ultimately gather your data into memory in order to make this flow fluidly with data of really any size. And so I can jump into MATLAB and give you an idea of what it feels like to work with big data in MATLAB.

So we'll open up this example script here. And so you can see what I have here, the first command that I have that's a little bit different that's part of this big data workflow is this datastore command. And now what this essentially does is create a pointer to our repository of data. So for this example, I'm going to be doing this with just one CSV file. But this could be any number of CSV files. I could throw a star in here and it would treat all CSV files of this pattern as one big data repository.

Similarly, I could have this point at a database, or I could have it point at an S3 bucket sitting somewhere in the cloud. It's really flexible in terms of what you can point at and use as a data repository. And so this is the first step-- creating that pointer with that datastore is the first step in this big data workflow.

And so I can go ahead and create that. And we can see I can get an idea of some of the settings here, and I can preview what my data looks like here. And then I can do things like set what columns of this table I actually want to operate on to pare it down a little bit, and then I can actually create my tall table data type. And so that's done using this tall command. This is the second command for this big data workflow.

And this is kind of where the magic happens, because the output of this is what's called a tall table. But I can really treat this just like a normal table. I can use a lot of the same functionality. I can do all the things that I would expect to be able to do on a table that is in memory. I can do all of those things just as easily in a tall table scenario.

And so I can see the output here is an m by 6 tall table. And so it's m because we don't exactly know how tall it is, right? That's what makes it-- it's not in memory yet, and that's how we're dealing with this big data.

And so from there, like I said, I can do a lot of the same functionality. So I can copy another variable and create kind of a subset of this as one of the columns. I can do things like compute my mean and standard deviation and do arithmetic with the outputs of these. And now you'll notice the outputs look a little bit different. They're just these question marks here because the computation is deferred until you bring it into data-- into memory, sorry.

Finally, once I'm done, I can use our last keyword that's part of this, which is this gather command. And now this is where MATLAB really does some legwork to make sure that your code is performant on big data, as well. Because what this is going to do is look at all of the commands that you've queued up to run on your tall table. It's going to map them to figure out, how can I perform all of these operations with as few passes through the data as possible? And then it's going to use parallel computing to parallelize that process whenever possible as it's running all those computations and bringing that data into memory.

And this can lead to some really significant performance gains when you're dealing with data of a really large magnitude here. So running this, you can see it will tell me what parallel resources it's using, it'll tell me how many passes it's going to need, and it'll give me a summary of how quickly it completes these. And finally, give me the results kind of as I would expect here.

And so all of this is, like I said before, things that I can do on a regular table. And I can really do a lot of these operations without having to really think about the fact that my data might be out of memory. This code has now been made basically big data safe and I don't have to worry too much about the magnitude of data that underpins this.
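The three commands in that workflow, `datastore`, `tall`, and `gather`, fit together roughly as in this sketch. It writes a small CSV file to a temporary folder so that it is self-contained; the file name and column names are hypothetical, and the demo used a different data set.

```matlab
% Create a small CSV so the sketch runs on its own. In practice this would be
% one or many large files, and a wildcard such as 'trades_*.csv' also works.
file = fullfile(tempdir, 'tall_demo_trades.csv');
T = table((1:1000)', 100 + mod((1:1000)', 7), 'VariableNames', {'TradeID', 'Price'});
writetable(T, file);

ds = datastore(file);                    % step 1: a pointer to the data, nothing loaded yet
ds.SelectedVariableNames = {'Price'};    % keep only the columns of interest
tt = tall(ds);                           % step 2: tall table with an unknown number of rows

% These look like ordinary table operations, but evaluation is deferred.
avgPrice = mean(tt.Price);
stdPrice = std(tt.Price);
zMax = max((tt.Price - avgPrice) ./ stdPrice);

% Step 3: gather evaluates everything queued above and returns in-memory results.
[avgPrice, stdPrice, zMax] = gather(avgPrice, stdPrice, zMax);
disp([avgPrice stdPrice zMax]);
```

Gathering several results in one call lets MATLAB plan the passes through the data together rather than reading the file once per result.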

And so those are our changes in terms of the functionality that we've added for dealing with big data. So let's talk a little bit about some of our finance-specific functionality that we've added here. So a lot of our finance functionality fits into a few different toolboxes that are meant for kind of specific areas of computational finance.

And so the general question that we see a lot here is, I have these financial tasks that are commonplace-- these things that I do every day-- and I want to do them in a way that's fast and in a way that I can potentially automate, as well. And so one of our products for this is our Financial Toolbox, which has a lot of functionality around asset allocation as well as some basic risk management and pricing functionality, among other things. And so one of our recent updates here is the ability to do constrained portfolio optimization with a few additional constraints that we've added as of late, such as the ability to set the minimum and maximum number of assets. And we've expanded these integrality constraints to allow for use in mean-variance, MAD, and CVaR portfolio optimization. And the use of this framework is what we saw in that first example doing portfolio optimization.
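To make the minimum and maximum number of assets constraint concrete, here is a hedged sketch using the `Portfolio` object's `setBounds` and `setMinMaxNumAssets` functions from Financial Toolbox. The moments, the 10% to 60% conditional bounds, and the limit of two to three assets are invented for illustration.

```matlab
% Sketch of a cardinality-constrained mean-variance problem (assumes Financial Toolbox).
mu = [0.05 0.07 0.09 0.11 0.13];                 % hypothetical expected returns
Sigma = diag([0.01 0.02 0.04 0.06 0.09]);        % hypothetical (uncorrelated) covariance

p = Portfolio('AssetMean', mu, 'AssetCovar', Sigma);
p = setDefaultConstraints(p);                    % long-only, fully invested

% Conditional bounds: each asset is either not held or held between 10% and 60%.
p = setBounds(p, 0.10, 0.60, 'BoundType', 'Conditional');

% Hold at least 2 and at most 3 of the 5 assets.
p = setMinMaxNumAssets(p, 2, 3);

w = estimateFrontier(p, 5);                      % 5 portfolios along the frontier
numHeld = sum(w > 1e-6, 1);                      % assets held in each portfolio
disp(numHeld);
```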

Along with that, we have a bunch of new simulation methods and some new methods for default probability modeling, as well. And I definitely recommend checking out the examples that you see here at the bottom. Especially the Black-Litterman portfolio optimization and the HRP examples. A lot of people have really done a lot of interesting research with those, so I definitely recommend checking those out.

Going a little bit deeper into the pricing and hedging side of things, we have our financial instruments toolbox that allows you to design and price a variety of kinds of financial instruments. And so we've added a lot of functionality this release around different kinds of options and different pricing methods, tree-based methods, closed form solutions, et cetera. But the biggest change is this first one that you see up here. We've really converted this all to an object oriented framework for pricing your instruments.

So as you can see in the diagram over here, we now have a general instrument and pricer object that you can use. And as an argument of those, you specify the type of instrument and pricer that you want to use. Same for your rate curve and model objects that feed into those. And so your code here that used to look fairly different for these different kinds of object-- sorry, these different kinds of instruments and these different kinds of pricers will now look a lot more similar, and you can reuse a lot of that code and easily change it for different pricing applications. So I definitely recommend checking that out if you're doing this kind of pricing work.
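A minimal sketch of that object-based pattern, assuming Financial Instruments Toolbox R2020a or later. It prices a European vanilla call and put with a Black-Scholes analytic pricer; the zero curve, strike, volatility, and spot price are invented for illustration.

```matlab
% Rate curve object (hypothetical zero rates).
Settle = datetime(2020, 1, 15);
ZeroDates = Settle + calyears([1 2 3 5])';
ZeroRates = [0.010 0.012 0.014 0.017]';
curve = ratecurve('zero', Settle, ZeroDates, ZeroRates);

% Instrument object: the first argument selects the instrument type.
callOpt = fininstrument("Vanilla", 'ExerciseDate', datetime(2021, 1, 15), ...
    'Strike', 100, 'OptionType', "call", 'ExerciseStyle', "european");

% Model and pricer objects: the first argument selects the model and pricer type.
model = finmodel("BlackScholes", 'Volatility', 0.2);
pricer = finpricer("analytic", 'DiscountCurve', curve, 'Model', model, 'SpotPrice', 100);

callPrice = price(pricer, callOpt);

% Reusing the same pricer for a different instrument only changes one object.
putOpt = fininstrument("Vanilla", 'ExerciseDate', datetime(2021, 1, 15), ...
    'Strike', 100, 'OptionType', "put", 'ExerciseStyle', "european");
putPrice = price(pricer, putOpt);
disp([callPrice putPrice]);
```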

On the econometrics and time series forecasting side, we've added some powerful new time series forecasting models, some additional tests and feature selection options. And one big feature that we added a few releases ago that has definitely really impacted how we do econometric modeling and time series modeling is the econometric modeler app. And so I can jump into MATLAB to give you a sense of what this looks like.

So this will be the first of the kind of apps that I mentioned before that we're going to see here today. And so I have some data from the S&P 500 that I can load into MATLAB here. You can see it's gone into this index variable. And so I can go ahead and open that up, and you can see it's just a straight column variable that shows the S&P 500 values over time.

And so from there, I can go over to my apps tab. And these are all the apps that are available in MATLAB that correspond to all those different workflows that we can make really easy and interactive. So as you can see, there's a lot of them for a lot of different application areas. And I definitely recommend if you've never looked at this checking these out to see if there's any that correspond to things that you work with a lot. Because they can probably save you some time and make your life a little bit easier the next time that you're going through that workflow.

For our purposes, we're going to use the econometric modeler right here. And so we can open that up. This popped up on my other monitor here.

And so from here, we can start by bringing our data into this workspace here. So we can import our index data, and we can see the plot come up here. So this is my S&P 500 value over time. And so from here, I can really go through my full time series modeling workflow.

So if I want to do a test for stationarity as a first step, I can go into my tests here, select a KPSS test, and run that. Although we're pretty sure it's not stationary, and we can see that that is confirmed by the results of the test. So as a result, maybe I want to difference this. So I can see there's a button here for differencing, and I can go ahead and use that to difference my time series here. And if I want to run that test again to confirm, I can do that and see that this time it seems to indicate that it is stationary, which is what we'd expect.

From there, I can take a look at my ACF, PACF plots, and do any other diagnostics that I might want to see as I'm going through this process of figuring out how I'm going to construct this model and what kind of model might be able to accurately capture this time series. And so having done all that, we can go ahead and actually fit a model to this. And so I can see through this dropdown here all of the kinds of models that are available to me, and for now we're just going to do a simple ARIMA model.

And so when I click on that, this is going to give me the option to choose all my parameters here. So we did one degree of integration, and let's say we want two autoregressive and two moving average orders. This will show me the equation here, and then I can go ahead and estimate it.

And this will actually go ahead and fit this ARIMA model for me. And it will show me how well it fit and give me a bunch of important measures like the goodness of fit, the actual parameters that it estimated, and a plot of the residuals. And from here, if I want to go ahead and do some diagnostics, I have a bunch of things that I can look at here.
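The steps the app performs here can be sketched in code with Econometrics Toolbox functions. The series below is simulated from a made-up ARIMA(2,1,2) process rather than taken from the S&P 500 data in the demo, so the test outcomes and estimates will differ from what the session shows.

```matlab
% Simulate a hypothetical integrated series to stand in for the index data.
rng(1);
TrueMdl = arima('Constant', 0.5, 'D', 1, 'AR', {0.5, -0.3}, ...
    'MA', {0.4, 0.2}, 'Variance', 100);
y = simulate(TrueMdl, 500);

% KPSS test on the level and on the first difference.
% h = true means the null hypothesis of stationarity is rejected.
hLevel = kpsstest(y);
hDiff = kpsstest(diff(y));

% ARIMA(2,1,2): two AR lags, one degree of integration, two MA lags.
Mdl = arima(2, 1, 2);
EstMdl = estimate(Mdl, y, 'Display', 'off');

% Residuals for diagnostics such as a histogram.
res = infer(EstMdl, y);
histogram(res);
```

In the app, the generate function export option produces code along these lines so the model can be refit later.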

So if I want to do a residual histogram, that can pop up here, as well. And we can see as expected, this is pretty well distributed around 0, which is what we kind of expect to see here. And then once I'm done doing this process, in reality, I would obviously run more tests. I would probably try out a few different kinds of models and examine how a few of them looked and how well they performed on this data. But once I've done all of that, I probably end up with a model that I feel comfortable with that I want to use for my time series forecasting ultimately.

And once I've reached that point, I can go up to my export section here, and I have a few options. I can just export that model and any other variables that I want to my workspace to forecast or work with it however else I want. I can also generate a function to go through all of this and actually refit that model at any point in the future that I want, and this is something that I'll talk more about a little bit later.

And one thing that I really like about the econometric modeler is this last option here, the ability to generate a report. And so I'm going to go ahead and do that for now. And I can choose what I want to include in my report here and then click OK. And I'm just going to call this my report.

And what this is actually going to do is go through all of the things that I've done, all of the plots that I've made, all the tests that I've performed, all the way up through fitting the model and doing that residual analysis. And it will run all of those, display all the mathematics, display all of the visual outputs, and it will compile all of those things into a PDF style report that I can use to really capture my whole workflow, my thought process, and make sure that I have an artifact of the steps that I took when performing this time series analysis. And so this is loading now. This just popped up on my other monitor.

So we can see this PDF that's a result of this, and it goes through kind of all the same steps that we did. We can see the expression of the KPSS test and the results, the results of our differencing, the plots that we created, and ultimately the equation, and statistics, and plot of our model fit as well as our residual diagnostics here. So this is really powerful for capturing and documenting your workflow as you're doing this kind of time series analysis. So I will close out of this for now.

And so again, I definitely recommend checking this out if you do this kind of work. Going into the functionality that MATLAB has for risk management, we have a lot of options for corporate and consumer credit risk as well as market risk. We have the ability to model probability of defaults using copulas, we have the ability to create credit scorecards for your consumer credit risk, and we also have a full backtesting framework that contains a wide suite of models for backtesting your value at risk and expected shortfall models, as well.

And finally on the trading front, we have functionality that allows you to execute trades directly from MATLAB using our Trading Toolbox. And some new functionality in recent releases here has been the incorporation of transaction cost analysis and the ability to do that and also to run calculations to determine the optimal volume and the minimum possible transaction costs for your trades before you execute them. And we've also incorporated support for Wind Data Feed Services, as well.

And so moving on from some of our kind of baseline financial modeling, let's talk about how we can think in models. And so a question that we hear a lot of interest in around this area is how can I jump into advanced analytics if I'm new to MATLAB? So maybe I'm comfortable using traditional financial models or I'm really comfortable using optimization or machine learning, whatever it may be. But if I'm new to MATLAB, some of these techniques can be fairly daunting or they're fairly complicated, and it can be a lot of overhead to learn some of these things in a new language. And so the question is, how can we make this as easy as possible in MATLAB?

And one of the beauties of MATLAB here is that we offer a consolidated platform for all of these kinds of analysis. So whether you're doing traditional financial analysis or your baseline mathematical analysis, optimization, regression, all the way up to more cutting edge or research-based techniques-- machine learning, deep learning, natural language processing, whatever it may be-- we have one platform that you can use for all of these things. So that you can experiment with these different kinds of techniques, figure out how they work, and see what works best for you. And on top of that, we offer really easy, really interactive workflows for a lot of these things, as well.

So as an example of this, let's take a look at what it looks like to go through a machine learning workflow in MATLAB. So what I can do here is take some credit rating data that I have, and so we'll open this up in MATLAB. And you can see I have some numeric and categorical indicators, and ultimately what I have here is a bond rating.

And so maybe I have data like this from a rating service, and I want to reverse engineer this rating engine to be able to use it on things that the big rating companies may not provide ratings for. Maybe I have private equity or non-public companies that I want to value in this way or provide ratings for in this way, and so I want to create my own engine. And maybe I want to use machine learning to do this.

So I have data like this. I have data to jumpstart the process, and I can go ahead and use our import tool here to bring this into MATLAB from the Excel sheet where it's living now. And then ultimately, I have my newdata.mat file here that I'm going to load up. And if I take a look at this, I can see it looks pretty much the same as the data we were just looking at. It just doesn't have a rating yet. So my goal is going to be to provide ratings for this list of assets, as well.

So to kick off my machine learning workflow, we're going to again be using one of these apps that you just saw. So we have a whole category of apps here for machine learning and deep learning to help walk you through these workflows, and so today this is a classification problem. So we're going to be looking at the Classification Learner. And we have a companion app that looks and feels very similar for regression-based problems, as well.

So let's go ahead and open up the Classification Learner for this. This will start up a session here. I can begin a session by bringing in my data from my workspace here. So I have my credit rating data. I can specify that I want to predict rating, and then we can see it displays all my predictors here, and I can select or deselect any that I want. So for example here, ID is not going to really correlate with any of these variables since it's just a numeric recordkeeping identifier. So I can deselect that so that it's not part of my analysis.

I can choose a method for validation here, as well, in order to prevent overfitting on my data. So I'm just going to leave this at the standard recommendation, and you can read more about validation here, too, if you're wondering how this works and what this does. But ultimately, this is what's going to be used for our model selection.

And so I can start this session here, and the first thing that's going to do is bring my data in and plot it for me. So it's going to show me what these predictors look like by class. So I can see all of my color coded classes here, and I can see how this data looks with different combinations of these. See how my classes seem to be split up as combinations of these predictors. Maybe based on this, I could do some feature selection if I like, or principal component analysis, or specify some misclassification costs.

And once I've done those pre-modeling steps, I can go ahead and start to train models here. So you can see when I open this drop-down menu here, I have a large menu of different kinds of classifiers available to me here. So if I want to start off with maybe all of my tree based models, I can select that and go ahead and press train here. So you can see it'll train these here, and you might notice it's training a few of these at the same time. And that is because I have this use parallel button selected up here.

And so pressing that has basically told MATLAB, I have some hardware resources. I want you to use any parallel resources that I have to make this as fast as possible. And so MATLAB then is going to pick up the four cores that I have on my laptop here and realize that it can train up to four models at a time on these four cores, and it's going to do exactly that.

So it's trained these three tree models. I can throw maybe a couple of ensemble models in there for good measure just to experiment with some different kinds here and see what the results are. And then as results begin to come in for these, I can start to take a look at how these performed here. So for example, if I look at this model here, I can see down in the bottom left-hand corner here I have some baseline results like accuracy, the total cost that I incurred, prediction speed, training time-- things that are important as I'm selecting a model here.

And I also have a bunch of graphical tools at my disposal. So if I want to see how this performed at a class-by-class level, I can open up my confusion matrix and get a sense of how that performed here. And so looking at these, we can see, for example, that this particular model performed really well on AAA-rated bonds, but it suffered on BB-rated bonds here it looks like. So depending on where I want to place my emphasis, this may be some information I use to select different models, or maybe incorporate misclassification costs to steer my model back to being on track. Things like that.
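For readers who prefer to see the same check in code, here is a minimal sketch of cross-validating a tree and reading its confusion matrix. It assumes Statistics and Machine Learning Toolbox is installed; the data is a synthetic stand-in (two made-up numeric predictors and three made-up rating classes), not the credit rating data from the session.

```matlab
% Hypothetical stand-in data: two numeric predictors and a three-class rating.
rng(0);                                   % reproducible random numbers
n = 300;
X = randn(n, 2);
score = X(:,1) + 0.5*X(:,2);
labels = repmat("BBB", n, 1);             % default class
labels(score >  0.7) = "AAA";
labels(score < -0.7) = "BB";
Rating = categorical(labels);

% Train a classification tree and estimate accuracy with 5-fold cross-validation.
tree       = fitctree(X, Rating);
cvTree     = crossval(tree, 'KFold', 5);
cvAccuracy = 1 - kfoldLoss(cvTree);       % fraction classified correctly out of fold

% Class-by-class view: rows are true classes, columns are predicted classes.
predicted  = kfoldPredict(cvTree);
[C, order] = confusionmat(Rating, predicted);
disp(order');
disp(C);
```

Large off-diagonal counts in a given row of `C` point to the classes where the model struggles, which is the same information the app's confusion matrix plot shows.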

So once I've done this analysis, a common next step is to then tune the parameters of these models. So these models were trained using some kind of baseline settings, but I might want to go into the particular hyperparameters of a model and change some of them to see how this impacts my training. And so in MATLAB, we now have the ability right within this app to actually tune these using an optimization routine.

So if I say I'm most comfortable with tree models, and I want to choose a tree and optimize the hyperparameters for that tree, you see I have an optimizable tree option here. So I can go ahead and click that. And when I start the training for this, what this is actually going to do is perform a Bayesian optimization routine over the space of all the possible hyperparameters for these tree-type models.

So this is going to perform a pretty efficient form of optimization over these in order to train a bunch of these different tree models with these different parameter combinations and see what actually performs best, what has the lowest classification error on the particular training set that I'm dealing with. So we'll give this a moment to finish up here. And ultimately, when this is finished, what we'll have is a fully tuned machine learning model.
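Outside the app, a comparable tuning run can be sketched at the command line. This is an illustration under assumptions, not the code the app generates: the data is synthetic, and the `'auto'` setting of `fitctree` tunes only the minimum leaf size rather than every tree hyperparameter.

```matlab
% Hypothetical stand-in data (same construction idea as a toy rating problem).
rng(1);
n = 300;
X = randn(n, 2);
score = X(:,1) + 0.5*X(:,2);
labels = repmat("BBB", n, 1);
labels(score >  0.7) = "AAA";
labels(score < -0.7) = "BB";
Rating = categorical(labels);

% Bayesian optimization of tree hyperparameters, limited to 30 evaluations.
opts = struct('MaxObjectiveEvaluations', 30, ...
              'ShowPlots', false, ...
              'Verbose', 0);
tunedTree = fitctree(X, Rating, ...
    'OptimizeHyperparameters', 'auto', ...
    'HyperparameterOptimizationOptions', opts);

% Inspect what the optimizer settled on.
results = tunedTree.HyperparameterOptimizationResults;
disp(results.XAtMinObjective);   % best hyperparameter values found
disp(results.MinObjective);      % lowest cross-validated classification error
```

The objective being minimized is the cross-validated misclassification rate, so a lower `MinObjective` means a better model.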

So this performs 30 iterations here, and we can see that this tuned model performs a little bit better than the best of the trees here. And let's say this is the model that I want to go with. I'm happy with this and I want to use this for my rating engine.

So from there, I have a few options here. I can export this model directly to my workspace, which I'm going to go ahead and do. And we're just going to call it trained model. That'll be fine.

But I have a couple of other options that I have, as well. And we see again here this generate function button that I promised before that I would get into, and we're going to use that here. So what this serves to do is that when I'm going through a workflow interactively like this, it's great. It makes my life easy, but it does beg the question of, how am I going to automate this and really bring this into a production setting?

And so if I click this generate function button, what this is actually going to do is generate a bunch of code that will programmatically perform a lot of the things that I did interactively. So you can see my feature selection being done here. You'll notice the ID that I deselected is missing here. The optimized parameters that this chose are incorporated here. It performs the training, and ultimately it performs the cross-validation and gives me the statistics around that, as well.

So this really replicates this whole training workflow that I just interactively outlined and allows me to take this code and integrate it into my production settings so that I can say, hey, every time I get this new chunk of data, retrain this. Give me the latest version of this model. And so getting back to our base workspace here, you can see we have our trained model in my workspace. And so I can go ahead and use it to classify these new data points. And we have our ratings for the assets that we wanted.
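As a rough sketch of that last step, the snippet below trains a tree on a small table and classifies unseen rows. The variable names `Leverage` and `Profitability` and the data itself are hypothetical, chosen only so the example runs on its own.

```matlab
% Hypothetical training table: two made-up predictors and a made-up rating.
rng(2);
n = 200;
train = table(randn(n,1), randn(n,1), ...
    'VariableNames', {'Leverage', 'Profitability'});
isHigh = train.Profitability - train.Leverage > 0;
train.Rating = categorical(isHigh, [true false], {'AAA', 'BB'});

% Train on the table, naming the response variable.
model = fitctree(train, 'Rating');

% New, unrated assets: same predictor columns, no Rating column.
newData = table([-1; 1], [1; -1], ...
    'VariableNames', {'Leverage', 'Profitability'});

% The one line that produces ratings for the new assets.
newRatings = predict(model, newData);
disp(newRatings);
```

For a model exported from Classification Learner, the equivalent call goes through the exported structure's prediction function, for example `trainedModel.predictFcn(newData)`, where `trainedModel` is whatever name was chosen at export.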

And so taking a moment to step back and think about what we did here, I can be a machine learning expert and not have a ton of experience in MATLAB, and I will pretty easily be able to work my way through this. Because what we did is just import our data, we performed feature selection, we performed model selection, we tuned our hyperparameters using an optimization routine, we examined the results and generated code that we would be able to integrate to periodically retrain this model, and we did all of that without writing a single line of MATLAB code. I only wrote this one line to ultimately classify this new data. And so this speaks to my ability using MATLAB to really get into these workflows and really be able to think at the level of modeling, and at the level of optimization, at the level of machine learning without tripping over individual lines of code along the way.

So we can clean this up here. And in a similar way to these apps that we have to make machine learning a little bit simpler, we have similar apps to try to make deep learning as accessible as possible. So the first of a pair of apps that we have for this is our Deep Network Designer, and this is going to allow you to visualize and interactively create deep networks here. And so you can see here I'm going to open up a pre-trained model in this video, and we can visualize this model, see how the layers interconnect, and actually drag and drop new layers and tweak options, all within that view.

Once I've done that, I can go ahead and import training data. Select all of my training options and actually interactively train this and keep an eye on the results as I'm training this deep network, as well. And so that's to help you design these networks. But at a more kind of meta level, a higher level here, the process of solving a problem using deep learning is a really experimental, really iterative process.

So one of our newer apps here is our Experiment Manager, and this allows you to try out different network architectures and different parameter options, see how they perform across your test data here, and compare and contrast these methodologies. So we can see here we have a bunch of different experiments, different networks that are training with these different options here, and we can in real time monitor the training progress and see the results for any one of these experiments. And using MATLAB code, we can programmatically set up these experiments to specify the various architectures that we want to try out or the grids of training options that we want to explore. And then from that, MATLAB will automatically construct experiments from this grid, mixing and matching all of these various options that I want in order to see how these different experiments pan out and observe the results from there.

And so MATLAB really represents a full ecosystem for deep learning. We have tools to do things like labeling data as well as tools to import and export your networks from a variety of other popular frameworks, through the ONNX format here for importing and exporting these neural networks. And from TensorFlow-Keras and Caffe you can bring those networks directly into MATLAB without even having to go through ONNX. On top of that, we have the ability out of the box to do multi-GPU training for your deep networks, and the ability to generate high-performance C, C++, or CUDA code for inference, as well.

Something else that comes up a lot in this context is the ability to get the most out of your hardware by using parallel computing, and MATLAB does have a lot of options for this, all the way from parallel-enabled toolboxes and functions, where you can just specify "use my parallel resources" like we saw with the Classification Learner app and MATLAB under the hood will decide how to parallelize that, all the way down to doing your own parallel programming or even doing some pretty low-level parallel programming and specifying things like message passing, as well. So something for everybody, from just flipping a switch to have parallelism turned on all the way down to some really advanced parallel programming techniques for your more seasoned parallel programmers.

Similarly, in our newest release we have thread-based parallel pools as well as process-based parallel pools, and thread-based pools reduce memory usage and allow for faster scheduling and less data transfer for some applications. We also have the ability to scale to clusters and clouds. So if you wanted to spin up one of these clusters on AWS or Azure, that's something that can be very easily done in MATLAB. And as I mentioned before, we have support for GPU computing. Deep networks will train on GPUs out of the box, and we also have the ability for matrix math explicitly to be done on GPU.
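To make the thread-based pool idea concrete, here is a minimal sketch that assumes Parallel Computing Toolbox and a release that supports `parpool('threads')`. The simulated returns are made up purely to give each loop iteration some independent work.

```matlab
% Start a thread-based pool only if no pool is already running.
if isempty(gcp('nocreate'))
    parpool('threads');
end

% Each iteration is independent, so the loop can be split across workers.
nSim  = 8;
worst = zeros(1, nSim);
parfor k = 1:nSim
    r = 0.01 * randn(1000, 1);   % hypothetical daily returns for scenario k
    worst(k) = min(r);           % worst single-day return in that scenario
end
disp(worst);

% Shut the pool down when finished.
delete(gcp('nocreate'));
```

Swapping `'threads'` for a process-based or cluster profile leaves the `parfor` loop itself unchanged, which is what makes it practical to move the same code from a laptop to a larger resource.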

So we've talked a little bit about how we design these different kinds of models, but at the end of the day these are part of a larger software engineering project. Which begs the question, how can I write clean, robust code if I have a lot of people that I'm working with and need to really orchestrate this effort? And what MATLAB really is is a fully featured software engineering environment.

So on top of all the fancy things that we've seen that make your life a little bit easier, these interactive workflows, it also has a lot of the familiar software engineering features that as a programmer you're expecting to see and that you really need to do your job. And that means having things like class structures and unit testing frameworks. And it also means having support for DevOps tasks. So making sure that it works seamlessly with your CI/CD workflows and things like source control.

And so we have a fully featured unit testing framework, and it's one that works with continuous integration servers. We have our performance testing framework as well to see how performant and how efficient your code is, as well as a framework for testing your visual applications. And new in our latest release we also have a Jenkins plug-in for incorporating your workflow with Jenkins and doing things like automating building and testing and producing the reports that result from that.
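A class-based test in the unit testing framework might look like the sketch below. The test class name `NormalVarTest` and the helper `normalVar` are hypothetical, written only for this illustration, and `norminv` assumes Statistics and Machine Learning Toolbox.

```matlab
% File: NormalVarTest.m (hypothetical test class for illustration)
classdef NormalVarTest < matlab.unittest.TestCase
    methods (Test)
        function varIncreasesWithConfidence(testCase)
            % A higher confidence level should never give a smaller VaR.
            v95 = normalVar(0, 0.02, 0.95);
            v99 = normalVar(0, 0.02, 0.99);
            testCase.verifyGreaterThan(v99, v95);
        end

        function matchesKnownQuantile(testCase)
            % The 95% one-sided standard normal quantile is about 1.645.
            testCase.verifyEqual(normalVar(0, 1, 0.95), 1.6449, 'AbsTol', 1e-3);
        end
    end
end

% Local helper (hypothetical): parametric VaR under a normal distribution.
function v = normalVar(mu, sigma, level)
v = -(mu + sigma * norminv(1 - level));
end
```

Saved as `NormalVarTest.m`, the class can be run with `results = runtests('NormalVarTest')`, and a continuous integration server can run the same test files on every commit.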

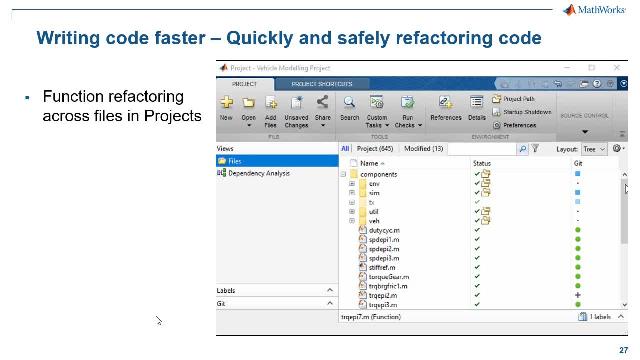

So when collaborating with other MATLAB developers, a big key here is a feature that came out in R2019a called MATLAB Projects. And this allows you to do a lot of nice things like configure a development environment, do your dependency analysis, and integrate with source control. And let's jump into MATLAB to see what that looks like.

So you can see here, I have a folder that has a few different scripts here. These are different value at risk models and different ways to calculate that. And I also have some functionality that this depends on-- data, models, helper functions, tests. And this is all wrapped up in our project here. And so in a scenario where I've inherited this from somebody else, I probably want to open up this project in order to get started.

So if I click on that, MATLAB will go ahead and start up this project. It'll add all of these things to my path that I need, and it'll do things like run any automated scripts to set up my environment that I specify when I'm creating this project and things like that. So from here, if I'm trying to get a sense of this code, I can go into my dependency analyzer and run a dependency analysis to see how these things relate to one another. And I can get a sense of that here and see this in a graphical fashion.

Similarly, you may have noticed when I went into this project view here, I had a bunch of options come up here related to Git. So this is already something that I've linked to Git. And so I have the ability from my MATLAB environment to actually manage these Git-based workflows.

So for example here, let's say I went back to my normal value at risk calculation and I wanted to add a 97% calculation to this, and I go ahead and save this. Now you'll notice that in my project view, in my Git column here, I now have a blue square next to this to indicate that I have an uncommitted change here. And so I can go ahead and make that commit directly from here, say I added a 97% VaR, and it will make this commit. This will go back to green. I can push it from there if I want, or I can just inspect my various branches here and see my commit history, as well.
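The script edited in the session is not shown, so the following is only a guess at what adding a 97% level to a normal-distribution value at risk calculation could look like. The returns are simulated, and `norminv` assumes Statistics and Machine Learning Toolbox.

```matlab
% Hypothetical daily returns standing in for the portfolio data.
rng(3);
returns = 0.0005 + 0.01 * randn(1000, 1);

% Parametric (normal) VaR: fit mean and volatility, then read off the quantile.
mu    = mean(returns);
sigma = std(returns);

% Adding 0.97 to this list is the kind of small change being committed.
levels    = [0.95 0.97 0.99];
normalVaR = -(mu + sigma * norminv(1 - levels));   % reported as a positive loss

disp(table(levels', normalVaR', 'VariableNames', {'Level', 'VaR'}));
```

Because the confidence levels sit in one vector, the change shows up as a one-line diff, which keeps the commit easy to review.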

And so this makes it pretty easy to pass these various projects around and collaborate on them with others. So, jumping back into PowerPoint here, another feature of projects that is definitely really important is that it helps with upgrading to the latest and greatest version of MATLAB. So if you have some older code and you want to see if it's possible for you to upgrade to a new version to take advantage of some new functionality, you can wrap this code in a project and run the upgrade check.

And what this will do is look for anything that may have changed between versions to see if your code will run in this most recent version or if it needs some changes in order to run correctly. And it'll notify you of what needs to be changed and point you to documentation for making that change to make sure that you're on the right track. And in scenarios where it's a one-to-one change and there's a pretty clear way to swap this out, it'll actually automatically make those changes in code for you and alert you to that, as well. So if you see something that you want in a newer release, I definitely recommend checking this out as a way to make that transition easier.

And finally, we want to see how we can collaborate and share here. And so this begs the question, what's the best way for my colleagues to access my models? And so one way that we can easily share our models with colleagues who want a more interactive interface, who maybe aren't programmers, is to use our App Designer apps.

And so MATLAB has App Designer, which is a way to create these fully featured graphical apps. This can be done in a drag and drop fashion and also by using MATLAB code to specify what happens under the hood here. And so let's jump into an App Designer app and take a look at what this process looks like. So if I go ahead and type appdesigner at the command window here, this will go ahead and open up App Designer.

And so I can start with a blank app here, and this is kind of what the beginning of any app authoring process looks like. And I can drag and drop my various components here. So if I wanted to plot something, I can drag an axes over here. I can drag a push button over here. And I can-- let's say I want to make this plot something. So I can rename this plot.

And so as easy as that, I can specify what I want my app to look like. In terms of specifying what actually happens when I interact with this thing, I can do that by specifying what are called callback functions. And so if I right-click here and go down to my callbacks, I have the option to specify, hey, what happens when I actually push this button? And I can do that, and you'll see it'll bring me to the code view here.

And you can see MATLAB's already generated a bunch of UI-based code for me that specifies all of the graphical parts, and I just have to specify my callback functions up here. So if I wanted to make this plot something, I can go ahead and specify I want this to plot. I want it to do it on the axes that I just created, and we'll have this plot, let's say, 10 random lines.

So I can save that and run this here. And just like that, I've created kind of a simple app here. And when I click my button, you can see it does as I would expect. And this is obviously just a toy example, but you can really explore a lot of advanced functionality that's available here to make really robust dashboards and other visualization tools here, as well.
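The same toy app can be approximated without App Designer by building the components programmatically. This is a sketch of the idea rather than the code App Designer generates; the window title, sizes, and positions are arbitrary choices.

```matlab
% Build a small window with an axes and a push button.
fig = uifigure('Name', 'Toy Plot App', 'Position', [100 100 560 420]);
ax  = uiaxes(fig, 'Position', [20 70 520 330]);
btn = uibutton(fig, 'push', ...
    'Text', 'Plot', ...
    'Position', [20 20 100 30]);

% Callback: each click plots the 10 columns of a 10-by-10 random matrix,
% which draws 10 random lines on the axes.
btn.ButtonPushedFcn = @(src, event) plot(ax, rand(10));
```

Inside App Designer, the equivalent logic would live in the generated button callback and refer to the axes through the app object, along the lines of `plot(app.UIAxes, rand(10))`, with the component name depending on what the designer assigned.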

Once you've created your apps, sharing them is as easy as going to the Share button that you see over here. And you can see I have the option to package it up as a MATLAB app, and this is for giving it to other MATLAB users. I can create a standalone desktop app, and this will be an executable that I can share even with people who don't have MATLAB installed. And finally, I can actually even share this as a web app that'll make it available over my internet connection for anyone to access right in a web browser.

And so sharing as a web app is a new capability, and it's something that has really opened a lot of doors for people who want to share their MATLAB-based visual applications. And this also supports authentication so that you can control access to these, as well. If you have more functional code that you want to share, so something that doesn't have a GUI in front of it, we have options to deploy this kind of functional code to other languages doing things like compiling libraries for Python or Java. Even compiling Excel add-ins so that your colleagues using other languages, other tools can get the full functionality out of your MATLAB code, as well.

We also have the ability to integrate MATLAB analytics into a full production enterprise environment. And that means the ability to spin up a server and deploy your MATLAB code to run as a RESTful endpoint that can be accessed by people from anywhere over the open internet, as well. And so this is something that has definitely been a game changer for a lot of companies for sharing their code and opening up access. So we're always happy to talk about this if you have an interest in this, as well.

And as you would expect, you can also use MATLAB from a variety of other programming languages and also call those languages from MATLAB, as well. And finally, I realize we're right up against time here, but I do want to take a moment to talk a little bit about the services that we offer. We offer customized training courses that can be delivered by one of our training engineers to help you get up and running on specific topics in MATLAB, and we also have a full consulting arm that allows you to tackle problems that have a shorter timeline with the full backing of MATLAB engineers. And in an effort to keep this from being a black box solution, our consultants also train your engineers on how the solution works and how to maintain it in the future.

And that is all that I have today. And so if you have any questions, you can feel free to contact us. And I realize we're right at time, but I can look and see if there is a question or two I can take live now.