| All algorithms |

Algorithm | Choose between 'trust-region-dogleg' (default), 'trust-region', and 'levenberg-marquardt'. The Algorithm option specifies a preference for which algorithm to use. It is only a preference because, for the trust-region algorithm, the nonlinear system of equations cannot be underdetermined; that is, the number of equations (the number of elements of F returned by fun) must be at least the length of x. Similarly, for the trust-region-dogleg algorithm, the number of equations must equal the length of x. fsolve uses the Levenberg-Marquardt algorithm when the selected algorithm is unavailable. For more information on choosing the algorithm, see Choosing the Algorithm.

To set some algorithm options using optimset instead of optimoptions:

Algorithm: Set the algorithm to 'trust-region-reflective' instead of 'trust-region'.

InitDamping: Set the initial Levenberg-Marquardt parameter λ by setting Algorithm to a cell array such as {'levenberg-marquardt',.005}.

|
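As a minimal sketch of the two ways to select the algorithm described above, the following sets the Levenberg-Marquardt algorithm first with optimoptions and then with optimset. The two-equation system and the starting point are hypothetical and chosen only for illustration; Optimization Toolbox is assumed to be installed.

```matlab
% Hypothetical 2-by-2 nonlinear system, used only for illustration.
fun = @(x) [2*x(1) - x(2) - exp(-x(1));
            -x(1) + 2*x(2) - exp(-x(2))];
x0 = [0; 0];

% optimoptions: name the algorithm directly.
opts = optimoptions('fsolve', ...
    'Algorithm','levenberg-marquardt', ...
    'Display','off');
[x,fval,exitflag] = fsolve(fun,x0,opts);

% optimset: pass the initial damping parameter in a cell array.
oldopts = optimset('Algorithm',{'levenberg-marquardt',.005}, ...
    'Display','off');
[xOld,fvalOld,exitflagOld] = fsolve(fun,x0,oldopts);
```

Both calls should reach the same root; only the way the options are specified differs.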

CheckGradients | Compare user-supplied derivatives (gradients of the objective or constraints) to finite-difference derivatives. The choices are true or the default false. For optimset, the name is DerivativeCheck and the values are 'on' or 'off'. See Current and Legacy Option Names. The CheckGradients option will be removed in a future release. To check derivatives, use the checkGradients function. |

| Diagnostics | Display diagnostic information about the function to be minimized or solved. The choices are 'on' or the default 'off'. |

| DiffMaxChange | Maximum change in variables for finite-difference gradients (a positive scalar). The default is Inf. |

| DiffMinChange | Minimum change in variables for finite-difference gradients (a positive scalar). The default is 0. |

Display | Level of display (see Iterative Display):

'off' or 'none' displays no output.

'iter' displays output at each iteration and gives the default exit message.

'iter-detailed' displays output at each iteration and gives the technical exit message.

'final' (default) displays only the final output and gives the default exit message.

'final-detailed' displays only the final output and gives the technical exit message.

|

FiniteDifferenceStepSize | Scalar or vector step size factor for finite differences. When you set FiniteDifferenceStepSize to a vector v, the forward finite differences delta are

delta = v.*sign′(x).*max(abs(x),TypicalX);

where sign′(x) = sign(x) except sign′(0) = 1. Central finite differences are

delta = v.*max(abs(x),TypicalX);

A scalar FiniteDifferenceStepSize expands to a vector. The default is sqrt(eps) for forward finite differences and eps^(1/3) for central finite differences. For optimset, the name is FinDiffRelStep. See Current and Legacy Option Names. |
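The step formulas above can be evaluated directly. The sketch below computes the forward and central steps for a hypothetical point x, assuming the default TypicalX and the default step size factors; the variable names are illustrative and are not part of the fsolve interface.

```matlab
x = [0; -2; 1e-3; 50];             % hypothetical current point
typicalX = ones(size(x));          % default TypicalX

% Forward differences: default factor sqrt(eps), expanded to a vector.
v = sqrt(eps) * ones(size(x));
signPrime = sign(x);
signPrime(x == 0) = 1;             % sign'(0) is defined as 1
deltaForward = v .* signPrime .* max(abs(x), typicalX);

% Central differences: default factor eps^(1/3), no sign term.
vCentral = eps^(1/3) * ones(size(x));
deltaCentral = vCentral .* max(abs(x), typicalX);
```

Note that max(abs(x),TypicalX) keeps the step from collapsing when a component of x is zero or very small, and the sign term makes the forward step point away from zero.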

FiniteDifferenceType | Finite differences, used to estimate gradients, are either 'forward' (default) or 'central' (centered). 'central' takes twice as many function evaluations but should be more accurate. The algorithm is careful to obey bounds when estimating both types of finite differences. So, for example, it can take a backward difference rather than a forward difference to avoid evaluating at a point outside the bounds. For optimset, the name is FinDiffType. See Current and Legacy Option Names. |

FunctionTolerance | Termination tolerance on the function value, a positive scalar. The default is 1e-6. See Tolerances and Stopping Criteria. For optimset, the name is TolFun. See Current and Legacy Option Names. |

| FunValCheck | Check whether objective function values are valid. 'on' displays an error when the objective function returns a value that is complex, Inf, or NaN. The default, 'off', displays no error. |

MaxFunctionEvaluations | Maximum number of function evaluations allowed, a positive integer. The default is 100*numberOfVariables for the 'trust-region-dogleg' and 'trust-region' algorithms, and 200*numberOfVariables for the 'levenberg-marquardt' algorithm. See Tolerances and Stopping Criteria and Iterations and Function Counts. For optimset, the name is MaxFunEvals. See Current and Legacy Option Names. |

MaxIterations | Maximum number of iterations allowed, a positive integer. The default is 400. See Tolerances and Stopping Criteria and Iterations and Function Counts. For optimset, the name is MaxIter. See Current and Legacy Option Names. |

OptimalityTolerance | Termination tolerance on the first-order optimality (a positive scalar). The default is 1e-6. See First-Order Optimality Measure. Internally, the 'levenberg-marquardt' algorithm uses an optimality tolerance (stopping criterion) of 1e-4 times FunctionTolerance and does not use OptimalityTolerance. |

OutputFcn | Specify one or more user-defined functions that an optimization function calls at each iteration. Pass a function handle or a cell array of function handles. The default is none ([]). See Output Function and Plot Function Syntax. |

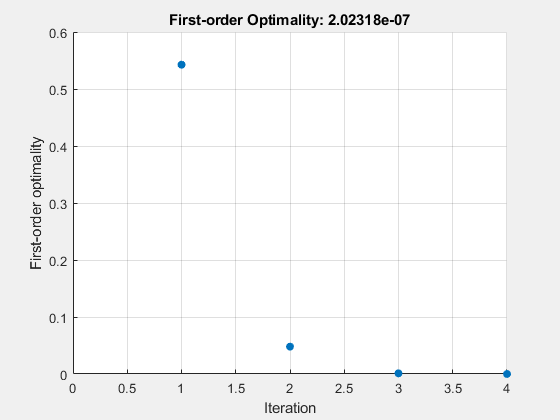

PlotFcn | Plots various measures of progress while the algorithm executes; select from predefined plots or write your own. Pass a built-in plot function name, a function handle, or a cell array of built-in plot function names or function handles. For custom plot functions, pass function handles. The default is none ([]):

'optimplotx' plots the current point.

'optimplotfunccount' plots the function count.

'optimplotfval' plots the function value.

'optimplotstepsize' plots the step size.

'optimplotfirstorderopt' plots the first-order optimality measure.

Custom plot functions use the same syntax as output functions. See Output Functions for Optimization Toolbox and Output Function and Plot Function Syntax. For optimset, the name is PlotFcns. See Current and Legacy Option Names. |

SpecifyObjectiveGradient | If true, fsolve uses a user-defined Jacobian (defined in fun), or Jacobian information (when using JacobianMultiplyFcn), for the objective function. If false (default), fsolve approximates the Jacobian using finite differences. For optimset, the name is Jacobian and the values are 'on' or 'off'. See Current and Legacy Option Names. |

StepTolerance | Termination tolerance on x, a positive scalar. The default is 1e-6. See Tolerances and Stopping Criteria. For optimset, the name is TolX. See Current and Legacy Option Names. |

TypicalX | Typical x values. The number of elements in TypicalX is equal to the number of elements in x0, the starting point. The default is ones(numberofvariables,1). fsolve uses TypicalX to scale finite differences for gradient estimation. The trust-region-dogleg algorithm uses TypicalX as the diagonal terms of a scaling matrix. |

UseParallel | When true, fsolve estimates gradients in parallel. Disable the option by setting it to the default, false. See Parallel Computing. |
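To illustrate how several of the options above combine, the following sketch supplies an analytic Jacobian through SpecifyObjectiveGradient and tightens FunctionTolerance. The function exampleSystem and its equations are hypothetical; the sketch assumes it is saved as a script, where local functions must appear at the end of the file (MATLAB R2016b or later).

```matlab
% Tell fsolve that the objective also returns the Jacobian.
opts = optimoptions('fsolve', ...
    'SpecifyObjectiveGradient',true, ...
    'FunctionTolerance',1e-8, ...
    'Display','off');
[x,fval,exitflag] = fsolve(@exampleSystem,[0;0],opts);

function [F,J] = exampleSystem(x)
% Hypothetical system F(x) = 0 together with its analytic Jacobian.
F = [2*x(1) - x(2) - exp(-x(1));
     -x(1) + 2*x(2) - exp(-x(2))];
if nargout > 1
    % J(i,j) is the partial derivative of F(i) with respect to x(j).
    J = [2 + exp(-x(1)), -1;
         -1,             2 + exp(-x(2))];
end
end
```

Computing J only when nargout > 1 avoids unnecessary work on calls where fsolve requests only the function value.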

| Trust-region algorithm |

JacobianMultiplyFcn | Jacobian multiply function, specified as a function handle. For large-scale structured problems, this function computes the Jacobian matrix product J*Y, J'*Y, or J'*(J*Y) without forming J. The function has the form

W = jmfun(Jinfo,Y,flag)

where Jinfo contains data used to compute J*Y (or J'*Y, or J'*(J*Y)). The first argument Jinfo is the second argument returned by the objective function fun, for example, in

[F,Jinfo] = fun(x)

Y is a matrix that has the same number of rows as there are dimensions in the problem. flag determines which product to compute:

If flag == 0, W = J'*(J*Y).

If flag > 0, W = J*Y.

If flag < 0, W = J'*Y.

In each case, J is not formed explicitly. fsolve uses Jinfo to compute the preconditioner. See Passing Extra Parameters for information on how to supply values for any additional parameters that jmfun needs. Note: 'SpecifyObjectiveGradient' must be set to true for fsolve to pass Jinfo from fun to jmfun.

See Minimization with Dense Structured Hessian, Linear Equalities for a similar example. For optimset, the name is JacobMult. See Current and Legacy Option Names. |

| JacobPattern | Sparsity pattern of the Jacobian for finite differencing. Set JacobPattern(i,j) = 1 when fun(i) depends on x(j). Otherwise, set JacobPattern(i,j) = 0. In other words, set JacobPattern(i,j) = 1 when ∂fun(i)/∂x(j) can be nonzero. Use JacobPattern when it is inconvenient to compute the Jacobian matrix J in fun, but you can determine (say, by inspection) when fun(i) depends on x(j). fsolve can approximate J via sparse finite differences when you provide JacobPattern. In the worst case, if the structure is unknown, do not set JacobPattern. The default behavior is as if JacobPattern were a dense matrix of ones. fsolve then computes a full finite-difference approximation in each iteration. This can be very expensive for large problems, so it is usually better to determine the sparsity structure. |

| MaxPCGIter | Maximum number of PCG (preconditioned conjugate gradient) iterations, a positive scalar. The default is max(1,floor(numberOfVariables/2)). For more information, see Equation Solving Algorithms. |

| PrecondBandWidth | Upper bandwidth of the preconditioner for PCG, a nonnegative integer. The default PrecondBandWidth is Inf, which means a direct factorization (Cholesky) is used rather than conjugate gradients (CG). The direct factorization is computationally more expensive than CG, but produces a better quality step toward the solution. Set PrecondBandWidth to 0 for diagonal preconditioning (upper bandwidth of 0). For some problems, an intermediate bandwidth reduces the number of PCG iterations. |

SubproblemAlgorithm | Determines how the iteration step is calculated. The default, 'factorization', takes a slower but more accurate step than 'cg'. See Trust-Region Algorithm. |

| TolPCG | Termination tolerance on the PCG iteration, a positive scalar. The default is 0.1. |
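As a sketch of how JacobPattern can be used with the trust-region algorithm, the following builds a tridiagonal sparsity pattern for a system in which each equation depends only on a variable and its two neighbors. The system, the problem size n, and the starting point are hypothetical and serve only as an illustration.

```matlab
n = 100;  % hypothetical problem size

% Hypothetical tridiagonal system: F(i) depends on x(i-1), x(i), x(i+1).
fun = @(x) (3 - 2*x).*x - [0; x(1:end-1)] - 2*[x(2:end); 0] + 1;

% Sparse pattern with ones on the main, sub-, and superdiagonal.
Jstr = spdiags(ones(n,3), -1:1, n, n);

opts = optimoptions('fsolve', ...
    'Algorithm','trust-region', ...
    'JacobPattern',Jstr, ...
    'Display','off');
x0 = -ones(n,1);
[x,fval,exitflag] = fsolve(fun,x0,opts);
```

With the pattern supplied, the finite-difference Jacobian estimate needs far fewer function evaluations than a dense estimate would for the same n.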

| Levenberg-Marquardt algorithm |

| InitDamping | Initial value of the Levenberg-Marquardt parameter, a positive scalar. The default is 1e-2. For details, see Levenberg-Marquardt Method. |

| ScaleProblem | 'jacobian' can sometimes improve the convergence of a poorly scaled problem. The default is 'none'.

|
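The two Levenberg-Marquardt options can be set together, as in the sketch below. The system is hypothetical and deliberately poorly scaled (the first equation is multiplied by 1e4) to show where ScaleProblem might help; whether it actually improves convergence depends on the problem.

```matlab
% Hypothetical poorly scaled system with root x = [sqrt(2); sqrt(2)/2].
fun = @(x) [1e4*(x(1)^2 - 2);
            x(2) - 0.5*x(1)];

opts = optimoptions('fsolve', ...
    'Algorithm','levenberg-marquardt', ...
    'InitDamping',1e-3, ...        % smaller than the 1e-2 default
    'ScaleProblem','jacobian', ...
    'Display','off');
[x,fval,exitflag] = fsolve(fun,[1;1],opts);
```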